Scaling SignalR: Available options and key challenges

Nowadays, users increasingly expect their online experiences to be interactive, responsive, immersive, and realtime by default. And when it comes to building realtime, event-driven functionality for end-users, SignalR is one of the most popular choices available to developers. In this blog post, we’ll cover considerations related to scaling SignalR: how you do it, and the key challenges involved.

SignalR overview

In a nutshell, SignalR is a technology that enables you to add realtime functionality to web apps. You can use it to engineer features such as:

Realtime dashboards.

Live chat.

Collaborative apps (e.g., whiteboards).

Realtime location tracking.

Push notifications.

High-frequency updates (for use cases like realtime multiplayer gaming and streaming live score updates).

SignalR comes in several different flavors:

ASP.NET SignalR - a library for ASP.NET developers. Note that this version is largely outdated (only critical bugs are being fixed, but no new features are being added).

ASP.NET Core SignalR - an open-source SignalR library; unlike ASP.NET SignalR, this version is actively maintained.

Azure SignalR Service - the fully managed cloud version.

Note: This blog post focuses on ASP.NET Core SignalR and Azure SignalR Service. We’re not covering ASP.NET SignalR, which is unlikely to be a good tech choice for building scalable, production-ready systems (since it’s not actively maintained and won’t benefit from any new features or enhancements).

SignalR uses WebSocket as the main underlying transport, while providing additional features, such as:

Automatic connection management.

Fallback to other transports: HTTP long polling and Server-Sent Events.

The ability to simultaneously send messages to all clients connected, or to specific (groups of) clients.

Hubs to communicate between clients and servers. A SignalR hub is a high-level pipeline that enables connected servers and clients to invoke methods on each other. Two data formats are supported: a JSON-based protocol, and a binary protocol based on MessagePack.

Scaling ASP.NET Core SignalR

We’ll now cover aspects and challenges related to scaling ASP.NET Core SignalR, the open-source library.

Vertical scaling considerations

At first, vertical scaling (or scaling up) seems like an attractive proposition; it’s relatively easy to set up and maintain, as a single server is involved. You might even ask: “How many client connections can a SignalR server handle?” There's no definitive answer, though. The number of connections a SignalR server can handle depends on factors such as the hardware used and how “chatty” the connections are.

Furthermore, “How many connections can a SignalR server handle?” might not be the best question to ask, and that's because scaling up has some serious practical limitations:

Single point of failure. If your server crashes, there’s the risk of losing data, and your app will be severely affected.

Technical ceiling. You can scale only as far as the biggest option available from your cloud host or hardware supplier (either way, there’s a finite capacity).

Traffic congestion. During high-traffic periods, the server has to deal with a huge workload, which can lead to issues such as increased latency or dropped packets.

Less flexibility. Switching to a larger machine will usually involve some downtime, meaning you need to plan for changes in advance and find ways to mitigate end-user impact.

In contrast, horizontal scaling (scaling out), although significantly more complex than vertical scaling, is a more dependable model in the long run. Even if a server crashes or needs to be upgraded, you are in a much better position to protect your system’s overall availability and performance since the workload is distributed across multiple SignalR servers.

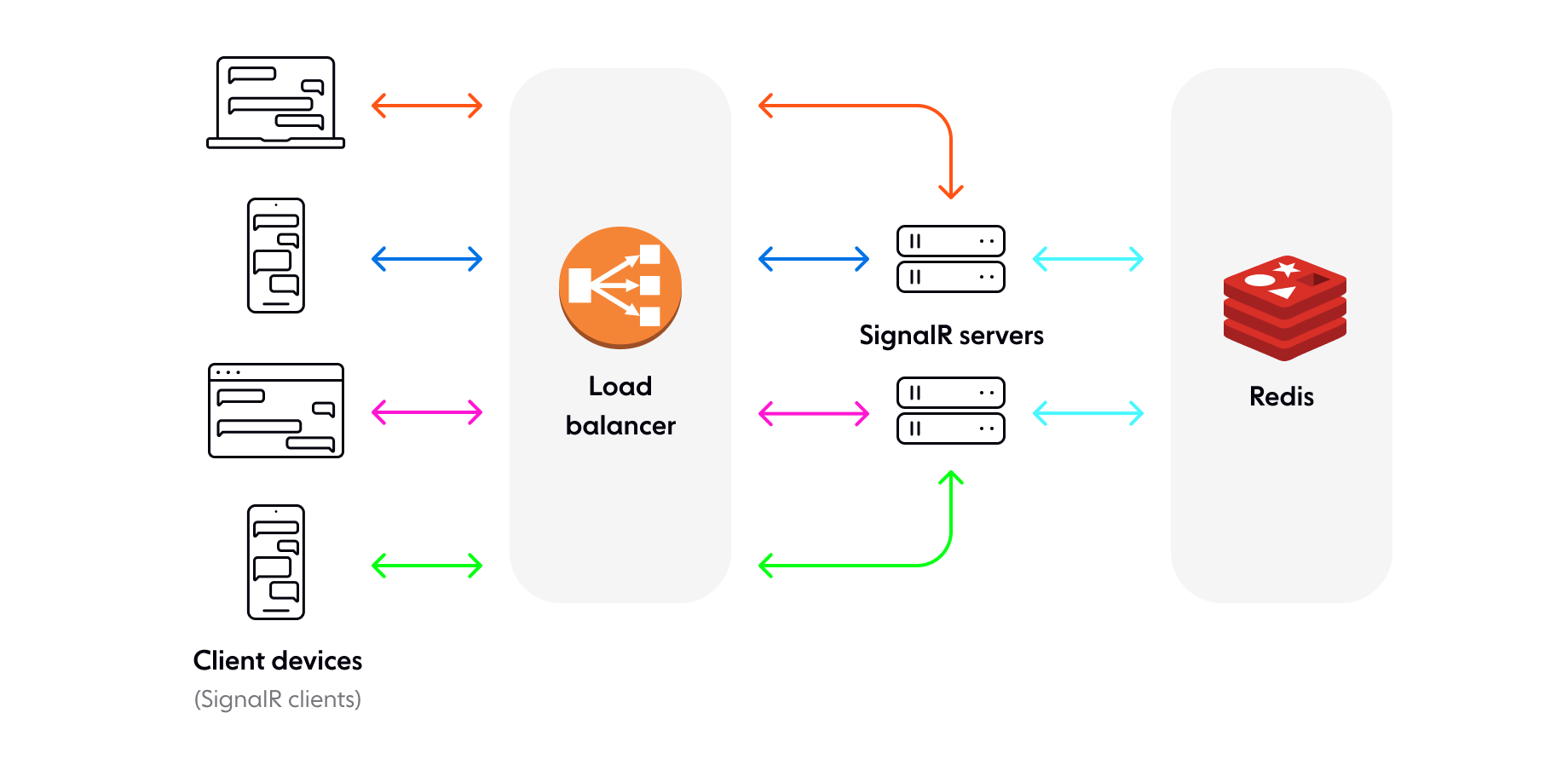

Scaling to multiple servers with Redis backplane

Scaling to multiple SignalR servers means adding a few new components to your system architecture. First of all, a load balancer (or multiple load balancers, depending on the size and complexity of your system). Secondly, you need to use a Redis backplane, to pass SignalR messages between your servers.

The load balancer handles incoming SignalR client connections and distributes the load across the server farm. By design, SignalR servers don’t communicate between themselves, and are unaware of each other’s connections. For example, let’s say we have chat users Bob and Alice. They are connected to different SignalR servers (Bob to server 1, and Alice to server 2). If Bob sends Alice a message, that message would never reach Alice, because server 2 is unaware that Bob has sent a message to server 1.

That’s why you need the Redis backplane; you can think of it as a central message store to which all the servers are simultaneously connected. The SignalR Redis backplane uses pub/sub to keep the server farm in sync. Whenever there’s a new message on a SignalR connection, the SignalR server holding that connection sends the message to the Redis backplane. All SignalR nodes are subscribed to the backplane to receive published messages and forward them to relevant connected clients. Thus, with the Redis backplane, Alice will receive Bob’s message, even though they are connected to different SignalR servers.
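To make the Bob and Alice example concrete, here is a minimal sketch of the pattern in Python. It is a toy simulation, not SignalR or Redis code: `InMemoryBackplane` and `ChatServer` are hypothetical names, and the backplane is just an in-process list of subscribers standing in for Redis pub/sub.

```python
from collections import defaultdict


class InMemoryBackplane:
    """Toy stand-in for the Redis backplane: one pub/sub channel shared by all servers."""

    def __init__(self):
        self.subscribers = []

    def subscribe(self, callback):
        self.subscribers.append(callback)

    def publish(self, recipient, text):
        # Every subscribed server sees every published message.
        for callback in self.subscribers:
            callback(recipient, text)


class ChatServer:
    """Hypothetical SignalR-like server that only knows about its own connections."""

    def __init__(self, name, backplane=None):
        self.name = name
        self.backplane = backplane
        self.inboxes = defaultdict(list)  # connected user -> messages delivered to them
        if backplane is not None:
            backplane.subscribe(self.deliver_local)

    def connect(self, user):
        self.inboxes[user]  # touching the key registers the connection

    def deliver_local(self, recipient, text):
        # Forward only to clients connected to this particular server.
        if recipient in self.inboxes:
            self.inboxes[recipient].append(text)

    def send(self, recipient, text):
        if self.backplane is None:
            # No backplane: the message never leaves this server.
            self.deliver_local(recipient, text)
        else:
            self.backplane.publish(recipient, text)


backplane = InMemoryBackplane()
server_1 = ChatServer("server-1", backplane)
server_2 = ChatServer("server-2", backplane)
server_1.connect("bob")
server_2.connect("alice")
server_1.send("alice", "hi from bob")  # reaches Alice via the shared backplane
```

If you construct the two servers without a backplane, the same `send` call leaves Alice's inbox empty, which is the failure described above. Note that every server receives every published message and then filters locally.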

What are the challenges of scaling ASP.NET Core SignalR?

It is well known that managing and scaling any WebSocket solution is far from trivial (primarily because WebSocket is a stateful protocol, with persistent connections). With that in mind, we’ll now cover some of the specific challenges you’ll face while scaling ASP.NET Core SignalR.

Sticky sessions impact scalability

If you scale beyond one SignalR server and plan to support HTTP long polling and Server-Sent Events as fallbacks, you will have to use sticky sessions (note that in the Azure App Service you can add sticky sessions through the ARR Affinity setting). That’s because SignalR requires the same server process to handle all HTTP requests for a specific connection.

The problem with sticky sessions (session affinity) is that they hinder your ability to scale dynamically. For example, let’s assume some of your servers are overwhelmed and need to shed SignalR connections. Even if you engineer a system that can (auto)scale dynamically, the dropped SignalR clients would keep trying to reconnect to the same (overwhelmed) servers, rather than connecting to other servers that are available.

It’s much more efficient to dynamically scale your SignalR server layer when you aren’t using sticky load balancing, but that means you can’t rely on any fallback; if the WebSocket transport isn’t supported, then your app would be unavailable to users. It’s a trade-off you will have to consider.
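The difference between the two load balancing modes can be illustrated with a small simulation. This is a sketch under simplifying assumptions: `StickyBalancer` and `RoundRobinBalancer` are hypothetical classes, and the affinity table stands in for whatever mechanism (for example, a cookie) a real load balancer uses to pin a client to a server.

```python
import itertools


class StickyBalancer:
    """Pins each client to the first server it was assigned (session affinity)."""

    def __init__(self, servers):
        self._next = itertools.cycle(servers)
        self.affinity = {}  # client id -> pinned server

    def route(self, client_id):
        if client_id not in self.affinity:
            self.affinity[client_id] = next(self._next)
        return self.affinity[client_id]


class RoundRobinBalancer:
    """Ignores client identity; each new connection goes to the next server in turn."""

    def __init__(self, servers):
        self._next = itertools.cycle(servers)

    def route(self, client_id):
        return next(self._next)


sticky = StickyBalancer(["server-1", "server-2"])
first = sticky.route("bob")
# Even if server-1 sheds Bob's connection, every reconnect lands on it again.
reconnects = [sticky.route("bob") for _ in range(3)]

round_robin = RoundRobinBalancer(["server-1", "server-2"])
# Without affinity, Bob's reconnect can land on a different server.
attempts = [round_robin.route("bob") for _ in range(2)]
```

In the sticky case, `reconnects` contains only the server Bob was first pinned to; in the round-robin case, consecutive attempts alternate between the two servers.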

Redis - bottleneck and single point of failure

Although using the Redis backplane is key to scaling horizontally, there are some pitfalls you should be aware of. Here are some of the main issues you might encounter:

Increased latency. Per the SignalR documentation, it’s recommended to deploy the Redis backplane in the same datacenter as the SignalR app; otherwise, you might experience increased network latency that degrades the performance of your system. However, even if Redis and SignalR are hosted in the same datacenter, there’s no guarantee that you’ll only benefit from low latency; the purpose of the Redis backplane is to forward messages (all messages) across the SignalR server farm. When the volume of messages and the number of servers increases, Redis can become a bottleneck.

Single point of failure. Although Redis is a robust piece of engineering, you still have to expect maintenance windows and some unexpected downtime. When your Redis instance is down, your system is severely affected. Some messages will be lost or never reach their destination, as there is no way to keep your SignalR servers in sync.

Managing SignalR in-house is difficult

Building a scalable system with SignalR (or any other similar WebSocket-based solution) in-house is expensive, time-consuming, and requires significant engineering effort. Here are some of the challenges you’ll face and the decisions you’ll have to make:

Managing complex architecture and infrastructure. Things are much simpler when you have only one server to deal with. However, when you scale horizontally, you have an entire server farm to manage and optimize, plus a load balancing layer, plus Redis. You need to ensure all these components are working and interacting in a dependable way.

Managing connections and messages. There are many things to consider and decide: how are you going to terminate (shed) and restore connections? How will you manage backpressure? What’s the best load balancing strategy for your specific use case? How frequently should you send heartbeats? How are you going to monitor connections and traffic?

Designing for high availability. Imagine you're streaming live sports updates, and it's the World Cup final or the Super Bowl. How do you ensure your system can handle tens of thousands (or even millions!) of users simultaneously connecting and consuming messages at a high frequency? You need to be able to quickly (automatically) add more servers into the mix so your system is highly available, and ensure it has enough capacity to deal with potential usage spikes and fluctuating demand.

Ensuring fault tolerance. What can you do to ensure fault tolerance and redundancy at regional and global levels?

Successfully dealing with all of the above is far from trivial. It’s safe to expect high financial costs to build the system, and additional ones to maintain and improve it. It also involves significant engineering effort, shifting focus away from core product development.

Check out our report to learn more about the challenges and costs of building and managing WebSocket infrastructure in-house.

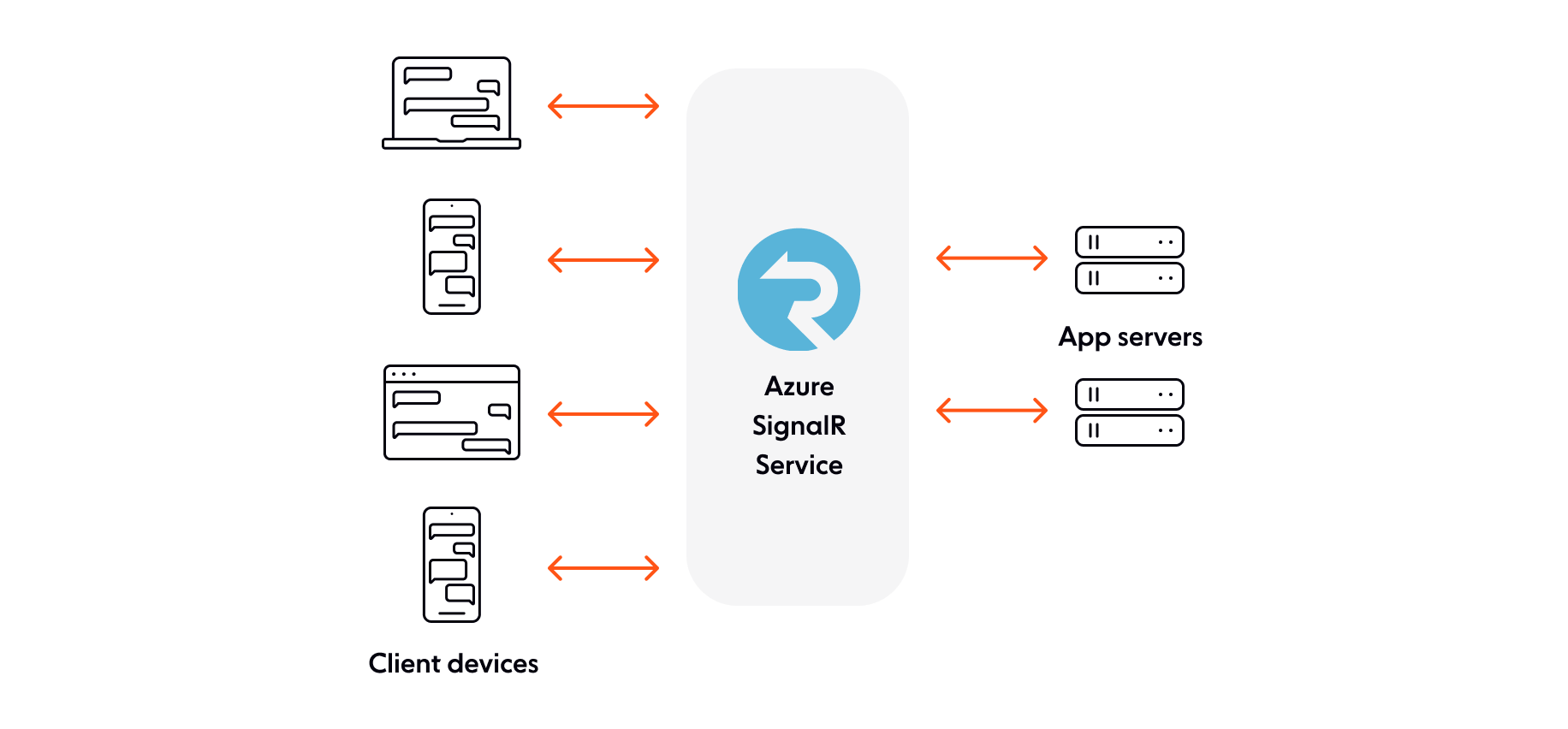

Scaling Azure SignalR Service

If you want to avoid hosting, managing, and scaling SignalR infrastructure yourself, you could use the managed version: Azure SignalR Service. Azure SignalR Service is a proxy for realtime traffic and also acts as a backplane when you scale out to multiple servers. Each time a client initiates a connection to a SignalR server, the client is redirected to connect to the Service instead. Azure SignalR Service then manages all these client connections and automatically distributes them across the server farm, so each app server only has to deal with a limited number of connections.

With Azure SignalR Service, you don’t have to handle problems like scalability and load balancing, and there’s no need to use the Redis backplane. It’s mentioned several times throughout the official documentation that Azure SignalR Service “can scale to millions of client connections”. In addition, it provides benefits such as Azure-grade compliance and security, and it supports multiple global regions for disaster recovery purposes.

Azure SignalR Service limitations

As we’ve seen, Azure SignalR Service removes the burden of having to host and scale SignalR yourself, and claims to be highly scalable. However, building systems at scale is not only a matter of managing infrastructure and handling X concurrent connections. There are other aspects to consider. For example, is the system reliable enough to provide uninterrupted service to users at all times? And does it offer robust messaging QoS guarantees, so that you can build optimal experiences for a large user base? With these in mind, we’ll now look at some of the limitations of Azure SignalR Service.

Single-region design

Azure SignalR Service is a regional service - your service instance is always running in a single region. This single-region design can lead to issues such as:

Increased latency. If you’re building a game or financial services platform, and latency matters to you, then you have a problem if your visitors are not near your servers. If, for example, you have two users playing a realtime game in Australia, yet your SignalR servers are located in Europe, every message published will need to go halfway around the world and back.

It’s hard to ensure resiliency. Azure SignalR Service provides a feature that allows you to automatically switch to other instances when some of them are not available. This makes it possible to ensure resiliency and recover when a disaster occurs (you can even have instances deployed in different regions, and when the primary one fails, you can fail over to the secondary one in a different region). However, you will need to set up the right system topology yourself, and your costs will increase, since you’ll be paying for idle backup instances.

Service uptime

Azure SignalR Service provides a maximum 99.95% uptime guarantee for premium accounts, which amounts to almost 4.5 hours of allowed downtime per year. The situation is worse for non-premium accounts, where the SLA provided is 99.9% (almost 9 hours of annual downtime).
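The downtime figures follow directly from the SLA percentages. Here is the arithmetic as a short Python sketch, assuming a 365-day year; `allowed_downtime_hours` is a helper name chosen for illustration.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def allowed_downtime_hours(uptime_percent):
    """Hours per year a service may be down while still meeting its SLA."""
    return (1 - uptime_percent / 100) * HOURS_PER_YEAR


premium = allowed_downtime_hours(99.95)   # about 4.38 hours per year
standard = allowed_downtime_hours(99.9)   # about 8.76 hours per year
```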

These SLAs might not be reliable enough for certain use cases, where extended downtime simply isn’t acceptable. For example, healthcare apps connecting doctors and patients in realtime, or financial services apps that must be available 24/7.

Message ordering and delivery are not guaranteed

Data integrity (message ordering and guaranteed delivery) is desirable, if not critical, for many use cases. Imagine how frustrating it must be for chat users to receive messages out of order. Or think of the impact an undelivered fraud alert can have.

If the order in which messages are delivered is important to your use case, then SignalR might not be a great fit, because it does not guarantee ordering. You could make SignalR more reliable by adding sequencing information to messages, but that’s something you’d have to implement yourself.
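As a rough idea of what adding sequencing information involves, here is a minimal receiver-side reorder buffer in Python. It is a sketch, not part of SignalR: `OrderedReceiver` is a hypothetical class, and it assumes the sender stamps each message with a consecutive sequence number starting at 0.

```python
class OrderedReceiver:
    """Releases messages in sequence order, buffering any that arrive early."""

    def __init__(self):
        self.next_seq = 0     # sequence number we expect to deliver next
        self.pending = {}     # seq -> payload, held until the gap before it is filled
        self.delivered = []   # payloads handed to the application, in order

    def receive(self, seq, payload):
        if seq < self.next_seq or seq in self.pending:
            return  # duplicate of something already delivered or buffered
        self.pending[seq] = payload
        # Drain every message that is now contiguous with what was delivered.
        while self.next_seq in self.pending:
            self.delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1


receiver = OrderedReceiver()
receiver.receive(1, "world")   # arrives early, so it is buffered
receiver.receive(0, "hello")   # fills the gap; both messages are released
receiver.receive(2, "!")
```

A production version would also need a policy for gaps that never fill (for example, a timeout or a re-request), which this sketch leaves out.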

Going beyond ordering, Azure SignalR Service doesn’t guarantee message delivery; there’s no message acknowledgment mechanism built-in.
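If you need delivery guarantees, you would have to layer acknowledgments on top yourself. The Python sketch below shows the basic idea of retrying until the receiver acknowledges. All names here (`AckingSender`, `make_flaky_transport`) are hypothetical, and the transport is a plain callable that simulates message loss rather than a real network connection.

```python
class AckingSender:
    """Resends a message until the transport reports an acknowledgment."""

    def __init__(self, transport, max_attempts=3):
        # transport: callable(msg_id, payload) -> True if the receiver acknowledged
        self.transport = transport
        self.max_attempts = max_attempts
        self.unacked = {}  # msg_id -> payload still awaiting acknowledgment

    def send(self, msg_id, payload):
        self.unacked[msg_id] = payload
        for attempt in range(1, self.max_attempts + 1):
            if self.transport(msg_id, payload):
                del self.unacked[msg_id]
                return attempt  # number of attempts it took
        return None  # still unacknowledged; the caller decides what to do next


def make_flaky_transport(drops):
    """Simulated transport that silently drops the first `drops` sends."""
    state = {"remaining": drops}
    received = []

    def transport(msg_id, payload):
        if state["remaining"] > 0:
            state["remaining"] -= 1
            return False  # message lost, no acknowledgment comes back
        received.append((msg_id, payload))
        return True

    return transport, received


transport, received = make_flaky_transport(drops=2)
sender = AckingSender(transport)
attempts = sender.send("m1", "fraud alert")  # succeeds on the third attempt
```

On a real network an acknowledgment can be lost even though the message arrived, so retries give at-least-once delivery; the receiver would need to deduplicate by message ID.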

Wrapping it up

We hope this article helps you understand how to scale SignalR, and sheds light on some of the key related challenges. As we have seen, scaling the open-source version, ASP.NET Core SignalR, involves managing a complex infrastructure, with many moving parts. The managed cloud version, Azure SignalR Service, removes the burden of hosting and scaling SignalR yourself, but it comes with its own limitations, which we discussed in the previous sections.

If you’re planning to build and deliver realtime features at scale, it is ultimately up to you to assess whether SignalR is the best choice for your specific use case, or whether a SignalR alternative is a better fit.

About Ably

Ably provides realtime experience infrastructure to power multiplayer, chat, data synchronization, and notifications at internet scale. Our globally-distributed network and hosted APIs (WebSocket-based) help developers engineer realtime features for millions of users.

We offer robust capabilities and guarantees, including 25+ client SDKs targeting every major platform and programming language, feature-rich pub/sub messaging, guaranteed message ordering and delivery, and a 99.999% uptime SLA.

Ably is quite similar to SignalR, in the sense that both allow you to build and deliver live and collaborative experiences for end-users. See how Ably compares to SignalR.

To learn more about what Ably can do for you, sign up for a free account and dive into our documentation.