This blog post tells the story of why and how we migrated from the Node Redis client library to Ioredis.

What is Redis?

Redis is a distributed in-memory database that supports storing both simple and structured values by key. Being in-memory makes it very fast, but also ephemeral: shutting down a Redis server, or the machine it runs on, wipes all stored data. Redis does offer some snapshot features, but it has been designed to be fast, not to be a permanent data store. It is the kind of database where you would store tokens for users connected to your website, but not photos of your beloved cat.

How we use Redis at Ably

At Ably we use Redis for short-term storage of a variety of entities, such as authentication tokens and ephemeral channel state. We also store messages transiently while we are processing them, so an in-memory database is a good fit for us. Redis is a very important tool in our toolbox. Since most of our backend code is written in Node.js, it was natural to use the recommended Node Redis client, and we have relied on it for the last couple of years. It served us well, but we hit some performance issues and decided it was time to look for alternatives.

Starting out with Node Redis

The Node Redis client from https://github.com/NodeRedis/node-redis is both officially recommended by the people behind Redis and one of the oldest clients, which is why we chose it initially.

Our code that interacts with Redis goes through an abstraction layer that adds a few features, such as queue operations, delayed operations, retrying on error and transactions. Some of those features are now provided by the client libraries themselves, but were not available at the time. We also make extensive use of Lua scripts to support complex atomic operations across multiple keys.
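The retry-on-error feature of such an abstraction layer can be sketched as a small wrapper around any promise-returning operation. This is a hypothetical illustration, not our actual code: the name withRetry, the default of three attempts and the linear backoff are all assumptions.

```typescript
// Hypothetical retry-on-error helper; names and defaults are illustrative only.
async function withRetry<T>(
  operation: () => Promise<T>,
  maxAttempts = 3,
  baseDelayMs = 50,
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return await operation();
    } catch (err) {
      lastError = err;
      if (attempt < maxAttempts) {
        // Linear backoff: wait a little longer after each failed attempt.
        await new Promise<void>((resolve) => setTimeout(resolve, baseDelayMs * attempt));
      }
    }
  }
  // Every attempt failed: surface the last error to the caller.
  throw lastError;
}

// Usage: an operation that fails twice with a transient error, then succeeds.
let flakyCalls = 0;
withRetry(async () => {
  flakyCalls++;
  if (flakyCalls < 3) throw new Error("transient failure");
  return "OK";
}).then((result) => console.log(result, flakyCalls)); // OK 3
```

A wrapper like this only makes sense for idempotent operations, or for failures where the command is known not to have reached the server.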

We were profiling one of our server roles that hosts pub-sub channels, to understand why clusters with high message-processing rates were using more CPU than they should have been, and we noticed something unexpected.

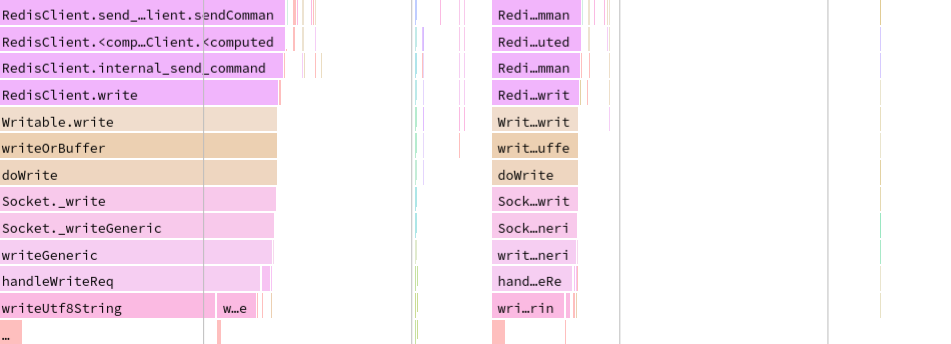

The flame graph extract above shows how much time was spent in each function call.

14% of the server CPU usage (2.7s out of a 20s profile) was spent on writing to the socket as part of sending a command to Redis. This seemed a little on the excessive side. We found out that if even one of the arguments to the Redis command is a buffer, Node Redis performs three separate socket writes per argument every time, plus one for the command string. For a script with 10 arguments, this would be 31 socket writes.
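To make the arithmetic concrete, here is a simplified model of that write pattern using Redis serialization protocol (RESP) framing: one write for the command header, then, for each argument, a length prefix, the raw argument bytes and a trailing CRLF. It is an assumption-based sketch rather than the node_redis source, and it returns the list of chunks instead of writing to a real socket.

```typescript
// Simplified model (an assumption, not node_redis source) of the write pattern
// described above when at least one argument is a Buffer.
type Chunk = string | Buffer;

function perArgumentWrites(command: string, args: Buffer[]): Chunk[] {
  const writes: Chunk[] = [];
  // One write for the RESP array header plus the command name.
  writes.push(`*${args.length + 1}\r\n$${Buffer.byteLength(command)}\r\n${command}\r\n`);
  for (const arg of args) {
    writes.push(`$${arg.length}\r\n`); // length prefix
    writes.push(arg); // raw argument bytes, written without copying
    writes.push("\r\n"); // terminator
  }
  return writes;
}

// A script call with 10 Buffer arguments: 1 + 3 * 10 writes.
const tenArgs = Array.from({ length: 10 }, (_, i) => Buffer.from(`arg${i}`));
console.log(perArgumentWrites("EVALSHA", tenArgs).length); // 31
```

Each of those chunks becomes a separate call into the socket layer, which is where the per-write overhead seen in the flame graph accumulates.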

The motivation for this design choice was sound: avoiding the data copies that assembling a single buffer would require. However, at least for our use case (scripts with large numbers of relatively small arguments), copying the data into a single buffer would be a big improvement, because the cost of handling so many small writes separately, at every lower layer of the stack, was too high.
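For comparison with the per-argument writes described above, here is a minimal sketch of the single-buffer approach: the whole command is encoded into one Buffer, so it can be handed to the socket in a single write, at the cost of copying every argument once. The function name encodeCommand is hypothetical, and this is not the Ioredis implementation.

```typescript
// Minimal sketch (not the Ioredis source) of encoding a whole command into one
// Buffer so that it can be sent with a single socket write.
function encodeCommand(command: string, args: Array<string | Buffer>): Buffer {
  const parts: Buffer[] = [
    Buffer.from(`*${args.length + 1}\r\n$${Buffer.byteLength(command)}\r\n${command}\r\n`),
  ];
  for (const arg of args) {
    const bytes = typeof arg === "string" ? Buffer.from(arg) : arg;
    parts.push(Buffer.from(`$${bytes.length}\r\n`), bytes, Buffer.from("\r\n"));
  }
  // Buffer.concat copies every part into one contiguous allocation: this copy
  // is the price paid for replacing many small writes with a single one.
  return Buffer.concat(parts);
}

const encoded = encodeCommand("SET", ["greeting", Buffer.from("hello")]);
console.log(JSON.stringify(encoded.toString()));
// prints "*3\r\n$3\r\nSET\r\n$8\r\ngreeting\r\n$5\r\nhello\r\n"
```

A caller would then pass the result to socket.write once, instead of issuing one write per chunk. For small arguments the extra copy is cheap compared with the per-write overhead it removes.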

This analysis motivated us to look for alternatives. We generally try to fix issues within our open source dependencies, but we first looked around to see if there was an existing alternative Redis client that had designs that were better suited to our use-case. The only two other recommended clients were Ioredis and Tedis. Tedis did not seem to be maintained (having no commits since 2018), so we decided to invest some time evaluating Ioredis.

Investigating Ioredis

Ioredis describes itself as a “robust, full-featured Redis client that is used in the world's biggest online commerce company Alibaba and many other awesome companies”. According to npmcompare, it has been around since 2015, making it five years younger than Node Redis, but it appeared to be more actively maintained.

Looking at its source code, we saw that it prepares the command to send in a single buffer and performs only one socket write. This looked promising compared to what Node Redis does, so we ran a very quick test of Ioredis’s socket writes. Note that this test is only valid because, in both clients, the work we are trying to profile is performed synchronously within send_command. The results are as follows:

Using only strings

    $ node redis_bench.js
    ready
    nrclient 1475
    ioclient 1769

Using mostly strings and one buffer

    $ node redis_bench.js
    ready
    nrclient 15368
    ioclient 2733

The strings-only test was our control. We expected Node Redis to be quick here, since it performs only one socket write in that case, and it was in fact slightly faster than Ioredis. With mostly strings and one buffer, however, Node Redis took more than five times as long as Ioredis. This difference motivated us to perform an experimental migration and more in-depth load testing.

Migrating to Ioredis

We usually prefer to implement new features iteratively rather than all at once. This allows us to debug issues as they arise, and debugging is easier with smaller chunks of code per test. For this migration from Node Redis to Ioredis, however, that approach was hard to follow, because a few of our abstractions made it difficult to run both Redis clients at the same time.

We started by replacing or renaming all functions and parameters, following the handy Ioredis guide on migrating from node_redis. This first step was simple, as the Ioredis API is quite close to the Node Redis one. Translating transaction calls was more challenging, because we were using a class that inherited from Node Redis’s Multi class, whereas Ioredis only provides multi(), which is a function. The rest of the migration was relatively simple, and included removing features that had become redundant, such as our operation queue and retry mechanism.
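One way to translate a class that subclassed Multi is to switch from inheritance to composition and wrap the object returned by multi(). The sketch below assumes a hypothetical minimal TransactionLike interface rather than the real Ioredis types, and the extra behaviour it adds (recording which commands were queued) is purely illustrative.

```typescript
// Hypothetical minimal shape of the object returned by a client's multi().
// The real Ioredis type is richer; this interface is an assumption.
interface TransactionLike {
  set(key: string, value: string): unknown;
  exec(): Promise<unknown>;
}

// Composition instead of inheritance: wrap whatever multi() returns and add
// our own behaviour on top (here, recording which commands were queued).
class TrackedTransaction {
  private readonly tx: TransactionLike;
  private readonly queued: string[] = [];

  constructor(tx: TransactionLike) {
    this.tx = tx;
  }

  set(key: string, value: string): this {
    this.queued.push(`SET ${key}`);
    this.tx.set(key, value); // delegate to the wrapped transaction
    return this;
  }

  queuedCommands(): readonly string[] {
    return this.queued;
  }

  exec(): Promise<unknown> {
    return this.tx.exec();
  }
}
```

With a real client, it would be constructed as new TrackedTransaction(redis.multi()), where redis is assumed to be a connected client instance. The wrapper only depends on the small interface it uses, so it does not need to know which concrete class multi() returns.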

Our internal continuous integration (CI) helped us find a few issues. First, there are a few places where the APIs of Ioredis and Node Redis differ. The "compatible" version of the API automatically coerces all buffer results to strings, which we didn't want. This caused some hard-to-find bugs and required us to switch to APIs that don't perform this conversion. Second, there were bugs in the implementation of script evaluation. The issue had already been found and a PR submitted, but it had not yet been merged.
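The buffer-to-string coercion is risky because binary values are not necessarily valid UTF-8. The following self-contained sketch, independent of any Redis client, shows that decoding such bytes to a string and re-encoding them does not restore the original value, which is exactly the kind of silent corruption that is hard to track down. Ioredis also offers Buffer-returning variants of commands, such as getBuffer, that avoid the conversion.

```typescript
// Three bytes that do not form valid UTF-8 (0xff and a lone 0x80).
const original = Buffer.from([0xff, 0x00, 0x80]);

// What an API that coerces replies to strings would hand back.
const asString = original.toString("utf8");

// Re-encoding the string does not recover the original bytes: each invalid
// byte was replaced by U+FFFD (encoded as ef bf bd) during decoding.
const roundTripped = Buffer.from(asString, "utf8");

console.log(original.equals(roundTripped)); // false
console.log(roundTripped.toString("hex")); // efbfbd00efbfbd
```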

Once our TypeScript code compiled successfully and local tests were passing, it was time to run some load tests in our dedicated environment.

Load testing Ioredis

At Ably, we usually test locally first, on the developer’s computer. We maintain an extensive set of tests that can be run locally, so engineers can test their code quickly while working on something instead of waiting for it to pass through CI. Once everything passes locally, we push the code to a new Git branch and let CI run its tests.

Once that passes, we run the code in our testing environment: we simulate traffic following a pattern that depends on the feature being tested, then measure CPU and memory usage (amongst other metrics) to evaluate the impact of the change.

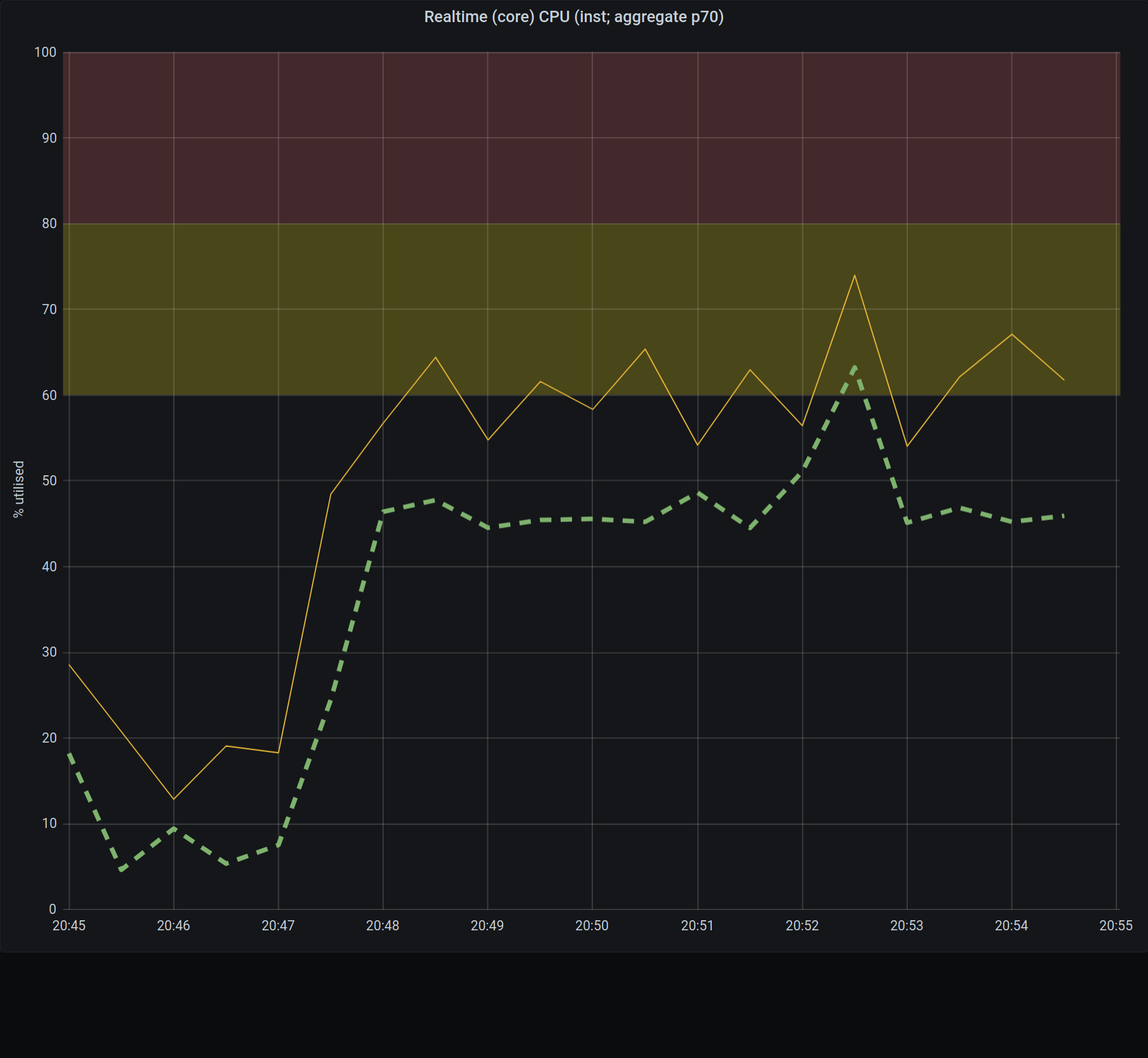

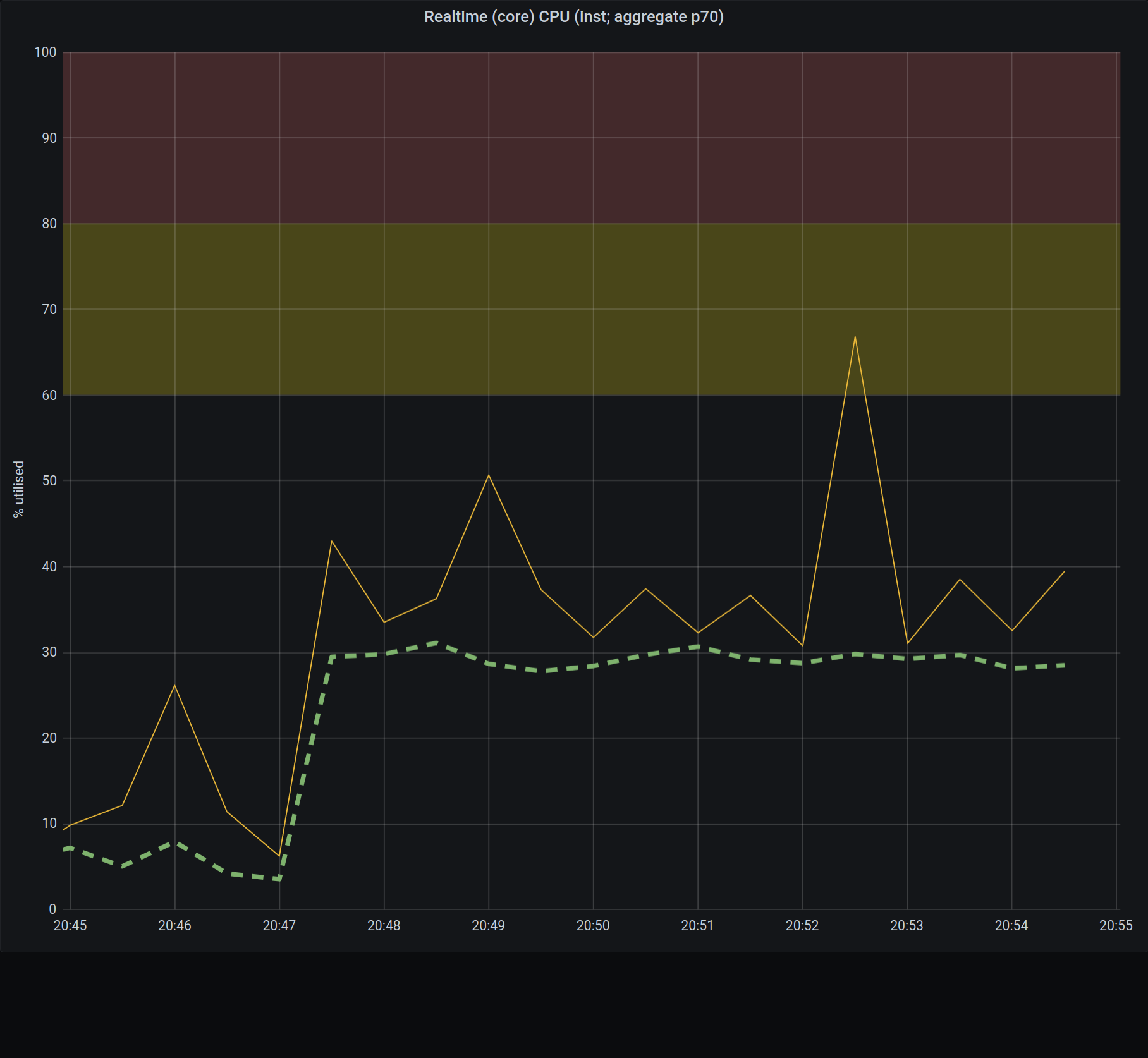

For this test, we simulated 128 requests per second from 16 clients, with each message containing 100 bytes of payload. The graphs above show CPU usage on one of our instances before and after the migration. The initial increase is due to the test starting, after which CPU usage becomes stable at around 65%.

The two graphs clearly show a 30% reduction in CPU usage for the same message flow.

The results of this load test showed us that the migration should significantly reduce CPU usage, and production statistics have since confirmed this.

Final thoughts

The original Node Redis authors had tried to do the right thing when handling buffers (i.e. avoiding data copies), but this in fact led to situations where performance was considerably worse. That trade-off is a poor fit for us, because we call Redis scripts that have many small arguments and therefore trigger many socket writes.

We performed some standalone testing of Node Redis vs Ioredis, and Ioredis showed a marked performance improvement, chiefly because it copies all arguments into a single buffer before sending the command. This means fewer socket writes at the cost of more data copying, which is fortunately of no consequence to us, since our arguments never reach a size where copying becomes a concern. These improvements also carried over to production under a full workload.

We hit some snags during the migration, but this is (regrettably) to be expected. Once we migrated the code, we performed some real load tests of CPU usage. The results were encouraging.

As with Node Redis, we noticed that most dependencies are still live code under active development, and their maturity is not uniform: some features are widely adopted and well tested, while others are not. This is workable provided:

- You anticipate those issues and make sure you evaluate and test the specific features or configurations you intend to use; and

- Adopters contribute back to the community to help mature and maintain the software.

As such, while Node Redis and its underlying architectural choices can be excellent for specific use cases, those use cases aren’t ours, and Ioredis fits the bill much better.


About Ably

Ably is a fully managed Platform as a Service (PaaS) that offers fast, efficient message exchange and delivery, and state synchronization. We solve hard engineering problems in the realtime sphere every day and revel in it. If you are running into problems trying to massively, predictably, securely scale your realtime messaging system, get in touch, and we will help you deliver seamless realtime experiences to your customers.