Fan expectations pose an existential threat to fan-centric businesses. And that's because what it means to be a fan has changed. Fandom used to be watching the Monday night game on TV or getting tickets for a concert when your favorite artist rolled through town.

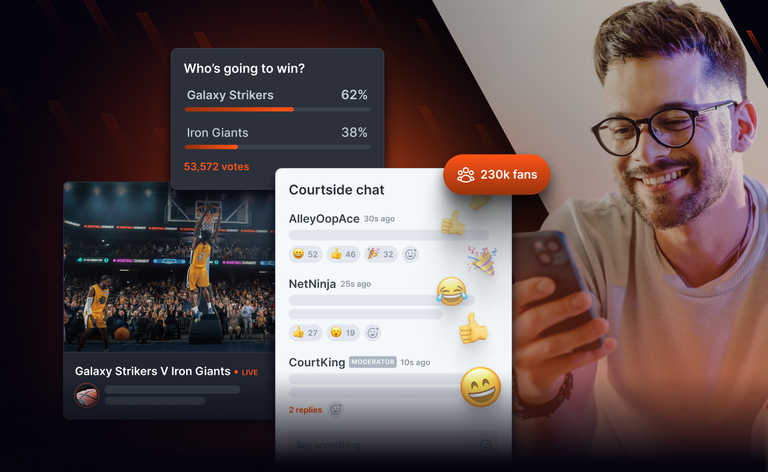

But social platforms have made us all into content creators. And now fans want to play an active role. Increasingly, they expect ongoing interactive experiences that connect them with the creators, sports teams, and artists they love - and in some cases with millions of other fans. This has created an enormous opportunity for fan-centric organizations to open new revenue streams and strengthen their relationships with their audiences. As a result, we are witnessing a period of accelerated change and innovation in the experiences that fan-centric brands deliver.

However, companies face technological challenges that risk making these new experiences economically infeasible. In particular, the wrong architectural choices can mean that the cost of serving each new fan rises with every fan who joins, so total costs grow with the square of the audience. That makes the most successful events the least commercially viable.

Fortunately, with the right design decisions, fan-centric organizations can build fan experiences that succeed at scale. Here, I'm going to share what we've learned from helping broadcasters, artists, and sports organizations the world over make realtime fan experiences economically viable.

The quadratic scaling problem

So, what is it about modern fan engagement that makes costs grow so much faster than the audience? The core challenge comes from user-generated content (e.g. chats, comments, and reactions) and, in particular, the need to deliver potentially huge volumes of data to each and every fan. Let's put it in context to understand why.

Traditional broadcasting operates under a fixed cost model, meaning the expenses for infrastructure—ranging from satellite trucks to broadcast towers—stay the same regardless of audience size. Whether a football game is viewed by ten people or ten million, these initial costs remain unchanged. Once the broadcast signal is transmitted, it can be accessed by anyone within the service area. Therefore, while the up-front investment is considerable, there are no additional costs as the viewership within the geographic region increases.

In contrast, streaming economics are variable and scale with audience size. Each new viewer adds incrementally to the total cost, as streaming requires additional bandwidth and server capacity per viewer. But as the number of viewers increases, economies of scale kick in. Content delivery networks (CDNs), media servers placed within ISP networks, and bulk bandwidth purchasing reduce the cost per viewer as the audience grows. That's what made it economically viable for Indian over-the-top (OTT) streaming service Disney+ Hotstar to serve a record-breaking 59 million concurrent viewers during the 2023 Cricket World Cup.

As fans now engage with potentially millions of other fans, the data requirements necessitate a complete rethink of the economics.

Both broadcasting and streaming are one-way, whether you're paying for geographic reach or per minute streamed. But interactive fan experiences turn everyone into a data generator. When each of N fans can send messages that must reach the other N − 1 fans, the number of message deliveries grows with N × (N − 1), roughly the square of the audience. This is the N-Squared problem.

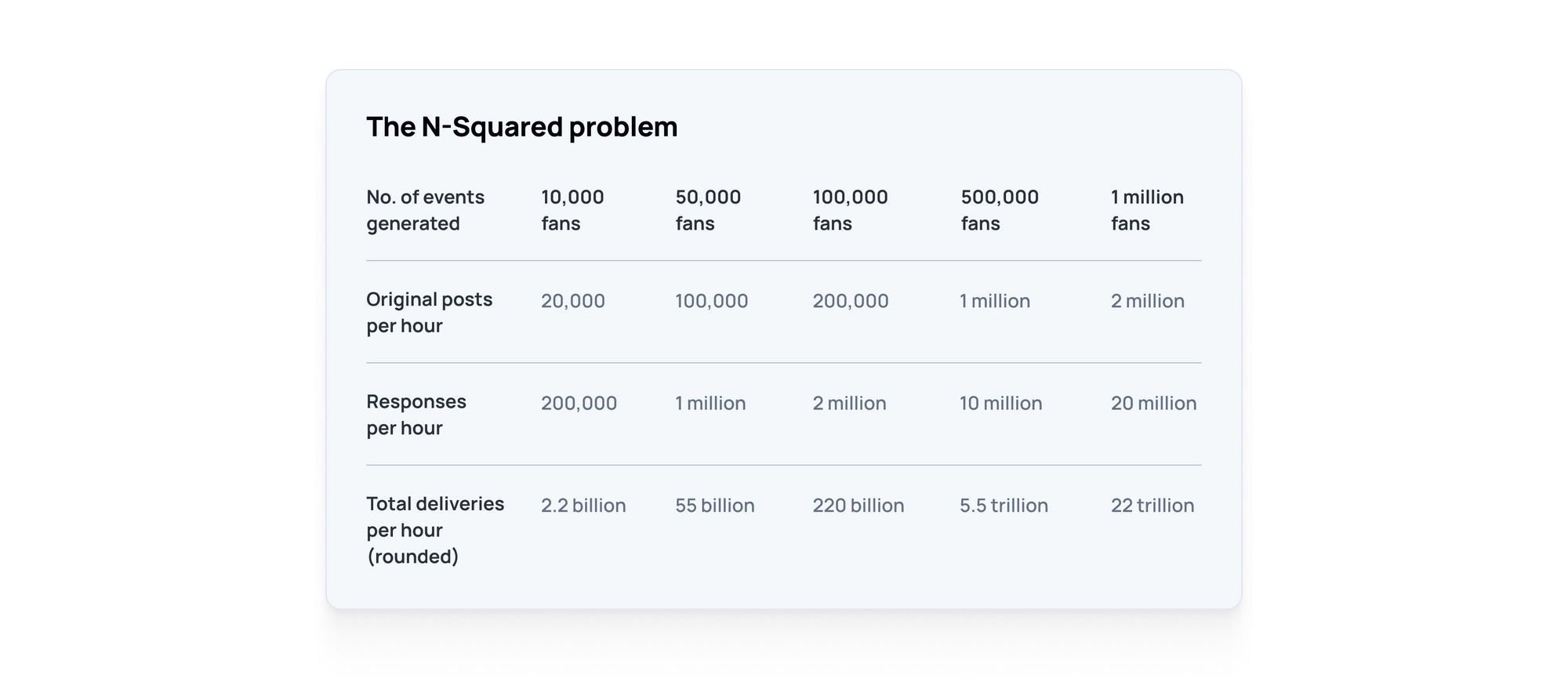

The N-Squared problem in numbers

Unlike video streaming, where the cost per viewer falls as the audience grows, the cost of message delivery in a many-to-many environment grows quadratically: doubling the audience roughly quadruples the deliveries. Instead of economies of scale, success means higher costs.

Let's use the example of an interactive fan experience during a tennis match. Alongside the livestream, a chat-like feature allows fans to post messages and reactions. We have 10,000 fans watching the livestream and participating in the interactive experience.

If one fan posts a message predicting the outcome of the match, it must be delivered more or less instantly to the other 9,999 viewers. Things become complex when the other fans start responding. Even if just 1% of the other fans—100 people—react with emojis or replies, each of those 100 responses must be sent to all 9,999 other fans. This results in nearly a million message deliveries, all stemming from a single fan’s message and a small number of responses.

But, of course, we're not just talking about one fan posting once. Let's up the ante. Say we have 100,000 fans, the average fan posts two original messages each hour, and each post generates ten responses. That's 200,000 posts and 2 million responses every hour. Delivering every one of those 2.2 million messages to the other 99,999 fans adds up to roughly 220 billion message deliveries per hour!
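To see why these numbers grow so quickly, here is a back-of-the-envelope sketch in Python. It assumes naive fan-out, where every post and every reply is delivered to every other fan with no batching, aggregation, or partitioning; the function name `hourly_deliveries` and its parameters are illustrative only.

```python
def hourly_deliveries(fans: int, posts_per_fan: int, replies_per_post: int) -> int:
    """Naive many-to-many fan-out: every message goes to every other fan."""
    posts = fans * posts_per_fan
    replies = posts * replies_per_post
    messages = posts + replies
    # Each message is delivered to everyone except its sender.
    return messages * (fans - 1)


for fans in (10_000, 100_000, 1_000_000):
    print(f"{fans:>9,} fans -> {hourly_deliveries(fans, 2, 10):,} deliveries/hour")
```

With two posts per fan and ten replies per post, 100,000 fans works out to 219,997,800,000 deliveries an hour, and each tenfold increase in audience multiplies deliveries by roughly a hundred.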

This leads us to an almost existential question for realtime fan experiences: is it possible to deliver them to growing numbers of fans in an economically viable way?

Serving fans and the bottom line

Delivering fan engagement experiences isn't optional, so we need to find a way to make it work financially.

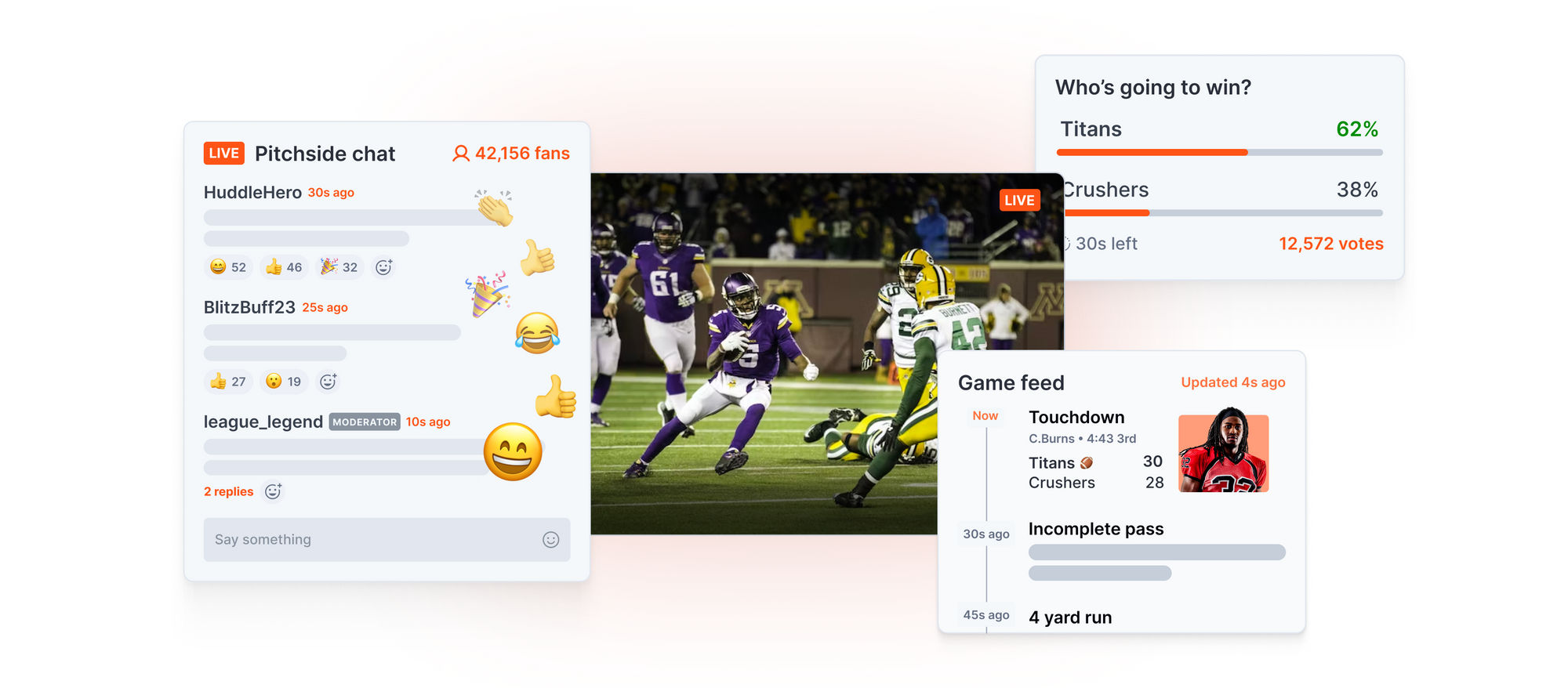

Live events like the Super Bowl or the Eurovision Song Contest draw massive international audiences. Many of those people feel that their experience is incomplete if they're just passive viewers. More and more, people's enjoyment of an event is tied up in their ability to interact with other fans in realtime.

So, how do you overcome the N-Squared problem in order to arrive at a predictable cost per user per hour?

The answer lies in the architectural decisions that feed into your fan engagement platform. The key challenge to understand is that user-generated content causes delivery costs to grow quadratically as more people join the experience. Knowing this, we can proactively design our systems so that costs scale linearly with the number of fans instead.

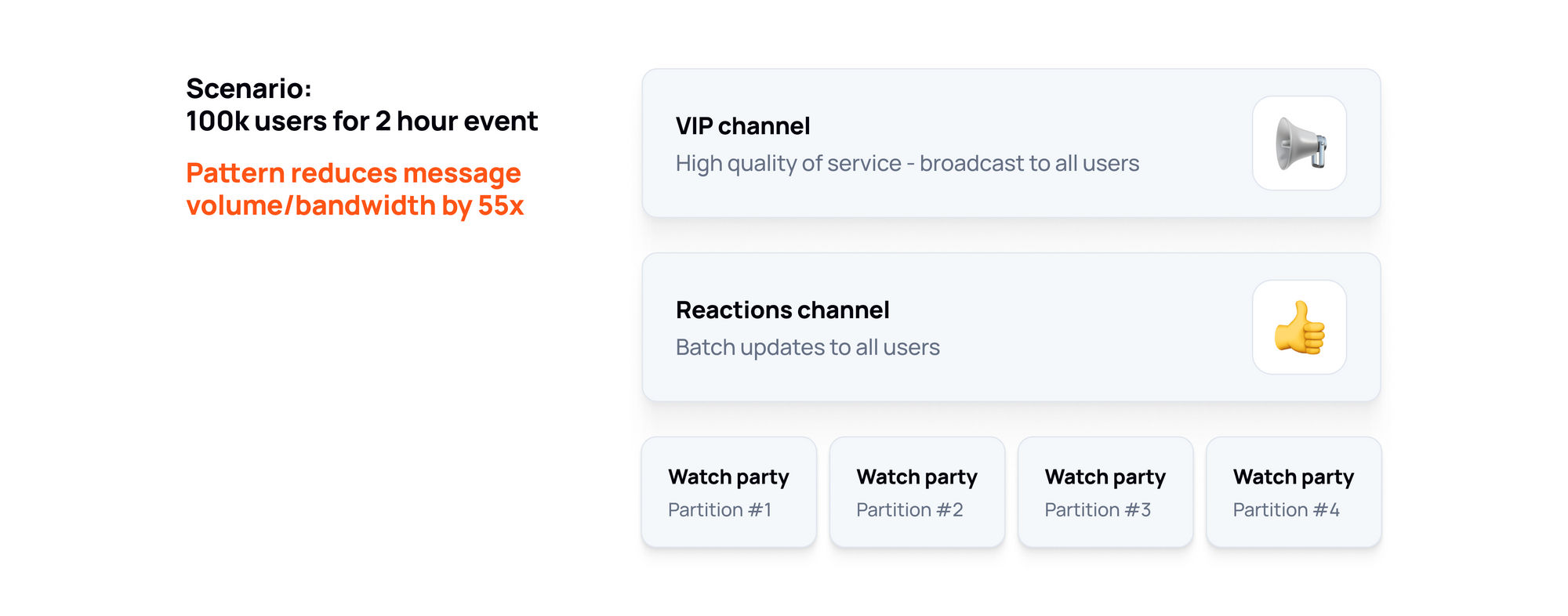

In particular, three architectural patterns hold the answer:

- Batching: This is where you deliver groups of messages together rather than sending each message individually.

- Aggregating: With aggregation, you compute what has changed and send only the results rather than the individual updates, reducing the overall amount of data transferred.

- Partitioning: An approach based on segmenting the fanbase into sub-groups, so that messages are delivered to a predictable number of fans rather than to every fan every time.

All three approaches break the link between the number of fans engaged and the number of messages we must deliver. Let's look at them in more detail.

Batching: balance immediacy with efficiency

The N-Squared problem arises when we attempt to deliver messages and other interactions among a growing number of fans. In part, that rests on the assumption that every message must arrive as quickly as possible. But that isn't true. Here's why:

- Not all messages are created equal: A goal notification needs to be delivered as quickly as possible, but whether reaction emojis appear instantly or half a second later likely won't affect anyone's experience.

- Human perception threshold: There's a natural limit to how fast we can perceive information. Especially when fan attention is directed elsewhere, it's unlikely that a fan would notice the difference between a single message delivered in 200 milliseconds (ms) and a slightly longer wait that lets two or three messages be delivered together.

By batching messages together, we can significantly reduce the number of messages the system needs to send. This introduces slight delays to message delivery but, as mentioned above, it's unlikely to degrade the fan experience.

There are several strategies for batching, each coming with its own pros and cons. Which you choose is less a technical decision and more about what type of experience you want to offer. In practice, you might combine them to help you balance fan experience with the cost to deliver the service.

Time-based batching

One way to get a predictable cost per user is to send messages at set intervals. Reactions in particular tend to cluster in a narrow window. Let's say there's a big event in the livestream, such as a sports team scoring a goal or a musical artist playing a fan-favorite song. Fans react at around the same time, so many of these events fire in very close succession.

Rather than send each reaction individually, we can queue them and send them in batches every 500ms or whatever time interval is appropriate. That will introduce a little latency but that's the trade-off for achieving a predictable cost per user.
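A minimal sketch of time-based batching in Python, assuming a hypothetical `send` callback that delivers a list of messages in one go. Timestamps are passed in explicitly so the example is deterministic; in a real system a timer would call `tick()` every interval. The 500ms interval mirrors the example above and is an assumption, not a recommendation.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class TimeBatcher:
    """Queue messages and flush them as a single batch at most once per interval."""

    interval_ms: int
    send: Callable[[List[str]], None]  # hypothetical delivery callback
    _queue: List[str] = field(default_factory=list, init=False)
    _last_flush_ms: int = field(default=0, init=False)

    def add(self, message: str, now_ms: int) -> None:
        self._queue.append(message)
        self.tick(now_ms)

    def tick(self, now_ms: int) -> None:
        # Do nothing until a full interval has passed since the last flush.
        if now_ms - self._last_flush_ms < self.interval_ms:
            return
        if self._queue:
            self.send(self._queue)
            self._queue = []
        self._last_flush_ms = now_ms


# Four reactions arriving within about half a second become one delivery.
sent: List[List[str]] = []
batcher = TimeBatcher(interval_ms=500, send=sent.append)
for t, reaction in [(0, "clap"), (120, "thumbs-up"), (260, "clap"), (510, "party")]:
    batcher.add(reaction, now_ms=t)
print(sent)  # [['clap', 'thumbs-up', 'clap', 'party']]
```

The trade-off is visible here: the first reaction waited about half a second, but the fan received one message instead of four.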

Priority-based batching

One way to address the latency that comes with time-based batching is by organizing messages according to their importance. This method ensures that critical updates, like a goal scored in a sports match or an important announcement during a live concert, are delivered immediately.

High-priority messages bypass the regular queue through a system of tiered batching, where essential messages are sent on a more frequent schedule than those of lower priority.
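The tiered approach can be sketched by giving each priority tier its own flush interval. This is an illustrative Python sketch, not a production design: the tier names `critical` and `reactions`, their intervals, and the `send` callback are assumptions, and an interval of 0 means a tier is delivered immediately, effectively bypassing the batch.

```python
from typing import Callable, Dict, List, Tuple


class PriorityBatcher:
    """Tiered batching: each priority tier is flushed on its own schedule."""

    def __init__(self, intervals_ms: Dict[str, int],
                 send: Callable[[str, List[str]], None]) -> None:
        self.intervals_ms = intervals_ms
        self.send = send  # hypothetical delivery callback: (tier, batch)
        self.queues: Dict[str, List[str]] = {tier: [] for tier in intervals_ms}
        self.last_flush_ms: Dict[str, int] = {tier: 0 for tier in intervals_ms}

    def add(self, tier: str, message: str, now_ms: int) -> None:
        self.queues[tier].append(message)
        self.tick(now_ms)

    def tick(self, now_ms: int) -> None:
        for tier, interval in self.intervals_ms.items():
            # An interval of 0 is always due, so that tier is sent immediately.
            if now_ms - self.last_flush_ms[tier] < interval:
                continue
            if self.queues[tier]:
                self.send(tier, self.queues[tier])
                self.queues[tier] = []
            self.last_flush_ms[tier] = now_ms


sent: List[Tuple[str, List[str]]] = []
batcher = PriorityBatcher({"critical": 0, "reactions": 500},
                          send=lambda tier, batch: sent.append((tier, batch)))
batcher.add("reactions", "clap", now_ms=100)
batcher.add("critical", "GOAL!", now_ms=150)  # goes out straight away
batcher.add("reactions", "thumbs-up", now_ms=300)
batcher.tick(now_ms=600)  # the reactions tier is now due
print(sent)  # [('critical', ['GOAL!']), ('reactions', ['clap', 'thumbs-up'])]
```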

Quota-based batching

Another way to address the latency introduced by time-based batching is to send messages once a certain number is in the queue. In quota-based batching, messages are accumulated until they reach a predefined limit, at which point the batch is sent.

During quieter periods, this method can introduce more delay, though, because messages must wait until the quota is filled before being sent. Another potential issue is that, in busier times, quota-based batching might make it harder to predict costs per user. As audience interaction spikes, the predefined quota is reached more quickly, resulting in more frequent batch dispatches.
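A quota-based batcher is even simpler: it flushes whenever the queue reaches a fixed size. In this hypothetical Python sketch, `quota` and the `send` callback are assumptions; note how the final message is left waiting, which is the quiet-period delay described above.

```python
from typing import Callable, List


class QuotaBatcher:
    """Accumulate messages and send them as one batch once `quota` are queued."""

    def __init__(self, quota: int, send: Callable[[List[str]], None]) -> None:
        if quota < 1:
            raise ValueError("quota must be at least 1")
        self.quota = quota
        self.send = send  # hypothetical delivery callback
        self.queue: List[str] = []

    def add(self, message: str) -> None:
        self.queue.append(message)
        if len(self.queue) >= self.quota:
            self.send(self.queue)
            self.queue = []


sent: List[List[str]] = []
batcher = QuotaBatcher(quota=3, send=sent.append)
for i in range(7):
    batcher.add(f"m{i}")
print(sent)           # [['m0', 'm1', 'm2'], ['m3', 'm4', 'm5']]
print(batcher.queue)  # ['m6'], still waiting for the quota to fill
```

One way to cap that delay would be to combine this with time-based batching, flushing whenever either limit is reached first.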

Aggregating messages

Batching works because it reduces the number of messages the platform needs to send. But it can’t solve the N-Squared problem entirely. That's because it doesn't affect the overall size of the data the system needs to process.

Here's why:

- Message sizes become unwieldy: As the number of people engaged in an experience grows, there comes a point where we start to hit the practical limits of how much bandwidth a single device can reasonably consume.

- Bandwidth isn't free: Although we're lowering resource usage by reducing the number of messages sent, the system's bandwidth usage still increases as more people join. So, at larger scales the N-Squared problem presents itself in a different way.

What we need is a way to reduce the overall amount of data transferred. Instead of just batching messages together, we can adopt a more strategic approach known as aggregation.

With aggregation, we compute what has changed and send only the results rather than the individual updates.

Let's go back to our tennis match example. Imagine we have four fans, one of whom sends a clapping emoji, while the other three send a thumbs-up emoji. Instead of emitting four emojis, we can send the thumbs-up emoji with a count of three, and the clapping emoji with a count of one. Even at this small scale, aggregation halves the volume of data we need to send.

But it becomes even more effective as fan numbers grow. Let's say we now have 16 fans taking part. Seven send a thumbs-up and nine send a clapping emoji. Despite the increase in fans, we still need to send just two messages: clapping: 9 and thumbs-up: 7. That's two messages instead of 16, an 87.5% reduction.
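The emoji example above maps neatly onto Python's `collections.Counter`. This sketch assumes reactions arrive as plain strings within a single batching window; `aggregate_reactions` is a hypothetical helper, and a real system would also need to merge counts across windows.

```python
from collections import Counter
from typing import Dict, Iterable


def aggregate_reactions(reactions: Iterable[str]) -> Dict[str, int]:
    """Collapse individual reactions into one count per reaction type."""
    return dict(Counter(reactions))


window = ["thumbs-up"] * 7 + ["clapping"] * 9
summary = aggregate_reactions(window)
print(summary)  # {'thumbs-up': 7, 'clapping': 9}

reduction = 1 - len(summary) / len(window)
print(f"{len(window)} reactions -> {len(summary)} messages ({reduction:.1%} fewer)")
```

However many fans react, the summary only grows with the number of distinct reaction types.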

Computing changes in this way and then batching them together effectively eliminates the N-Squared problem. The size of each aggregated update depends on the number of distinct reaction types rather than the number of fans, giving us a roughly fixed cost per user regardless of audience size. This makes the cost of delivering realtime fan experiences more predictable and more economically viable.

Partitioning

Partitioning is another strategy for addressing the N-Squared problem. The idea is to divide fans into separate groups or rooms so that we reduce the overall number of messages the system needs to deliver. Ideally, partitioning should be seamless, meaning individual fans enjoy the experience without being aware of the segmentation.

To explore how partitioning works, let's imagine an event with 1 million people taking part in the fan engagement. When one person posts a chat message, that results in 999,999 deliveries. If just a fraction of the other participants respond, say 1,000 people, those 1,000 responses must each be sent to the other 999,999 participants. This results in nearly 1 billion message deliveries (1,000 responses × 999,999 recipients).

However, if we split the fanbase into separate groups then we can enforce an upper limit on how many messages we need to send. Instead of a single 1 million-person experience, we could run ten groups of 100,000 each. From a fan's perspective, the core interaction – sharing excitement – remains the same.

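But from a cost perspective, the number of message deliveries drops dramatically. If those 1,000 responses are spread evenly across ten partitions, each one reaches 99,999 fans instead of 999,999, cutting total deliveries roughly tenfold to about 100 million. Because partition size stays fixed as more fans join, the cost per user stays the same. This contrasts with unpartitioned audiences, where the cost per user increases with audience size.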
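A minimal Python sketch of the partitioning arithmetic, assuming the 1,000 responses are spread evenly across ten partitions of 100,000 fans. The helper names are hypothetical. `partition_for` uses a SHA-256 hash rather than Python's built-in `hash()`, because string hashing in CPython is randomized per process and would reassign fans whenever a server restarts.

```python
import hashlib


def partition_for(fan_id: str, num_partitions: int) -> int:
    """Stable assignment: the same fan always lands in the same partition."""
    digest = hashlib.sha256(fan_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def deliveries(messages: int, audience: int) -> int:
    """Each message goes to everyone else in the same audience (or partition)."""
    return messages * (audience - 1)


# 1,000 responses in one 1-million-fan experience...
unpartitioned = deliveries(1_000, 1_000_000)
# ...versus the same responses spread evenly across ten partitions of 100,000.
partitioned = 10 * deliveries(1_000 // 10, 100_000)

print(f"{unpartitioned:,} vs {partitioned:,}")  # 999,999,000 vs 99,999,000
print(partition_for("fan-42", 10))  # always the same partition for this fan
```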

Smaller partitions would have an even greater impact. The lower pace of messages in a smaller partition probably offers a better fan experience since the number of messages they receive becomes more manageable. But, of course, this comes with trade-offs, too. While a fan probably doesn't care if they see 1,000 or 10,000 cheering emojis, they might notice the absence of a friend or a popular community figure (VIP).

Both problems can be addressed. For VIPs, their messages could be broadcast across all partitions, maintaining their visibility and influence across the entire fanbase. For applications where having friends together is key to the experience, functionalities such as “watch parties” can be introduced so that friends can partition themselves, instead of being partitioned by the app.
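Both fixes amount to small changes in how fans are assigned and how messages are routed. In this hypothetical Python sketch, a watch party is modelled as a shared `party_id` that is hashed instead of the fan's own ID, so friends land in the same partition, and messages from anyone in a `vips` set are fanned out to every partition. The names, the set-based VIP list, and the fixed partition count are all assumptions for illustration.

```python
import hashlib
from typing import Optional, Set

NUM_PARTITIONS = 10  # assumed fixed partition count


def _bucket(key: str, num_partitions: int) -> int:
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def assign_partition(fan_id: str, party_id: Optional[str] = None,
                     num_partitions: int = NUM_PARTITIONS) -> int:
    """Watch parties: fans who share a party_id land in the same partition."""
    return _bucket(party_id if party_id is not None else fan_id, num_partitions)


def route(sender_id: str, sender_partition: int, vips: Set[str],
          num_partitions: int = NUM_PARTITIONS) -> Set[int]:
    """Return the partitions that should receive a message from sender_id."""
    if sender_id in vips:
        return set(range(num_partitions))  # VIP messages are broadcast everywhere
    return {sender_partition}  # everyone else stays within their own partition


vips = {"star-player"}
alice = assign_partition("alice", party_id="party-7")
bob = assign_partition("bob", party_id="party-7")
print(alice == bob)                             # True
print(route("alice", alice, vips) == {alice})   # True
print(len(route("star-player", 0, vips)))       # 10
```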

Solving the realtime fan engagement problem at Ably

At Ably, we've worked with sports franchises, creators, entertainers, and broadcasters globally - including NASCAR, Genius Sports, Tennis Australia, and SRF - to help deliver reliable, scalable, low-latency fan experiences that provide a healthy return on investment. The infrastructure that we provide already makes our platform well suited to the fan engagement experiences of these customers. But we’ve been doing a lot of thinking about what more we can do to overcome the unique challenges of delivering fan engagement experiences in an economically viable way, including how to solve the N-Squared problem.

So, we have been actively revising our pricing model and building new features based on some of the architectural patterns mentioned above. As a result, we will be introducing:

- Message batching: This feature is designed to optimize costs and enhance performance in large-scale, high-throughput applications.

- LiveObjects: This feature moves the aggregation problem down into the platform and API layer of our stack. It allows you to compute what’s changed and emit only the computed change, rather than a batch of every individual change.

- Partitioning: We are at an early stage of developing a partitioning solution and are actively talking to customers about how best to design it. Please reach out to find out more; we’d welcome further insights.

- Consumption-based pricing that’s billed by the minute: Our pricing model gives you granular control over your costs. You only pay for what you use (messages sent, channels active, and connections made), so you can budget effectively and avoid surprise charges. This makes it well suited to limiting the cost of peaky, high-volume traffic surges.

Fundamentally, we want to make sure your success isn’t costly. If you are interested in seeing how you could benefit from working with Ably, get in touch and book a session with one of our sales engineers, or try our platform for free by signing up for an account today.