Interest in online banking is skyrocketing. In this context, more and more banking providers are building digital products (especially mobile offerings) and improving their core capabilities to meet user expectations of the instantaneous, always-on, realtime world.

In this blog post, we will look at Kafka’s characteristics and explore why it’s such a popular choice for architecting event-driven realtime banking ecosystems. We will then see how Ably complements and extends Kafka to end-user devices, enabling you to create dependable realtime banking apps.

Banking apps and realtime

From a technology perspective, the banking sector has changed significantly over the past decade and continues to undergo intense digital transformation. Before the 2010s, SMS banking and mobile web were some of the most popular ways to deliver banking experiences. However, with the development of smartphones and the wide-scale adoption of iOS and Android operating systems, mobile banking applications began to appear.

Nowadays, there’s increasing focus on building mobile-first (or even mobile-only) apps, as traditional banks everywhere are improving their core capabilities to meet user expectations of the instantaneous, always-on, digital world. Of course, the banking sector has been taken by storm by so-called neobanks (or disruptor banks) - organizations such as Monzo, Revolut, N26, Chime, or Nubank, that are developing entirely digital, cloud-based mobile banking products.

With people around the world sheltering at home due to the global pandemic, 2020 has further accelerated the transition of banking and financial services to digital channels and has increased the demand for banking apps. According to a report published in October 2020, the number of sessions for banking and payment apps combined increased by an average of 26% in 2020 compared to 2019 (note that in some countries the increase was significantly higher - 142% for Japan, as an example).

In the context of banking apps, we must, of course, consider the importance of realtime. After all, banking is an industry that often deals with critical and time-sensitive data that needs to be acted upon quickly, to make executive decisions in the moment. Furthermore, end-users increasingly expect digital experiences to be responsive and immediate.

To deliver banking apps that are truly realtime, you need to use an event-driven architecture (EDA), which enables data to flow asynchronously between loosely coupled event producers and event consumers. When it comes to building dependable EDAs, Apache Kafka is one of the most popular and reliable solutions worth including in your tech stack.

Why Kafka is a great choice for banking apps

According to its official website, Kafka is used by seven of the ten largest banks and finance companies worldwide, including Goldman Sachs, Rabobank, ING Bank, JPMorgan Chase, Capital One, and American Express, to name just a few.

Created about a decade ago, Kafka is a mature and open-source distributed event streaming platform that is used to build high-performance event-driven pipelines. Kafka uses the pub/sub pattern and acts as a broker to enable asynchronous event-driven communication between various backend components of a system.

Let's now briefly summarize Kafka's key concepts.

Events

Events (also known as records or messages) are Kafka's smallest building blocks. To describe it in the simplest way possible, an event records that something relevant has happened. For example, a user has paid a bill. At the very minimum, a Kafka event consists of a key, a value, and a timestamp. Optionally, it may also contain metadata headers. Here's a basic example of an event:

    {
      "key": "John Doe",
      "value": "Made a payment of $50 to Internet provider",
      "timestamp": "Jan 20, 2021, at 3:45 PM"
    }

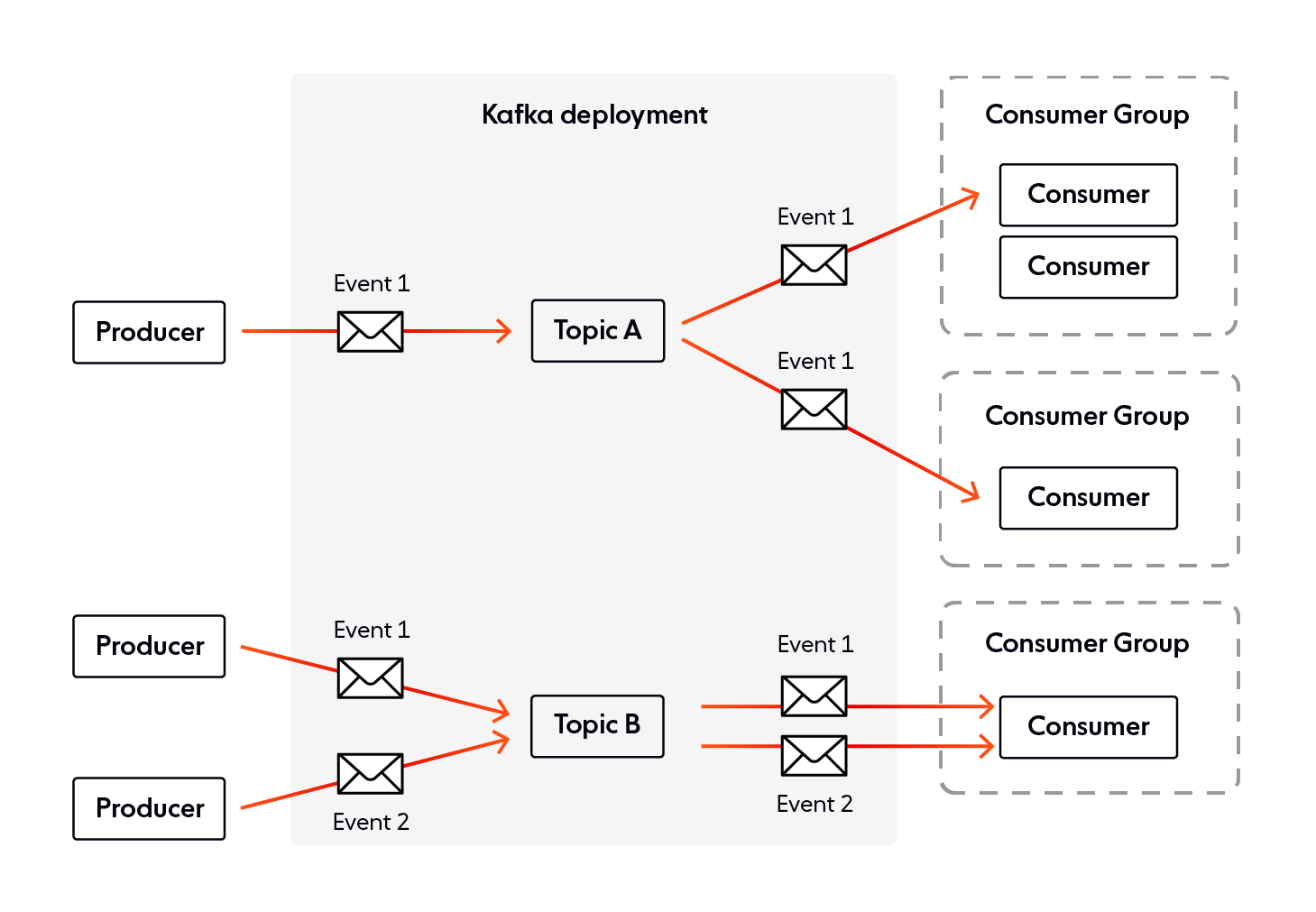
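To make the structure of an event more concrete, here is a minimal sketch in plain Java. Note that `BankingEvent` is a hypothetical type introduced for illustration only; it is not a class from the Kafka client library, and it simply mirrors the key, value, timestamp, and optional headers described above.

```java
import java.time.Instant;
import java.util.Map;

// Hypothetical model of a Kafka-style event (not a class from the Kafka client library).
record BankingEvent(String key, String value, Instant timestamp, Map<String, String> headers) {

    // Compact constructor: treat missing headers as empty and store an immutable copy.
    BankingEvent {
        headers = (headers == null) ? Map.of() : Map.copyOf(headers);
    }

    // Convenience factory for events that carry no metadata headers.
    static BankingEvent of(String key, String value, Instant timestamp) {
        return new BankingEvent(key, value, timestamp, null);
    }
}
```

For instance, `BankingEvent.of("John Doe", "Made a payment of $50 to Internet provider", Instant.parse("2021-01-20T15:45:00Z"))` would represent the payment event from the example, with an empty set of headers.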

Topics

A topic is a log of events stored durably, for as long as needed. The various components of your backend ecosystem can write events to topics and read events from them. Each topic is split into one or more partitions, and Kafka preserves the order of events within each partition. Partitioning allows you to parallelize a topic by spreading its data across multiple Kafka brokers. Since each partition can be placed on a separate machine, various services within your banking system can read from and write to multiple brokers at the same time, which is great from a scalability point of view.
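As a rough illustration of how events with the same key end up in the same partition, here is a small sketch. It is a simplification under stated assumptions: Kafka's built-in partitioner hashes the serialized key with murmur2, whereas this sketch swaps in `Arrays.hashCode` to stay dependency-free. `PartitionSketch` and `partitionFor` are hypothetical names.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

final class PartitionSketch {

    // Maps an event key to a partition index in the range [0, numPartitions).
    // Assumption: Arrays.hashCode stands in for the murmur2 hash Kafka itself uses.
    static int partitionFor(String key, int numPartitions) {
        if (numPartitions <= 0) {
            throw new IllegalArgumentException("numPartitions must be positive");
        }
        int hash = Arrays.hashCode(key.getBytes(StandardCharsets.UTF_8));
        // floorMod keeps the result non-negative even when the hash is negative.
        return Math.floorMod(hash, numPartitions);
    }
}
```

Because the mapping depends only on the key and the partition count, all events for a given account key land in one partition, which is what lets their relative order be preserved.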

Producers and consumers

Producers are services that publish (write) to Kafka topics, while consumers subscribe to Kafka topics to consume (read) events. Since Kafka is a pub/sub solution, producers and consumers are entirely decoupled.
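The decoupling between producers and consumers can be sketched with a tiny in-memory log. This is an illustrative model only, assuming a single partition and no persistence; `InMemoryTopic` is a hypothetical class, not part of any Kafka API. Producers append events, while each consumer tracks its own read position (offset) and pulls events at its own pace.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical single-partition, in-memory stand-in for a topic.
final class InMemoryTopic {
    private final List<String> log = new ArrayList<>();
    private final Map<String, Integer> offsets = new HashMap<>();

    // Producer side: append an event and return the offset it was written at.
    synchronized int publish(String event) {
        log.add(event);
        return log.size() - 1;
    }

    // Consumer side: return the events this consumer has not read yet,
    // then advance its offset to the end of the log.
    synchronized List<String> poll(String consumerId) {
        int from = offsets.getOrDefault(consumerId, 0);
        List<String> batch = new ArrayList<>(log.subList(from, log.size()));
        offsets.put(consumerId, log.size());
        return batch;
    }
}
```

Two consumers polling the same `InMemoryTopic` each receive every event independently, and the producer never needs to know that either of them exists.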

Kafka ecosystem

To enhance and complement its core event streaming capabilities, Kafka leverages a rich ecosystem, with additional components and APIs, like Kafka Streams, ksqlDB, and Kafka Connect.

Kafka Streams enables you to build realtime backend apps and microservices, where the input and output data are stored in Kafka clusters. Streams is used to process (group, aggregate, filter, and enrich) streams of data in realtime.
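To give a feel for the kind of processing involved, here is a sketch of a filter, group, and aggregate step written with plain Java collections. It deliberately does not use the Kafka Streams API: it runs once over a finite list, whereas Kafka Streams applies this style of operation continuously to unbounded streams. `SpendingAggregator` and `Payment` are hypothetical names.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

final class SpendingAggregator {

    // Hypothetical payment event: an account identifier and an amount in cents.
    record Payment(String accountId, long amountCents) {}

    // Drops non-positive amounts, groups the rest by account, and sums each group.
    static Map<String, Long> totalByAccount(List<Payment> payments) {
        return payments.stream()
                .filter(p -> p.amountCents() > 0)
                .collect(Collectors.groupingBy(
                        Payment::accountId,
                        TreeMap::new,
                        Collectors.summingLong(Payment::amountCents)));
    }
}
```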

ksqlDB is a database designed specifically for stream processing apps. You can use ksqlDB to build event streaming applications from Kafka topics by using only SQL statements and queries. As ksqlDB is built on Kafka Streams, any ksqlDB application communicates with a Kafka cluster like any other Kafka Streams application.

Kafka Connect is a tool designed for reliably moving large volumes of data between Kafka and other systems, such as Elasticsearch, Hadoop, or MongoDB. So-called “connectors” are used to transfer data in and out of Kafka. There are two types of connectors:

- Sink connector. Used for streaming data from Kafka topics into another system.

- Source connector. Used for ingesting data from another system into Kafka.

Kafka’s characteristics

Kafka displays characteristics that make it an appealing solution for developing realtime banking apps:

- Low latency. Use cases like fraud detection, realtime payments, and stock trading require fast data delivery. Kafka provides very low end-to-end latency, even when high throughputs and large volumes of data are involved.

- Scalability & high throughput. Large Kafka clusters can scale to hundreds of brokers and thousands of partitions, successfully handling trillions of messages and petabytes of data.

- Data integrity. You can reliably use Kafka to support the most demanding mission-critical banking use cases, where data integrity is of utmost importance. Kafka guarantees message delivery, preserves ordering within each partition, and provides exactly-once processing capabilities (note that Kafka provides at-least-once guarantees by default, so you will have to configure it for exactly-once behavior).

- Durability. In the banking industry, losing data is unacceptable for the vast majority of use cases. Kafka is highly durable and can persist all messages to disk for as long as needed.

- High availability. Kafka is designed with failure in mind (failure is inevitable in distributed systems) and provides failover capabilities. Kafka achieves high availability by replicating the log for each topic's partitions across a configurable number of brokers. Note that replicas can live in different data centers, across different regions.
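The difference between at-least-once and exactly-once matters a great deal when money is involved. With at-least-once delivery, the same event may arrive more than once, so one common way to keep the end result correct is to make processing idempotent. The sketch below illustrates that general idea in plain Java; it is not Kafka's own mechanism (which, as far as we know, relies on producer settings such as `enable.idempotence` and `transactional.id`), and `IdempotentLedger` is a hypothetical class.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical ledger that applies each event at most once, even if it is redelivered.
final class IdempotentLedger {
    private final Set<String> processedIds = new HashSet<>();
    private long balanceCents;

    // Applies the credit only the first time a given event ID is seen.
    // Returns false when the event is a duplicate and was ignored.
    boolean applyCredit(String eventId, long amountCents) {
        if (!processedIds.add(eventId)) {
            return false; // duplicate delivery: balance stays unchanged
        }
        balanceCents += amountCents;
        return true;
    }

    long balanceCents() {
        return balanceCents;
    }
}
```

In a real system the set of processed IDs would need to be stored durably alongside the balance; the in-memory set here is only meant to show the principle.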

Kafka realtime banking use cases

Kafka can be leveraged to deliver various types of realtime banking experiences.

Fraud detection

By using Kafka as an integral part of your backend banking ecosystem, you can efficiently detect fraudulent transactions as they happen, in real time, and take the appropriate steps to ensure the security and integrity of customers’ accounts. The vast majority of legacy fraud detection systems are hard to scale up to process the ever-growing amount of data needed for realtime detection of fraudulent behaviors. Kafka, on the other hand, can scale to handle huge volumes of data.
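As a simple illustration of the kind of check a fraud detection service might run on each incoming event, here is a sketch of a sliding-window "velocity" rule that flags an account when it makes too many transactions in a short period. The rule, the thresholds, and the `VelocityCheck` class are all hypothetical; real fraud detection is considerably more sophisticated. The sketch also assumes that timestamps arrive in order for each account.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Hypothetical rule: more than maxTransactions within windowMillis is suspicious.
final class VelocityCheck {
    private final int maxTransactions;
    private final long windowMillis;
    private final Map<String, Deque<Long>> recentByAccount = new HashMap<>();

    VelocityCheck(int maxTransactions, long windowMillis) {
        this.maxTransactions = maxTransactions;
        this.windowMillis = windowMillis;
    }

    // Records a transaction and reports whether the account now exceeds the limit.
    boolean isSuspicious(String accountId, long timestampMillis) {
        Deque<Long> recent = recentByAccount.computeIfAbsent(accountId, id -> new ArrayDeque<>());
        // Discard transactions that have fallen out of the time window.
        while (!recent.isEmpty() && timestampMillis - recent.peekFirst() >= windowMillis) {
            recent.pollFirst();
        }
        recent.addLast(timestampMillis);
        return recent.size() > maxTransactions;
    }
}
```

With `new VelocityCheck(3, 60_000)`, a fourth transaction on the same account within one minute would be flagged, while transactions spread further apart would not.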

Payment processing

Legacy banking systems usually resort to batch processing when it comes to payments. In comparison, by using Kafka as the backbone of your banking system, you can replace batch processing with realtime payment processing. This way, you provide a more efficient and more streamlined payment process, significantly improving user experience and satisfaction.

Analytics dashboards

Most modern banking apps offer functionality that enables their users to quickly view various stats related to their account, such as the amount of money they’ve spent over the past month. Since Kafka is an event-driven solution that records events as soon as they occur, you can leverage its power to ensure end-user analytics dashboards are always updated in real time with the most recent stats.

In-app support

The vast majority of mobile banking apps come with in-app support features, such as peer-to-peer chat, enabling customers to communicate with bank support personnel in real time. Kafka is an excellent choice as the backend event streaming component of your in-app chat solution, even when high numbers of concurrent users are involved. That’s because Kafka provides high throughput at low latencies, and it comes with data integrity guarantees - message ordering and exactly-once semantics.

Alerts & notifications

Realtime alerts & notifications are essential for any mobile banking application. Just think how critical it is to inform a user when there’s a suspicious activity involving their account, such as a potentially fraudulent ATM withdrawal. Kafka is great for performing analysis on a massive amount of data in real time. By building your notification and alerting services around Kafka, you can deliver notifications and alerts to your end-users as soon as relevant events take place.

Connecting your Kafka pipeline to end-user devices

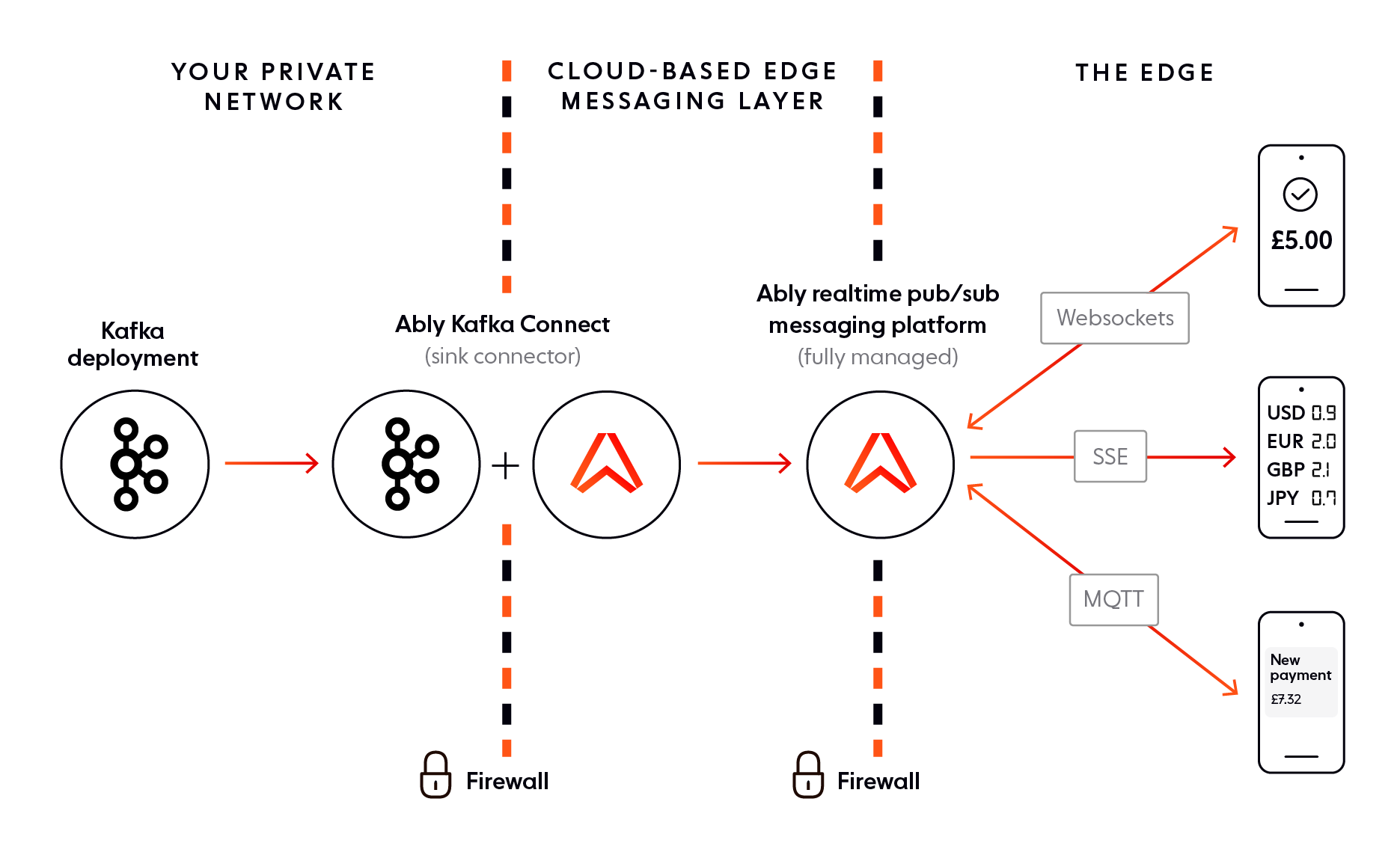

Kafka is a crucial component for building an event-driven, time-ordered, highly available, and fault-tolerant data pipeline. A key thing to bear in mind: Kafka is designed to be used within a secure network (often on-prem), enabling streams of data to flow between microservices, databases, or even monoliths within your backend ecosystem.

However, Kafka is not built to distribute events to consumers across network boundaries. So, the question is, how do you leverage your Kafka pipeline to deliver data to the end-users of your banking app?

The solution is to use Kafka in combination with an intelligent Internet-facing messaging layer optimized for last-mile delivery. Much like Kafka, this messaging layer should be event-driven and provide dependable messaging guarantees. After all, the banking sector operates with time-sensitive and mission-critical data, which is most valuable when it reaches users in real time. For example, think of fraud - whenever potentially fraudulent transactions occur, this information needs to be brought to your users’ attention immediately.

You could arguably build your very own Internet-facing messaging layer to stream data from Kafka to client devices. Ideally, this messaging layer should provide the same level of guarantees and display similar characteristics to Kafka (data integrity, durability, high availability, etc.). You don’t want to degrade the overall dependability of your system by pairing Kafka with a less reliable Internet-facing messaging layer.

However, developing your own proprietary solution is not always a viable option, since architecting and maintaining a dependable Internet-facing messaging layer is a massive, complex, and costly undertaking. It’s often more convenient and less risky to use an established existing solution.

Use case: mobile banking app with Kafka and Ably

Ably is an enterprise-grade far-edge pub/sub messaging platform. In a way, Ably is the cross-firewall equivalent of your backend Kafka pipeline. Ably matches, enhances, and complements Kafka’s capabilities. Our platform offers a simple, dependable, flexible, and secure way to distribute events (from Kafka and many other systems) across firewalls to end-user devices at scale over a global edge network, at consistently low latencies - without any need for you to manage or scale infrastructure.

To demonstrate how simple it is to use Kafka with Ably, here’s an example of how data is consumed from Kafka and published to Ably:

    for (SinkRecord r : records) {
        try {
            Message message = new Message("sink", r.value());
            /* Derive a deterministic message ID from the record's topic, partition, and offset */
            message.id = String.format("%d:%d:%d", r.topic().hashCode(), r.kafkaPartition(), r.kafkaOffset());
            /* Attach Kafka-specific extras to the message, if there are any */
            JsonUtilsObject kafkaExtras = this.kafkaExtras(r);
            if (kafkaExtras.toJson().size() > 0) {
                message.extras = new MessageExtras(JsonUtils.object().add("kafka", kafkaExtras).toJson());
            }
            this.channel.publish(message);
        } catch (AblyException e) {
            /* The Ably client attempts retries itself, so if we do have to handle an exception here, we can assume that it is not retryable */
            throw new ConnectException("ably client failed to publish", e);
        }
    }
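One detail worth highlighting in the snippet above is the message ID, which is built from the record's topic, partition, and offset. The sketch below isolates that step so the behavior is easier to see. `MessageIds` and `idFor` are hypothetical names, and our reading of the intent (that a retried publish of the same Kafka record carries the same ID, so it can be recognized as a duplicate) is an assumption rather than something the snippet states.

```java
// Hypothetical helper that reproduces the ID format used in the snippet above.
final class MessageIds {

    // Builds "<topic hash>:<partition>:<offset>" for a given Kafka record position.
    static String idFor(String topic, int partition, long offset) {
        return String.format("%d:%d:%d", topic.hashCode(), partition, offset);
    }
}
```

Calling `MessageIds.idFor("payments", 2, 1042)` twice yields the same string both times. Keep in mind that two different topic names can share a hash code, so an ID built this way is not strictly guaranteed to be unique across topics.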

And here’s how clients connect to Ably to consume data:

    AblyRealtime ably = new AblyRealtime("ABLY_API_KEY");
    Channel channel = ably.channels.get("test-channel");

    /* Subscribe to messages on channel */
    MessageListener listener = new MessageListener() {
        @Override
        public void onMessage(Message message) {
            System.out.print(message.data);
        }
    };
    channel.subscribe(listener);

Note that the code snippet above is just an example. Ably provides 25+ client library SDKs, targeting every major web and mobile platform. Regardless of your preferred development environment or language, our SDKs keep things simple by offering a consistent and intuitive API.

The benefits of using Ably alongside Kafka

We will now cover the main benefits you gain by using Ably together with Kafka to build consumer-grade realtime mobile banking apps.

Dependability

As we discussed earlier in the article, Kafka displays dependable characteristics that make it desirable as the streaming backbone of your realtime banking app: low latency, data integrity guarantees, durability, high availability.

Ably matches, enhances, and extends Kafka’s capabilities across firewalls. As a cloud-based pub/sub messaging platform, Ably is well equipped to provide superior performance, integrity, reliability, and availability guarantees - underpinned by the Four Pillars of Dependability, as we call them. This mathematically modeled approach to system design ensures critical functionality at scale, enabling you to satisfy even the most stringent mobile banking requirements.

Let’s now see precisely what each pillar entails:

- Performance. Predictability of low latencies to provide certainty in uncertain operating conditions. This translates to <50 ms round trip latency at the 99th percentile. Find out more about Ably’s performance.

- Integrity. Message ordering, guaranteed delivery, and exactly-once semantics are ensured, from the moment a message is published to Ably, all the way to its delivery to consumers. Find out more about Ably’s integrity guarantees.

- Reliability. Fault tolerance at regional and global levels so we can survive multiple failures without outages. 99.999999% message survivability for instance and datacenter failure. Find out more about Ably’s reliability.

- Availability. Ably is meticulously designed to be elastic and highly available. We provide 50% capacity margin for instant surges and a 99.999% uptime SLA. Find out more about Ably’s high availability.
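To put an uptime percentage into perspective, it helps to convert it into a downtime budget. The following sketch does that arithmetic, assuming a non-leap year of 365 days; `UptimeBudget` is a hypothetical helper.

```java
final class UptimeBudget {

    // Converts an uptime percentage into the maximum downtime, in minutes, over a 365-day year.
    static double allowedDowntimeMinutesPerYear(double uptimePercent) {
        double minutesPerYear = 365.0 * 24 * 60; // 525,600 minutes
        return minutesPerYear * (100.0 - uptimePercent) / 100.0;
    }
}
```

For a 99.999% uptime SLA, this works out to roughly 5.26 minutes of downtime per year (525,600 × 0.00001).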

Message routing & security

Since Kafka is designed for machine-to-machine communication within a secure network, it doesn’t provide adequate mechanisms to route and distribute events to client devices across firewalls.

First of all, Kafka works best with a relatively limited number of topics (sharded into partitions), and having a 1:1 mapping between client devices and Kafka partitions is not scalable. Therefore, you will have topics & partitions that store event data about multiple users.

However, when a client device connects to receive transaction information, it only wants and should only be allowed to receive transactions that are relevant for that user/device. But the client doesn’t know the exact partition it needs to receive information from, and Kafka doesn’t have a mechanism that can help with this.

Secondly, even if Kafka were designed to stream data to end-users, allowing client devices to connect directly to topics raises serious security concerns. You don’t want your event streaming and stream processing pipeline to be exposed directly to Internet-facing clients, especially in an industry like banking, which operates with the most sensitive data.

By using Ably, you decouple your backend Kafka deployment from the end-users of your mobile banking app, and gain the following benefits:

- Flexible routing of messages from Kafka topics to Ably channels, which are optimized for delivering data across firewalls to end-user devices.

- Enhanced security, as client devices subscribe to relevant Ably channels instead of subscribing directly to Kafka topics.
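The routing idea can be sketched as follows: events for many users share a Kafka topic, and each event is mapped to a channel dedicated to the user it belongs to, so a device only ever subscribes to its own channel. This is plain Java for illustration; the `transactions:<userId>` naming scheme, `ChannelRouter`, and `TransactionEvent` are hypothetical and not taken from Ably's SDK.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class ChannelRouter {

    // Hypothetical event read from a shared topic: who it belongs to and what it says.
    record TransactionEvent(String userId, String payload) {}

    // Hypothetical naming scheme: one channel per user.
    static String channelFor(String userId) {
        return "transactions:" + userId;
    }

    // Groups events from a shared topic by the per-user channel they should be published to.
    static Map<String, List<String>> route(List<TransactionEvent> events) {
        Map<String, List<String>> byChannel = new LinkedHashMap<>();
        for (TransactionEvent event : events) {
            byChannel.computeIfAbsent(channelFor(event.userId()), c -> new ArrayList<>())
                     .add(event.payload());
        }
        return byChannel;
    }
}
```

Access control then becomes a matter of granting each authenticated user permission to subscribe only to their own channel, rather than exposing the shared topic.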

Additionally, Ably provides multiple security mechanisms suitable for data distribution across network boundaries, from network-level attack mitigation to individual message-level encryption:

- SSL/TLS and 256-bit AES encryption

- Token-based and basic key-based authentication

- Privilege-based access

- DoS protection & rate limiting

- Compliance with information security standards and laws, such as SOC 2 Type 2, HIPAA, and EU GDPR

Scalable pub/sub messaging optimized for end-user devices

As a backend pub/sub event streaming solution, Kafka works best with a low number of topics, and a limited & predictable number of producers and consumers, within a secure network. Kafka is not optimized to deliver data directly to an unknown, but potentially very high and rapidly changing number of end-user devices over the Internet, which is a volatile and unpredictable source of traffic.

In comparison, Ably channels (the equivalent of Kafka topics) are optimized for cross-network communication. Our platform is designed to be dynamically elastic and highly available. Ably can quickly scale horizontally to an unlimited number of channels and millions of concurrent subscribers - with no need to manually provision capacity.

Ably provides rich features that enable you to build seamless and dependable realtime mobile banking experiences:

- Message ordering, delivery, and exactly-once guarantees

- Push notifications

- Message delta compression

- Message history

- Connection recovery with stream resume

- Multi-protocol support - WebSockets, Server-Sent Events, and MQTT

Reduced infrastructure costs and DevOps burden

Building and maintaining a proprietary Internet-facing messaging layer that can extend Kafka beyond the boundaries of your network is difficult, time-consuming, and involves significant DevOps and engineering resources.

By using Ably as the Internet-facing messaging layer, you can focus on developing and innovating your banking app instead of managing and scaling infrastructure. That’s because Ably is a fully managed cloud-based solution, helping you simplify engineering and code complexity, increase development velocity, and speed up time to market.

To learn more about how Ably helps reduce infrastructure, engineering, and DevOps-related costs for organizations spanning various industries, have a look at our case studies.

You might find the Experity case study especially relevant (although not a banking provider, their use case is relatable to what we’re discussing). Experity provides technology solutions for the healthcare industry. One of their core products is a BI dashboard that enables urgent care providers to drive efficiency and enhance patient care in realtime. The data behind Experity’s dashboard is drawn from multiple sources and processed in Kafka.

Experity decided to use Ably as their Internet-facing messaging layer because our platform works seamlessly with Kafka to stream mission-critical and time-sensitive realtime data to end-user devices. Ably extends and enhances Kafka’s guarantees around speed, reliability, integrity, and performance. Furthermore, Ably frees Experity from managing complex realtime infrastructure designed for last-mile delivery. This saves Experity hundreds of hours of development time and enables the organization to channel its resources and focus on building its core offerings.

Banking ecosystem architecture with Kafka and Ably

Before we look at an architecture example with Kafka and Ably, let’s look at how a typical banking ecosystem powered by Kafka might be set up.

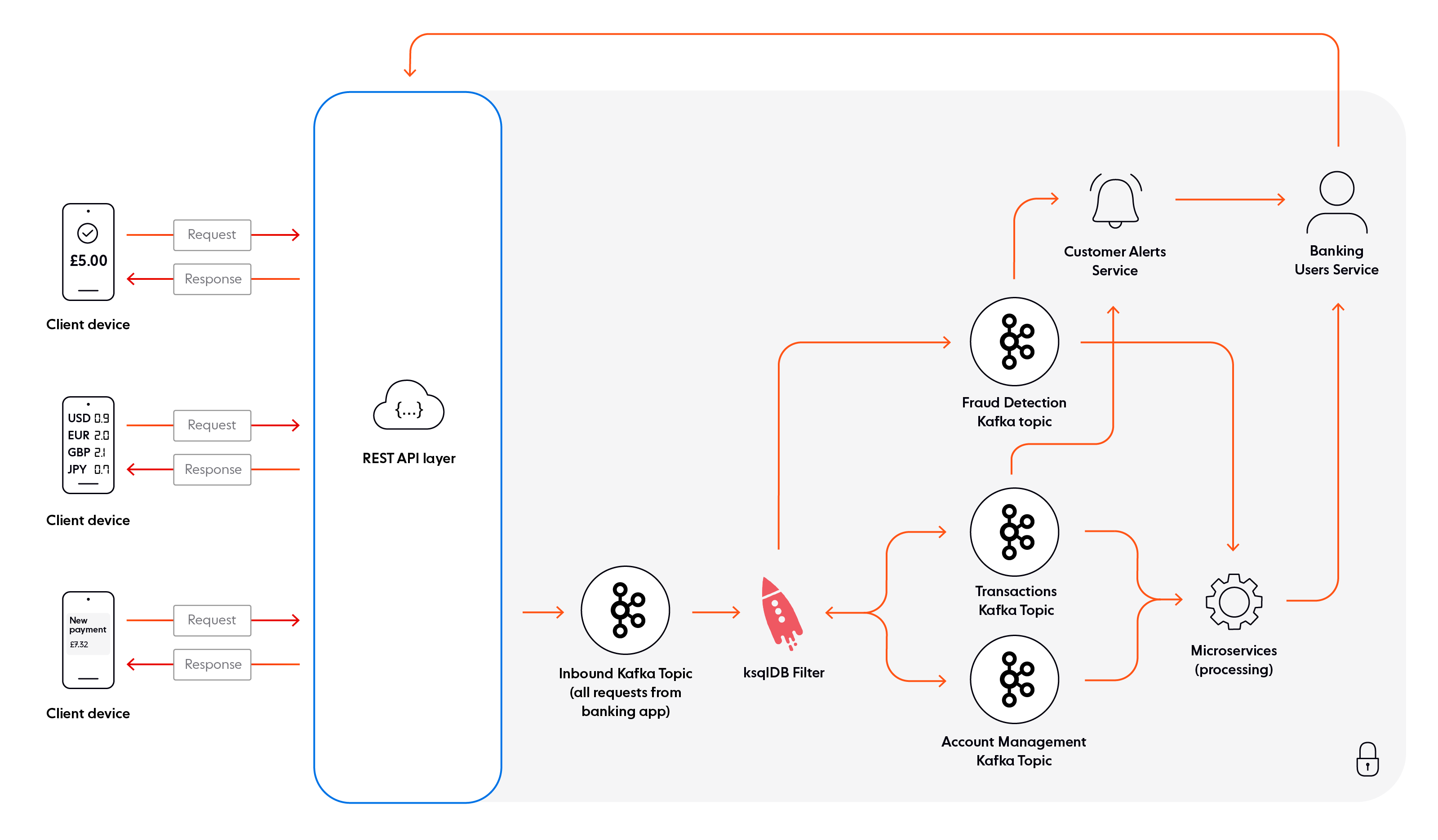

As illustrated above, the backend Kafka-centric pipeline is exposed to end-users through a REST API layer. All incoming requests from mobile banking app users are written to a single inbound Kafka topic. From there, all this data goes to ksqlDB for filtering. Note that ksqlDB is a database purpose-built for stream processing. It allows you to take existing Kafka topics and filter, process, and react to them to create new derived topics.

Once filtered, the generic inbound topic is sharded into other, more specific topics: fraud detection, transactions, and account management. Data from these topics is then consumed and processed by various (micro)services. Any new data is made available to end-users via the REST API layer.

The disadvantage of this architecture is that it’s not event-driven end-to-end, which prevents you from having a banking system that is genuinely realtime. The biggest issue is the use of a RESTful interface to get data into and out of the backend. Banking is an industry where information must reach end-users as fast as possible - think of fraud alerts, for example. Per our diagram, the Kafka pipeline enables you to process data and detect fraudulent transactions in real time, which is great. However, since data is made available to client devices through a REST layer, your customers would only receive fraud alerts when they poll (request) for updates, as opposed to instantly.

Let’s now look at how you might architect your banking system with Kafka and Ably.

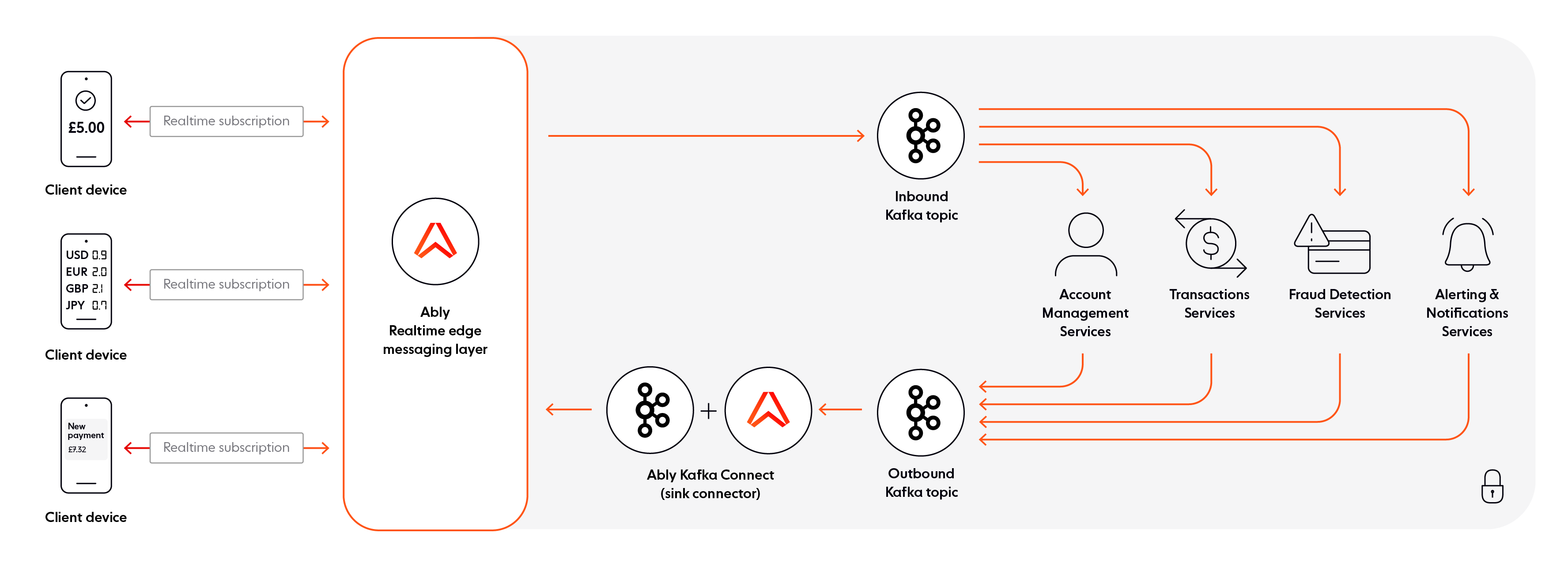

As you can see in the above diagram, the architecture with Kafka and Ably as an Internet-facing messaging layer is simpler overall. There are only two Kafka topics - one for inbound data, and another one for outbound data. Events are consumed from the inbound Kafka topic by various services for processing. Once that’s done, these services write data to an outbound Kafka topic. Note that Ably acts as a realtime message broker, decoupling components and mediating the data flow between your banking app users and your backend Kafka pipeline (both inbound and outbound data go through Ably).

By complementing Kafka with Ably, you obtain an event-driven system that is truly realtime, from your Kafka-driven backend all the way to client devices. Unlike in the first architecture example, the end-users of your banking app no longer have to request (poll) updates. With Ably as a broker in the middle, client devices effectively receive events from your Kafka topics in realtime, with Ably channels as intermediaries.
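The difference between polling and push delivery can be shown with a very small sketch. Instead of asking repeatedly whether anything is new, a subscriber registers a callback once and is invoked as soon as a message is published. This is an in-memory illustration only; `PushChannel` is a hypothetical class and does not represent Ably's implementation.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

// Hypothetical in-memory channel that pushes each message to all subscribers.
final class PushChannel {
    private final List<Consumer<String>> subscribers = new CopyOnWriteArrayList<>();

    // Registers a callback that will be invoked for every message published afterwards.
    void subscribe(Consumer<String> listener) {
        subscribers.add(listener);
    }

    // Delivers the message to every current subscriber immediately.
    void publish(String message) {
        for (Consumer<String> subscriber : subscribers) {
            subscriber.accept(message);
        }
    }
}
```

With this model, a fraud alert published to the channel reaches the subscribed device at publish time, rather than whenever the device next happens to send a request.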

In addition, Ably enables you to keep things simple by removing all the complexity related to maintaining a dependable Internet-facing messaging layer, such as scaling, securing data in transit, recovering connections, etc. As a fully managed realtime messaging platform, Ably is well equipped to handle the most stringent requirements of last-mile delivery. Our feature-rich pub/sub APIs enable secure realtime communication at scale over a global edge network, at consistently low latencies.

Conclusion

Due to its reliable event streaming and stream processing features, Kafka is commonly used by banking and payment service providers to power their digital systems. Kafka’s event-driven nature unlocks new and improved capabilities, such as realtime fraud detection and realtime payment processing.

However, Kafka is not built to distribute events to consumers across network boundaries. If you wish to leverage your Kafka pipeline to deliver banking data to end-user devices, you need to use Kafka in combination with an intelligent Internet-facing messaging layer optimized for last-mile delivery.

As an enterprise-grade far-edge realtime messaging platform, Ably seamlessly extends Kafka across firewalls. Our event-driven platform matches and enhances Kafka’s capabilities, offering a simple, dependable, flexible, and secure way to stream Kafka events to end-user devices at scale over a global edge network, at consistently low latencies - without any need for you to manage or scale infrastructure.

If you’d like to find out more about Ably and how our platform works alongside Kafka, enabling you to build dependable realtime apps and critical banking functionality that satisfies even the most stringent requirements, get in touch or sign up for a free account.

Ably and Kafka resources

- Confluent Blog: Building a Dependable Real-Time Betting App with Confluent Cloud and Ably

- Extend Kafka to end-users at the edge with Ably

- How to stream Kafka messages to Internet-facing clients over WebSockets

- Dependable realtime banking with Kafka and Ably

- Building a realtime ticket booking solution with Kafka, FastAPI, and Ably

- Ably Kafka Connector

- Ably’s Kafka Rule

- Introducing the Fully Featured Scalable Chat App, by Ably’s DevRel Team

- Building a realtime chat app with Next.js and Vercel

- Ably pub/sub messaging

- Ably’s Four Pillars of Dependability