Live chat is everywhere. From connecting distributed workers across continents, through providing an efficient customer service channel, to bringing together online communities, chat is central to how we communicate. This demand puts an unusual level of pressure on chat app operators to make sure that every message reaches its destination, every time.

Slack alone delivers 300,000 messages every second and, when there’s an outage, it becomes headline news. Delivering 24/7 service to potentially millions of users around the world makes building a chat experience a serious architectural challenge. Here, we’ll look at the key considerations for your chat app’s architecture, the structure and components of that architecture, and some of the technology options that can help you build a reliable chat experience.

What is chat app architecture and why is it important?

To people using chat, the entire experience seems to be contained in the app itself. But the chat client that lives on the user’s laptop or mobile device is just the tip of a much larger network of components. For example, the presence service monitors which users are currently connected and how. Another service will look after logging the chat history to a database, while yet another handles searching that chat history.

What job each component does and how those components work together is the chat app architecture. The design of that architecture affects just about everything that the user experiences. Perhaps most importantly, though, it dictates how reliable and how scalable the chat application is.

Key considerations for chat app architecture

As the choices you make will have a long-lasting and wide-ranging impact, it’s important to look at the specific challenges you’ll face when designing your chat app’s architecture. While this isn’t an exhaustive list, it focuses on the considerations that matter most for a chat application.

Ability to scale

Unlike email or even SMS, chat is an almost exclusively realtime medium. That sets a high bar for user expectations. Demand ebbs and flows depending on events, such as the launch of a new product, and also according to the routines of your user base.

Combined, that means your chat app architecture must be able to respond quickly to changes in demand. Delayed or missing messages, for example, are a consequence of an overloaded architecture, and they are a sure way to dent user confidence. But it would be wasteful and costly to run your application on huge servers just in case demand spikes. Instead, you need to build your architecture so that it can scale up and down as needed, and that requires two related architectural choices:

- Microservices architecture: Break your application into multiple small components that each do one thing well and can operate independently of the others.

- Horizontal scaling: Rather than scaling up with ever larger machines, build your application in such a way that multiple instances of each service can run in parallel. That allows you to add more instances when demand is high and close unneeded instances when demand is low.
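To make horizontal scaling more concrete, here is a minimal Python sketch of the calculation an autoscaler might run for a single service. The function name, the per-instance capacity figure, and the 25% headroom default are illustrative assumptions, not recommendations. In practice, your orchestration platform would act on a number like this by starting or stopping instances.

```python
import math


def desired_instances(messages_per_sec: float,
                      capacity_per_instance: float,
                      headroom: float = 0.25,
                      min_instances: int = 2,
                      max_instances: int = 50) -> int:
    """Return how many instances of one service to run for the current load.

    Hypothetical inputs, for illustration only:
    - capacity_per_instance: sustained messages/sec one instance can handle
    - headroom: spare capacity kept for sudden spikes (0.25 means 25%)
    - min_instances: a floor that keeps redundancy even when traffic is quiet
    - max_instances: a ceiling that caps cost during extreme spikes
    """
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    needed = math.ceil(messages_per_sec * (1 + headroom) / capacity_per_instance)
    # Clamp to the configured floor and ceiling.
    return max(min_instances, min(max_instances, needed))


print(desired_instances(12_000, 1_000))  # 15 (busy period)
print(desired_instances(300, 1_000))     # 2 (quiet period: the floor applies)
```

Keeping a floor of at least two instances, even when traffic is quiet, means the same design also provides a basic level of fault tolerance.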

Fault tolerance

Lack of capacity is just one reason for application failure. To your end users, though, it looks just the same whether message delivery is failing because your chat backend is over capacity or because it has gone offline altogether. Beyond scale, there are other situations to consider. What if a DDoS attack severely degrades connectivity to the data center, for example? Inevitably, some form of outage will degrade your app’s performance or take it offline entirely. And downtime is costly.

Creating a horizontally scalable microservices architecture can also improve your application’s ability to withstand faults. At the heart of horizontal scalability is the idea that a single service can exist across multiple servers or virtual machines (VMs). It’s not a huge leap to use that as a way to overcome many causes of downtime. If a single VM suffers a failure and goes offline, others running that same service can pick up the workload. But what if there’s a wider issue that means multiple other instances of that service are also unavailable?

According to research by the Uptime Institute, 43% of significant outages are caused by power failures and, perhaps ironically, a majority of those are due to uninterruptible power supply (UPS) faults. A single power failure could make an entire data center or cloud region unavailable at once. In such cases, also running your services in separate cloud regions or data centers lets you route around infrastructure outages.
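The routing decision behind that kind of failover can be sketched in a few lines. This Python example assumes each instance’s health is already known, for example from periodic health checks. The `Instance` type, the region names, and `pick_instance` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Instance:
    name: str
    region: str
    healthy: bool  # hypothetical: kept up to date by periodic health checks


def pick_instance(instances: list[Instance],
                  preferred_region: str,
                  fallback_regions: list[str]) -> Optional[Instance]:
    """Return a healthy instance, trying the preferred region first and then
    each fallback region in order. Returns None if every region is down."""
    for region in [preferred_region, *fallback_regions]:
        for instance in instances:
            if instance.region == region and instance.healthy:
                return instance
    return None


# A power failure has taken out every instance in eu-west.
fleet = [
    Instance("chat-eu-1", "eu-west", healthy=False),
    Instance("chat-eu-2", "eu-west", healthy=False),
    Instance("chat-us-1", "us-east", healthy=True),
]
print(pick_instance(fleet, "eu-west", ["us-east"]).name)  # chat-us-1
```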

Latency and global reach

Delivering a realtime experience becomes harder as your user base becomes more geographically dispersed and you look to expand your chat app globally. The further someone is from your servers, the greater the latency they’ll experience.

A latency of around 100 ms is roughly the threshold below which a software experience feels instantaneous; beyond that, users notice the delay. According to Verizon, the latency between the UK and Sydney, Australia is around 243 ms. And that’s before any further delay caused by processing on your servers or imperfect network conditions.

The good news is that some of the architectural work needed for fault tolerance and horizontal scalability also sets you up to improve latency. In particular, because you can run multiple copies of the same service, you can deploy them in different geographical locations, closer to your users. The bad news is that actively managing a global network of services, and routing traffic between them appropriately, is substantially more complex than running one or two failover regions.
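Routing each client to its closest region can be sketched as follows. This Python example assumes that the client, or an edge node, has already measured latency to each region. The region names, latency figures, and 20 ms processing time are hypothetical, and the 100 ms budget reflects the threshold mentioned above. The same selection also skips any regions that are down.

```python
from collections.abc import Collection, Mapping

LATENCY_BUDGET_MS = 100.0  # roughly where delays start to become noticeable


def choose_region(rtt_ms: Mapping[str, float],
                  unavailable: Collection[str] = ()) -> tuple[str, float]:
    """Pick the available region with the lowest measured latency."""
    candidates = {region: ms for region, ms in rtt_ms.items()
                  if region not in unavailable}
    if not candidates:
        raise RuntimeError("no regions available")
    best = min(candidates, key=candidates.__getitem__)
    return best, candidates[best]


def within_budget(network_ms: float, processing_ms: float,
                  budget_ms: float = LATENCY_BUDGET_MS) -> bool:
    """Does network delay plus server-side processing fit within the budget?"""
    return network_ms + processing_ms <= budget_ms


# Hypothetical latencies measured from a client in London.
measured = {"eu-west": 12.0, "us-east": 75.0, "ap-southeast": 243.0}
region, ms = choose_region(measured)
print(region, within_budget(ms, processing_ms=20.0))     # eu-west True
print(within_budget(measured["ap-southeast"], 20.0))     # False
print(choose_region(measured, unavailable={"eu-west"}))  # ('us-east', 75.0)
```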

That’s where a platform like Ably comes in. With our own global edge network, our realtime platform maintains reliable data synchronization, at scale, between globally distributed chat clients and your chat backend.

Message synchronization and queuing

Today, it's a basic expectation that messages sent while participants are offline are waiting for them when they log on.

Meeting that expectation means queuing messages for later delivery and making sure all of a user’s messages are available wherever they log in. That requires solutions such as:

- Logging each user’s stream of messages and then replaying them when their device connects.

- Delivering each message to that user’s chat history in the backend and then sending the client the history when it reconnects.
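The first approach can be sketched like this. Each user has an append-only log with per-user serial numbers, and a reconnecting device asks for everything after the last serial it saw. This Python class is hypothetical and in-memory only, so it stands in for the scalable database a real system would use.

```python
from collections import defaultdict


class MessageLog:
    """Append-only message log per user, kept in memory for illustration."""

    def __init__(self) -> None:
        # user_id -> list of (serial, message); serials start at 1 per user
        self._logs: dict[str, list[tuple[int, str]]] = defaultdict(list)

    def append(self, user_id: str, message: str) -> int:
        log = self._logs[user_id]
        serial = len(log) + 1
        log.append((serial, message))
        return serial

    def replay(self, user_id: str, last_seen: int = 0) -> list[tuple[int, str]]:
        """Return, in order, every message after the last serial the device saw."""
        # Serials are 1-based and gapless, so list index == serial - 1.
        return self._logs.get(user_id, [])[last_seen:]


log = MessageLog()
for text in ["hi", "are you around?", "lunch at 1?"]:
    log.append("alice", text)

# Alice's phone last saw serial 1 before going offline.
print(log.replay("alice", last_seen=1))  # [(2, 'are you around?'), (3, 'lunch at 1?')]
```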

That makes a database system, one that scales just as well as the rest of your chat app, a core part of your architecture.

Transport mode

The protocol you choose for transporting data between chat client and server will affect the performance and shape of your backend architecture. Each protocol has its pros and cons. For example, choose WebRTC and, under perfect network conditions, communication could be marginally faster than other choices because it runs over UDP. However, UDP itself offers no delivery or ordering guarantees. If you configure WebRTC data channels for unordered, unreliable delivery to get that speed advantage, you’ll need to build your own logic to confirm message delivery, enforce message order, and handle retries where needed.
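As an illustration of that extra logic, here is a minimal receive-side sketch in Python. It assumes a hypothetical scheme in which the sender numbers messages 0, 1, 2, and so on. The receiver holds back early arrivals until the gap is filled, drops duplicates caused by retries, and reports which sequence numbers to request again. Acknowledgements and retransmission timers on the sending side are not shown.

```python
class ReorderBuffer:
    """Receive-side ordering for an unreliable, unordered transport.

    Assumes a hypothetical scheme where the sender numbers messages 0, 1, 2, ...
    """

    def __init__(self) -> None:
        self.next_seq = 0                   # next sequence number to release
        self.pending: dict[int, str] = {}   # early arrivals, keyed by sequence number

    def receive(self, seq: int, payload: str) -> list[str]:
        """Accept one datagram; return whatever can now be delivered in order."""
        if seq < self.next_seq or seq in self.pending:
            return []  # duplicate, e.g. caused by a retransmission
        self.pending[seq] = payload
        ready = []
        while self.next_seq in self.pending:
            ready.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return ready

    def missing(self) -> list[int]:
        """Gaps the receiver should ask the sender to retransmit."""
        if not self.pending:
            return []
        return [seq for seq in range(self.next_seq, max(self.pending))
                if seq not in self.pending]


buf = ReorderBuffer()
print(buf.receive(1, "b"))  # [] because message 0 hasn't arrived yet
print(buf.missing())        # [0]
print(buf.receive(0, "a"))  # ['a', 'b']
print(buf.receive(0, "a"))  # [] because this is a duplicate from a retry
```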

WebSocket, on the other hand, uses TCP at the transport layer, which guarantees reliable, ordered delivery for as long as a connection stays open. However, WebSocket is not a silver bullet. It doesn’t provide its own mechanism to automatically recover when connections are terminated, so your client needs to handle reconnection and catch up on any messages it missed.
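A common way to handle that recovery is to reconnect with capped exponential backoff plus random jitter, so that thousands of clients don’t all reconnect at the same instant after an outage. This Python sketch only computes the delays, and the base and cap values are illustrative assumptions. After each successful reconnection, the client would also need to tell the server which message it last received so that anything missed can be replayed.

```python
import random
from typing import Optional


def backoff_delays(attempts: int, base: float = 0.5, cap: float = 30.0,
                   rng: Optional[random.Random] = None) -> list[float]:
    """Seconds to wait before each reconnection attempt.

    The ceiling doubles on each attempt (base, 2*base, 4*base, ...) up to `cap`.
    With an `rng`, each delay is drawn uniformly between 0 and the ceiling
    ("full jitter"); without one, the un-jittered ceilings are returned.
    """
    delays = []
    for attempt in range(attempts):
        ceiling = min(cap, base * 2 ** attempt)
        delays.append(rng.uniform(0, ceiling) if rng is not None else ceiling)
    return delays


print(backoff_delays(8))                       # [0.5, 1.0, 2.0, 4.0, 8.0, 16.0, 30.0, 30.0]
print(backoff_delays(3, rng=random.Random()))  # jittered, different each run
```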

Where network conditions are likely to be poor, MQTT is another option. It offers three quality of service (QoS) levels for message delivery, with each one adding more protocol overhead and, so, latency:

- At most once (QoS 0), with no delivery guarantee

- At least once (QoS 1)

- Exactly once (QoS 2)
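The extra overhead at each level comes from the acknowledgement handshake it requires, as defined in the MQTT 3.1.1 and 5.0 specifications. This small Python model only counts those control packets and round trips. It is not an MQTT client.

```python
from enum import IntEnum


class QoS(IntEnum):
    AT_MOST_ONCE = 0
    AT_LEAST_ONCE = 1
    EXACTLY_ONCE = 2


# Control packets exchanged on one hop (client to broker, or broker to
# client) to deliver a single PUBLISH at each QoS level.
HANDSHAKE = {
    QoS.AT_MOST_ONCE: ["PUBLISH"],
    QoS.AT_LEAST_ONCE: ["PUBLISH", "PUBACK"],
    QoS.EXACTLY_ONCE: ["PUBLISH", "PUBREC", "PUBREL", "PUBCOMP"],
}


def packets_for(qos: QoS, messages: int = 1) -> int:
    """Total control packets needed to send `messages` messages at `qos`."""
    return len(HANDSHAKE[qos]) * messages


def round_trips(qos: QoS) -> int:
    """Network round trips before the sender's handshake completes."""
    return len(HANDSHAKE[qos]) // 2


for level in QoS:
    print(level.name, packets_for(level, 100), round_trips(level))
# AT_MOST_ONCE 100 0
# AT_LEAST_ONCE 200 1
# EXACTLY_ONCE 400 2
```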

Implementing push notifications

Just as your chat users will want to catch up on what they’ve missed, there are some messages that they’ll need to see at the moment they arrive. Mobile OS and web browser push notifications draw the user’s focus back to your chat app when, for example, they are tagged in a message or a keyword they follow is mentioned.

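Your application will need a service that tracks which platforms and devices each user is signed in on and triggers notifications through the corresponding push services, such as Apple Push Notification service (APNs) for iOS or Firebase Cloud Messaging (FCM) for Android. Many engineering teams choose to work with an intermediary service, such as Ably, to simplify sending push notifications.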
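Here is a minimal Python sketch of that tracking service. It keeps a hypothetical registry of each user’s devices and turns an @mention into one notification job per device. Handing those jobs to APNs, FCM, web push, or an intermediary service is not shown, and the `@name` mention syntax is an assumption.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Device:
    device_id: str
    platform: str  # e.g. "apns" (iOS), "fcm" (Android) or "web" (browser push)


@dataclass
class PushRegistry:
    # user_id -> devices that user has registered for push notifications
    devices: dict[str, list[Device]] = field(default_factory=dict)

    def register(self, user_id: str, device: Device) -> None:
        self.devices.setdefault(user_id, []).append(device)

    def jobs_for_message(self, sender: str, text: str) -> list[dict]:
        """Build one notification job per device of each @mentioned user."""
        mentioned = dict.fromkeys(re.findall(r"@(\w+)", text))  # de-duplicated, in order
        jobs = []
        for user in mentioned:
            if user == sender:
                continue  # don't notify people about their own messages
            for device in self.devices.get(user, []):
                jobs.append({
                    "platform": device.platform,
                    "device_id": device.device_id,
                    "title": f"{sender} mentioned you",
                    "body": text,
                })
        return jobs


registry = PushRegistry()
registry.register("alice", Device("alice-iphone", "apns"))
registry.register("alice", Device("alice-laptop", "web"))
registry.register("bob", Device("bob-pixel", "fcm"))

jobs = registry.jobs_for_message("bob", "@alice can you review the release notes?")
print([job["platform"] for job in jobs])  # ['apns', 'web']
```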

The structure of chat architecture

While the fundamentals of chat remain the same from one app to the next, use cases vary enormously. At one extreme was Yo, a “chat” app that allowed people to do one thing: send the message “Yo” to other users. At the other end is WeChat, which has grown from instant messaging to now offering payments, social media, video calling, and even games.

Despite that variety, almost all commercial chat applications share a similar client-server architecture. The variety comes in precisely what happens on the client and server sides. For example, while pretty much all modern chat platforms will have a service to store messages, some might use a single relational database, while others use a wide-column store such as Cassandra for its ability to model data as a time series, or even an event streaming platform such as Apache Kafka. How messages move around the various services in the backend also varies greatly. Message streaming tools, such as Apache Kafka and Ably, distribute messages according to their content, source, and destination. That results in an event-driven architecture where the message effectively travels from one service to the next.
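To illustrate routing by content, source, and destination, here is an in-process Python sketch. The service names and rules are hypothetical. In production, a streaming platform such as Kafka or Ably would carry messages between separate services, rather than a single in-memory object making the decisions.

```python
from collections.abc import Callable

Message = dict
Rule = Callable[[Message], bool]


class Router:
    """Content-based routing: each downstream service declares which messages
    it wants, and every message goes to all services whose rule matches."""

    def __init__(self) -> None:
        self.routes: list[tuple[str, Rule]] = []

    def add_route(self, service: str, rule: Rule) -> None:
        self.routes.append((service, rule))

    def route(self, message: Message) -> list[str]:
        return [service for service, rule in self.routes if rule(message)]


router = Router()
router.add_route("history-store", lambda m: True)                           # everything
router.add_route("command-parser", lambda m: m["text"].startswith("/"))     # content
router.add_route("push-notifier", lambda m: "@" in m["text"])               # content
router.add_route("moderation", lambda m: m["channel"].startswith("public:"))  # destination
router.add_route("bot-audit", lambda m: m["sender"].endswith("-bot"))       # source

print(router.route({"channel": "public:lobby", "sender": "bob", "text": "/remind me at 5"}))
# ['history-store', 'command-parser', 'moderation']
```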

7 core components of chat app architecture

Just as the client-server model remains a fundamental pattern, the core components of a chat application’s architecture remain largely unchanged, even as the app’s audience grows and its functionality becomes more ambitious with features like video calling, file transfers, and integrations with third-party services.

And, although they might come in different guises and combinations, such as a home-built auth server vs Okta or an append-only data store vs a document database, there are seven components that make up a typical chat application architecture. Of course, in a distributed microservices architecture each of these components would itself be split into many smaller parts, but the basic pattern remains the same.

1. Application server

This is the heart of your architecture, where the logic specific to your application executes. That could mean running code directly on the server, for example parsing and acting on slash commands such as Slack’s /search command, or triggering other system functionality such as retrieving an image from the media store.
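As a rough illustration, this Python sketch shows the kind of dispatch logic an application server might run for slash commands. The command names and handlers are hypothetical placeholders and do not reflect how Slack implements its commands.

```python
import shlex
from typing import Callable, Optional

# Hypothetical command handlers; real ones would call the search service,
# the reminders service, and so on.
HANDLERS: dict[str, Callable[[list[str]], str]] = {
    "search": lambda args: f"searching history for {' '.join(args)!r}",
    "remind": lambda args: f"reminder set: {' '.join(args)}",
}


def handle_message(text: str) -> Optional[str]:
    """Run a slash command if `text` is one; return None for ordinary chat."""
    if not text.startswith("/"):
        return None
    try:
        parts = shlex.split(text[1:])  # honours quotes: /search "release notes"
    except ValueError:                 # unbalanced quotes: fall back to spaces
        parts = text[1:].split()
    if not parts:
        return None
    name, args = parts[0].lower(), parts[1:]
    handler = HANDLERS.get(name)
    if handler is None:
        return f"unknown command /{name}"
    return handler(args)


print(handle_message('/search "release notes" q3'))  # searching history for 'release notes q3'
print(handle_message("good morning"))                # None
```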

2. Load balancer

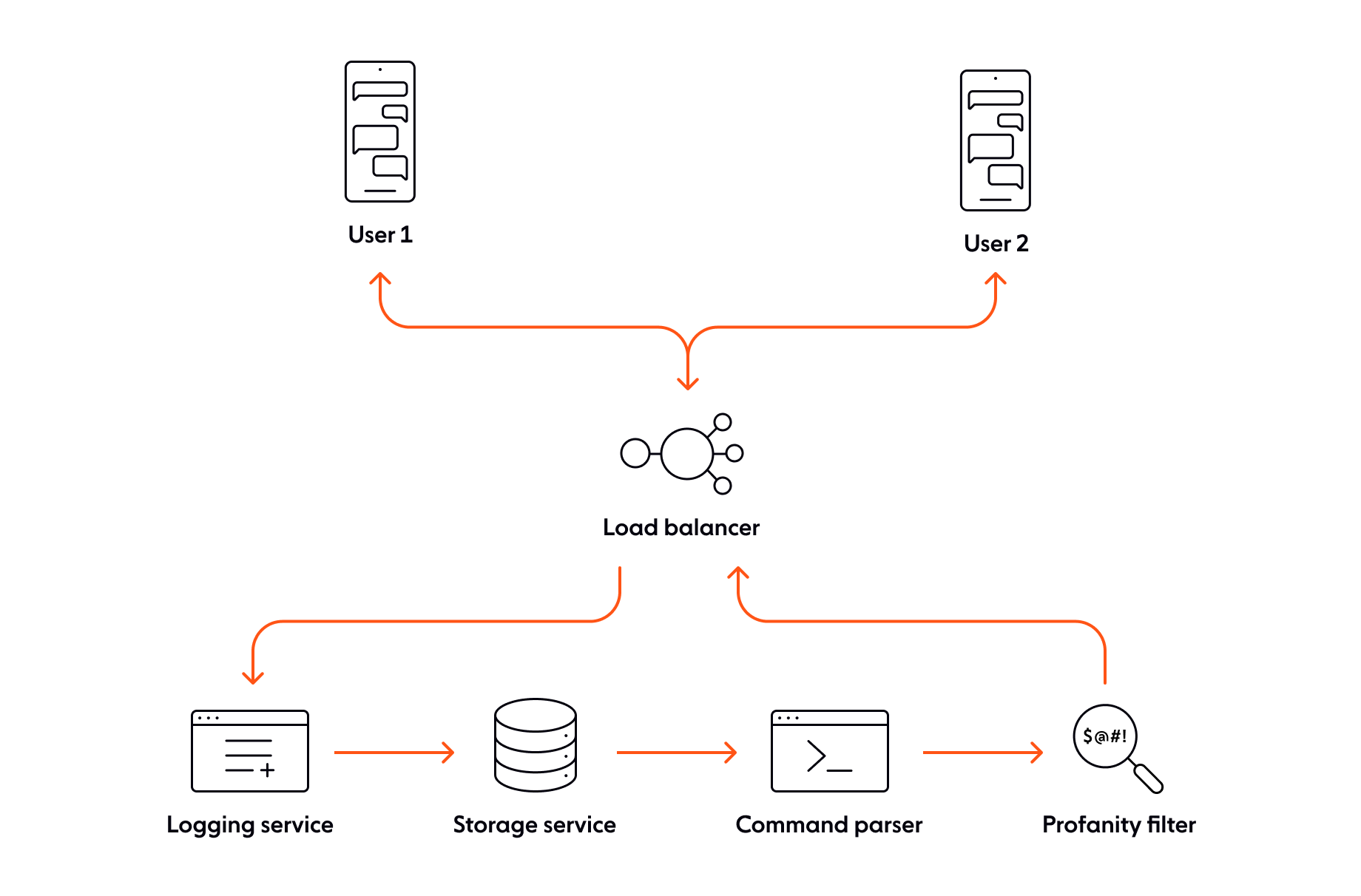

Directing inbound traffic to the right place can quickly become complex. Whether it’s routing to the geographically closest server or distributing load amongst multiple instances in the same data center, your load balancer spreads traffic evenly across your available resources so that you can deliver a consistent service.
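Load balancers support several distribution strategies. In chat, WebSocket connections can stay open for hours, so simple round robin can leave some servers far busier than others. A least-connections strategy is a common alternative. This minimal Python sketch, with hypothetical backend names, shows the idea.

```python
class LeastConnectionsBalancer:
    """Send each new connection to the backend with the fewest open ones."""

    def __init__(self, backends: list[str]) -> None:
        self.active = {backend: 0 for backend in backends}

    def acquire(self) -> str:
        # min() returns the first backend with the lowest count, so ties
        # are broken by the order the backends were listed in.
        backend = min(self.active, key=self.active.__getitem__)
        self.active[backend] += 1
        return backend

    def release(self, backend: str) -> None:
        """Call when a client's connection closes."""
        self.active[backend] -= 1


lb = LeastConnectionsBalancer(["chat-1", "chat-2", "chat-3"])
print([lb.acquire() for _ in range(4)])  # ['chat-1', 'chat-2', 'chat-3', 'chat-1']
lb.release("chat-2")
print(lb.acquire())                      # chat-2
```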

3. Streaming event manager

Arguably the hardest part to get right, the streaming event manager makes sure that messages flow reliably and quickly from one service to the next and then on to the destination clients. For example, after arriving at the backend, a new message might first go to the logging service and then on to the data store. From there, a command parsing service might look for and act on user commands, such as searching message history or setting reminders. In practice, a message will pass through multiple services before the system pushes it out to the relevant subscribers.

It’s a substantial job to coordinate each movement so that messages arrive at and depart from services as efficiently as possible. Whether you build it in-house or use a realtime platform-as-a-service provider such as Ably, the event manager is your chat app’s circulatory system: constantly moving, carrying data to the right place, and driven by instructions from its “heart”, the application server.
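The flow described above can be sketched as an in-process pipeline in Python. Ordered stages handle logging, storage, and command parsing, and the message is then fanned out to the channel’s subscribers. Everything here is a hypothetical simplification. A production event manager runs these stages as separate, horizontally scaled services connected by a streaming platform, with persistence and retries.

```python
from collections import defaultdict
from typing import Callable, Optional

Message = dict
Stage = Callable[[Message], Optional[Message]]


class EventPipeline:
    """Pass each message through ordered stages, then fan it out to the
    channel's subscribers. A stage returns None to consume a message."""

    def __init__(self, stages: list[Stage]) -> None:
        self.stages = stages
        self.subscribers: dict[str, list[list[Message]]] = defaultdict(list)

    def subscribe(self, channel: str) -> list[Message]:
        inbox: list[Message] = []
        self.subscribers[channel].append(inbox)
        return inbox

    def publish(self, message: Message) -> None:
        for stage in self.stages:
            message = stage(message)
            if message is None:
                return  # consumed (e.g. a command), so nothing to broadcast
        for inbox in self.subscribers.get(message["channel"], []):
            inbox.append(message)


audit_log: list[str] = []
store: dict[str, Message] = {}


def log_stage(msg: Message) -> Message:
    audit_log.append(msg["id"])
    return msg


def store_stage(msg: Message) -> Message:
    store[msg["id"]] = msg
    return msg


def command_stage(msg: Message) -> Optional[Message]:
    # Hypothetical: slash commands go to a command service instead of the channel.
    return None if msg["text"].startswith("/") else msg


pipeline = EventPipeline([log_stage, store_stage, command_stage])
alice_inbox = pipeline.subscribe("general")
bob_inbox = pipeline.subscribe("general")
pipeline.publish({"id": "m1", "channel": "general", "text": "hello"})
pipeline.publish({"id": "m2", "channel": "general", "text": "/remind me at 5"})
print(len(alice_inbox), len(bob_inbox), audit_log)  # 1 1 ['m1', 'm2']
```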

4. User authentication and user manager

Research by Verizon shows that 82% of data breaches result from people mishandling passwords. Data from IBM, meanwhile, shows that the average cost of a data breach is $3.86 million. Given the increasingly central role that chat plays in customer service, in replacing email in work environments, and in other commercially sensitive situations, your chat app’s user authentication plays a key role in guarding against bad actors.

The user authentication component of a chat application provides the initial challenge when a user makes their first connection in a particular session and then continues to verify that each subsequent action is authorized. Depending on your particular security needs, that authentication component might call out to a third-party service such as Auth0 or use a library that runs within your own backend, such as PassportJS. You’ll also need to judge the right trade-off between how certain you want to be of someone’s identity and how much friction you add to the user experience. For example, multi-factor authentication using a hardware token will give you greater confidence in someone’s identity but will make it harder for users to register with and log in to your chat application.

Once the authentication component verifies the user’s identity, another architectural choice is between token-based and session-based authentication. With token authentication, the auth component creates a token, often in the form of a JSON Web Token (JWT), that the client then sends in the header of each request. By verifying the token’s signature, the server assigns each request to the relevant logged-in user. Session-based auth, on the other hand, sets a session ID cookie on the client side and checks that ID against a server-side session store on each request. There are pros and cons to both methods. Tokens can be verified without a lookup, which suits horizontally scaled services, but they are hard to revoke before they expire. Sessions need shared server-side state but can be invalidated immediately.

The auth component is also responsible for assigning the permissions each user has, along with other profile information. Typically, the auth component will retrieve those details from the chat application’s data store. Permissions include the channels to which users are subscribed and any special roles they hold, such as moderator.
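A permission check built on that profile information might look like the following Python sketch. The profile fields, the action names, and the rule that deleting other people’s messages requires the moderator role are hypothetical examples. The key design choice is that unknown actions and channels are denied by default.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class UserProfile:
    user_id: str
    channels: set[str] = field(default_factory=set)  # channels the user belongs to
    roles: set[str] = field(default_factory=set)     # e.g. {"moderator"}


# Action -> role required on top of channel membership (None = members only).
REQUIRED_ROLE: dict[str, Optional[str]] = {
    "read": None,
    "post": None,
    "delete_any_message": "moderator",
}


def is_allowed(user: UserProfile, action: str, channel: str) -> bool:
    if channel not in user.channels or action not in REQUIRED_ROLE:
        return False  # deny by default
    role = REQUIRED_ROLE[action]
    return role is None or role in user.roles


alice = UserProfile("alice", channels={"general"})
bob = UserProfile("bob", channels={"general"}, roles={"moderator"})
print(is_allowed(alice, "post", "general"))                # True
print(is_allowed(alice, "delete_any_message", "general"))  # False
print(is_allowed(bob, "delete_any_message", "general"))    # True
```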

5. Presence

At scale, managing user presence is a non-trivial problem. A dedicated presence service keeps track of whether each user is connected and how, which isn’t always clear-cut when someone is logged in on multiple devices. For example, showing that someone is connected using a mobile device helps set expectations around their ability to respond in detail, whether they’re likely to make autocorrect errors, and so on. Other status information, such as someone being “away” or “busy”, also helps to replicate some of the context that is lost from real-world interactions.
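The core bookkeeping of a presence service can be sketched in Python as below. It tracks each user’s open connections and the device type behind each one, so a user stays online until their last device disconnects. The class and method names are hypothetical. A real service would also need to detect dropped connections through heartbeats or timeouts and share this state across many servers.

```python
from collections import defaultdict


class PresenceService:
    def __init__(self) -> None:
        # user_id -> {connection_id: device type such as "desktop" or "mobile"}
        self._connections: dict[str, dict[str, str]] = defaultdict(dict)
        self._status: dict[str, str] = {}  # user-chosen status, e.g. "away"

    def connect(self, user_id: str, connection_id: str, device: str) -> None:
        self._connections[user_id][connection_id] = device

    def disconnect(self, user_id: str, connection_id: str) -> None:
        connections = self._connections.get(user_id)
        if connections is not None:
            connections.pop(connection_id, None)
            if not connections:
                del self._connections[user_id]

    def set_status(self, user_id: str, status: str) -> None:
        self._status[user_id] = status

    def presence(self, user_id: str) -> dict:
        connections = self._connections.get(user_id)
        if not connections:
            return {"state": "offline", "devices": []}
        return {
            "state": self._status.get(user_id, "online"),
            "devices": sorted(set(connections.values())),
        }


presence = PresenceService()
presence.connect("alice", "conn-1", "desktop")
presence.connect("alice", "conn-2", "mobile")
presence.disconnect("alice", "conn-1")
print(presence.presence("alice"))  # {'state': 'online', 'devices': ['mobile']}
```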

6. Media store

Whether it’s original video or meme GIFs, rich media is a core part of many chat experiences. However, storing and transporting those media files can be both costly and hard to scale. One way to reduce costs and improve performance is to maintain a central media store within your own backend architecture and then hand off delivery to a content distribution network (CDN).
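One common way to organize such a media store is content addressing: each file is named by a hash of its bytes. Identical uploads, such as the same meme GIF shared in a hundred channels, are then stored only once, and each URL always refers to the same content, which lets the CDN cache it aggressively. This Python sketch assumes a hypothetical CDN hostname and key layout.

```python
import hashlib
import os

CDN_BASE_URL = "https://cdn.example.com"  # hypothetical CDN in front of the media store


def media_key(data: bytes, filename: str) -> str:
    """Storage key derived from the file's SHA-256 digest, keeping its extension."""
    digest = hashlib.sha256(data).hexdigest()
    extension = os.path.splitext(filename)[1].lower()
    # A two-character prefix spreads objects across many "directories".
    return f"media/{digest[:2]}/{digest}{extension}"


def cdn_url(key: str) -> str:
    return f"{CDN_BASE_URL}/{key}"


gif = b"GIF89a...example bytes..."
first = media_key(gif, "party.GIF")
second = media_key(gif, "party-copy.gif")
print(first == second)  # True: the same bytes are stored only once
print(cdn_url(first))
```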

7. Database

More than likely, the chat backend will have several data stores to handle different types of data. But there needs to be at least one way of storing and querying data such as the chat messages themselves, user status, status messages, and so on.
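As a minimal illustration of a message store, here is a Python sketch using the standard library’s sqlite3 module. SQLite stands in for whatever database you choose at scale, and the schema and function names are hypothetical. The key idea is to index messages by channel and timestamp so that the most common query, fetching a page of recent history, stays cheap.

```python
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    db = sqlite3.connect(path)
    db.executescript("""
        CREATE TABLE IF NOT EXISTS messages (
            id      INTEGER PRIMARY KEY,
            channel TEXT    NOT NULL,
            ts_ms   INTEGER NOT NULL,  -- send time, milliseconds since epoch
            sender  TEXT    NOT NULL,
            body    TEXT    NOT NULL
        );
        CREATE INDEX IF NOT EXISTS idx_messages_channel_ts
            ON messages (channel, ts_ms);
    """)
    return db


def save_message(db: sqlite3.Connection, channel: str, ts_ms: int,
                 sender: str, body: str) -> None:
    with db:  # commits on success, rolls back on error
        db.execute(
            "INSERT INTO messages (channel, ts_ms, sender, body) VALUES (?, ?, ?, ?)",
            (channel, ts_ms, sender, body),
        )


def history(db: sqlite3.Connection, channel: str, before_ms: int,
            limit: int = 50) -> list[tuple]:
    """Up to `limit` messages sent before `before_ms`, oldest first."""
    rows = db.execute(
        "SELECT ts_ms, sender, body FROM messages "
        "WHERE channel = ? AND ts_ms < ? ORDER BY ts_ms DESC LIMIT ?",
        (channel, before_ms, limit),
    ).fetchall()
    return rows[::-1]  # newest-first page, reversed for display


db = open_store()
save_message(db, "general", 1_000, "alice", "hi")
save_message(db, "general", 2_000, "bob", "hey")
save_message(db, "general", 3_000, "alice", "lunch?")
save_message(db, "random", 1_500, "carol", "unrelated")
print(history(db, "general", before_ms=3_000, limit=2))
# [(1000, 'alice', 'hi'), (2000, 'bob', 'hey')]
```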

Options for delivering realtime chat applications

Whether you’re integrating chat into an existing product or looking to launch a new dedicated chat service, you’re almost spoilt for choice when it comes to choosing how to build your server-side application architecture. Let’s look at the three main options available:

- Build it all in-house: Building everything from scratch using your own engineering team can seem like a tempting option. On paper, it looks like you get full control of the experience you deliver to users and there’s less to pay out in software licensing fees. Seen from a different angle, though, that freedom comes with the responsibility to plan, build, and maintain everything in-house.

- Integrate proven third-party tools: Alternatively, you can retain control without dividing your team’s attention between the competing and varied needs of each specialized part of your chat app’s architecture. Employing a CDN for rich media and a realtime PaaS, such as our own, are two ways you can hand off some of the most challenging aspects of delivering chat.

- Use a white-label chat platform: If your chat needs are relatively straightforward and you are happy to trust the future direction of your functionality to another company’s product team, you can integrate a white-label chat platform into your existing application. This option reduces your engineering costs at the expense of flexibility and ongoing licensing costs.

When you come to choose between these options, consider how well each one can help you deliver on the key considerations we looked at earlier, such as low latency delivery, global reach, high uptime, and rapid scaling. Check out our blog for more on how to choose the right solution for your needs.

Ably’s approach to chat app architecture

At Ably, we understand the importance and challenges of delivering scalable, reliable, feature-rich chat applications – and companies like HubSpot use Ably to serve hundreds of thousands of chat sessions every month.

Whether it’s for customer support, connecting users of a multi-sided marketplace, engaging gamers, or delivering on unique requirements such as HIPAA-compliant chat, Ably handles the hard infrastructure while giving you the flexibility to tailor the solution you need, including:

- Globally distributed network: Seven core routing data centers and 385 edge acceleration points of presence provide worldwide low-latency reach.

- Pub-sub architecture: Ideally suited to chat applications, our pub-sub implementation guarantees exactly-once, in-order message delivery.

- Rich integrations: Work with your existing tools and platforms through webhooks, APIs, and language-specific SDKs.

Build scalable chat applications with Ably

Building your chat application with Ably means benefiting from global infrastructure, ongoing improvements from a specialist realtime engineering team, and the freedom to build the right functionality for your audience.

Get started in just a few minutes:

- Sign up for your free Ably account.

- Follow our tutorial for building a scalable chat app.

- Then go on to learn more with our clear tutorials on how to build rich chat functionality.