Let's talk about a problem baseball fans know all too well: you're stuck in traffic or buried under a mountain of work, and there's no way you're going to make it home in time for the game. An average baseball game clocks in around three hours, and sometimes, life doesn't afford you that kind of leisure time. Here, apps promising live game updates become a lifeline for the busy fan. However, that's where we hit a snag. Despite the huge convenience of these "realtime updates," some of these apps just can't deliver the real deal.

That’s because realtime updates are a tricky business. When users are counting on these updates, latency and scalability become huge hurdles to clear. Add data integrity into the mix, and you've got a triple threat.

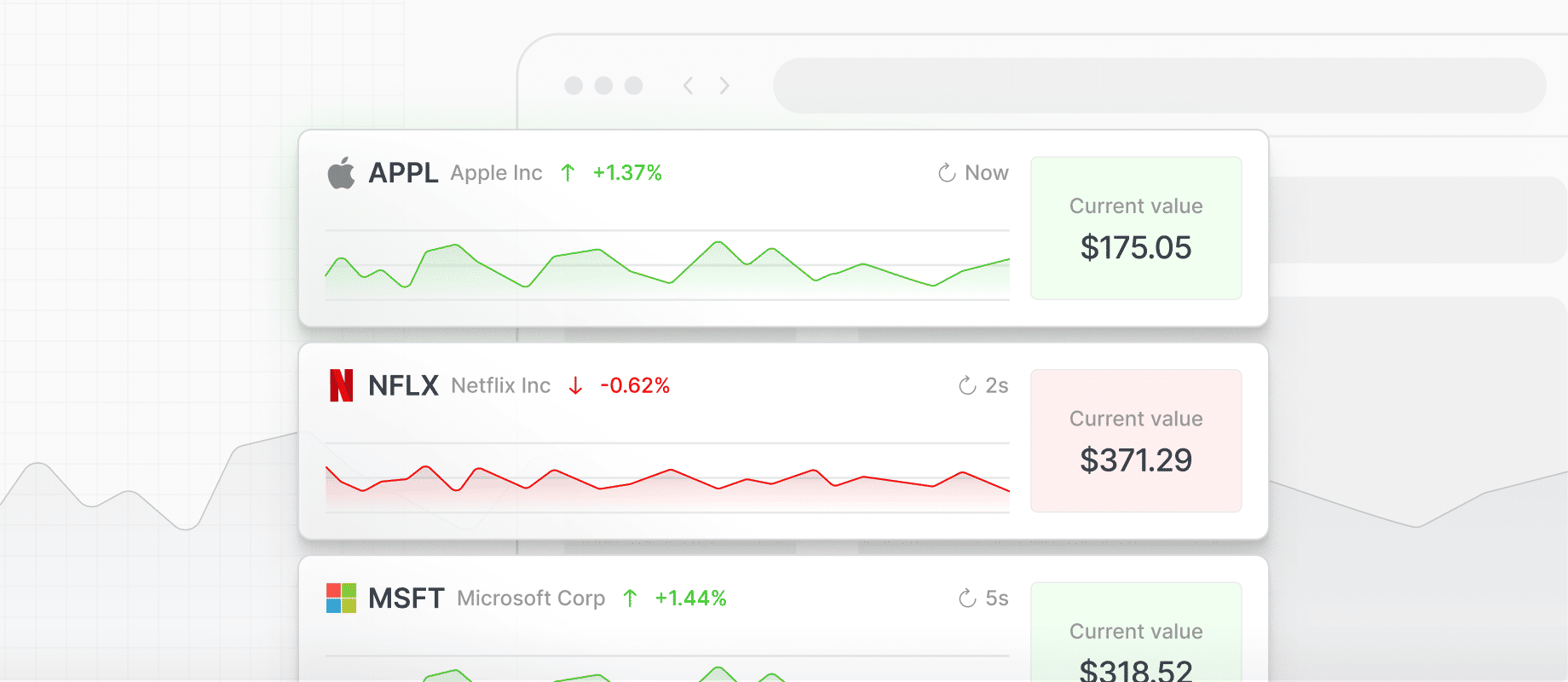

Let's take logistics companies as an example. Those live delivery updates are their bread and butter for keeping customers happy. Slow or late updates? Forget about it - they don't hold a candle to live tracking. Traders are another case. Instant updates on stock changes can make or break their decisions to buy or sell, while delays or mix-ups could mean missing out on a huge opportunity. Coming back to our dear baseball fan, hearing about a score while it happens? Thrilling! Getting the news after everyone else? What's the point, really?

That’s the hard truth. The value of up-to-the-second data is enormous - but it rapidly decays as the gap grows between data availability and delivery. If you want to build realtime updates into your application, it really does need to be realtime.

In this article, we’ll explain what realtime updates are and why they matter. We’ll go deeper into what successful realtime updates look like, and walk through some of the requirements for effectively providing them.

What are realtime updates and why do they matter?

In the simplest terms, realtime updates are broadcasts. They take data from the backend and fling it out to a bunch of users, all at the same time.

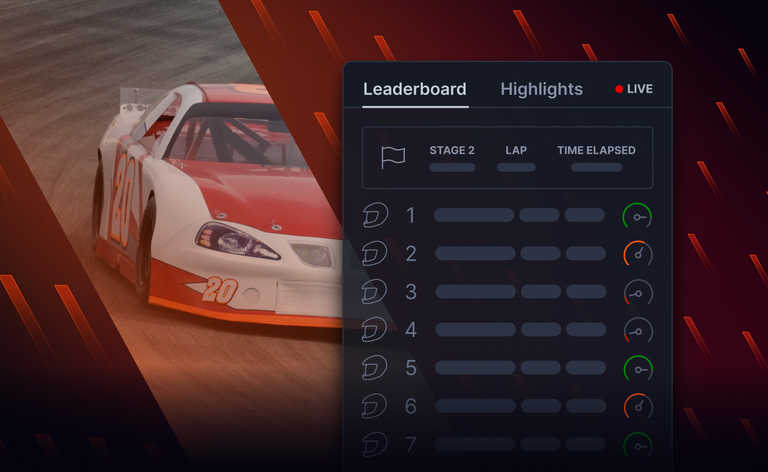
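To make the broadcast idea concrete, here is a minimal in-process sketch of the fan-out pattern: one publish call, many subscribers. The `Broadcaster` class, its method names, and the `"mlb:scores"` channel name are hypothetical and chosen purely for illustration; a production system would push over the network rather than call functions in the same process.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Callback = Callable[[str], None]


class Broadcaster:
    """Hypothetical in-memory fan-out: one publish reaches every subscriber."""

    def __init__(self) -> None:
        # Maps a channel name to the callbacks subscribed to it.
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, channel: str, callback: Callback) -> None:
        """Register a callback that is invoked for each message on the channel."""
        self._subscribers[channel].append(callback)

    def publish(self, channel: str, message: str) -> int:
        """Send the message to every subscriber; return how many received it."""
        subscribers = self._subscribers.get(channel, [])
        for callback in subscribers:
            callback(message)
        return len(subscribers)
```

Two fans subscribing to `"mlb:scores"` would each receive the same message from a single `publish("mlb:scores", ...)` call, which is the "all at the same time" behavior described above, minus the hard parts (networks, scale, and failure) that the rest of this article covers.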

These realtime updates become crucial in situations where timeliness is key. Let's consider a few examples: sports scores, odds changes, live traffic conditions, auction prices, and stock market fluctuations. These are all sources of information where updates are incredibly valuable the moment they happen, but lose their worth quite quickly if they're even a tiny bit late.

Because the value decay is steep and rapid, users won't think twice about ditching an app if the one they’re using either can’t scale to support all the updates that matter or can’t reliably send updates as new data emerges.

What do successful realtime updates look like?

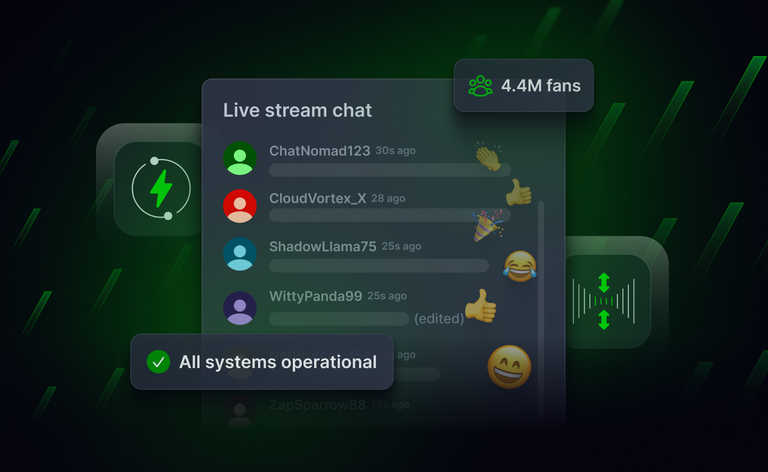

Successful realtime updates deliver new data points reliably and at scale, through an experience that truly feels realtime.

Striking the right balance in these aspects is key because, while the data itself stays constant (take "Aaron Judge hit a home run," for instance), the value of that data can diminish pretty swiftly. A baseball fan not only wants to be up to date, they also want to be able to discuss the latest news with friends - which is impossible until they get that update.

The end-goal is a realtime updates system that can, for example, provide continuous stock updates to customers. It doesn't matter if there are two users or two million, or if there are five stocks being tracked or five thousand. The users shouldn't have to do a thing - just sit back and watch the updates come flooding in.

The three requirements for delivering successful realtime updates

Realtime updates are easy to imagine but hard to implement. At first glance, the problem is simple: quickly send data across machines and across long distances.

But that’s a solved problem. The very first use case for the Internet, after all, was sending a more or less instantaneous message from one computer to another.

The hard part – a technical challenge that’s taken decades following that first message to figure out – is tracking and sending updates at enterprise, global scale, and doing so reliably and consistently over time.

This is where many realtime updates systems have failed. It’s not that hard – and it hasn’t been for a long time – to make an application or website that delivers live updates to 1,000 users all based in the same city. But if there are ten times or one hundred times as many users distributed across the world, all requesting different updates at the same time, the problem gets dramatically harder. Solving even one problem or building one component requires addressing another.

For example, to deliver on the promise of a realtime experience, your system needs to be reliable. But when you add a stack of servers to improve reliability, another problem emerges: How do you guarantee low latency and synchronize message delivery when your system is replicating data across different data centers? Further, how do you guarantee that messages won’t be repeated if the same message is copied into multiple data centers? Then, as the user base grows, scalability and reliability become issues again and the cycle can repeat.

Latency, data integrity, and scalability - which we dig into below - aren’t novel software challenges, but addressing them in a realtime context requires a novel approach. At the core, the problem isn’t handling any one of these challenges - the problem is delivering on all the guarantees of a realtime experience at once.

Low latency

When you’re building a system to deliver realtime updates, you can’t overestimate the value of maintaining low latency. Users – no matter how many are waiting for updates and no matter where they are based – need to receive new data at low latencies.

The typical way of delivering data – having servers communicate directly with devices – just doesn’t work at scale. There’s little room for compromise here: from a development perspective, the difference between 100ms and 300ms is minuscule, but from a user perspective, latencies need to be 100ms or lower to be perceived as “instantaneous” or “live.”
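One way to hold yourself to that 100ms figure is to check a high percentile of measured delivery latencies rather than the average, since a handful of slow deliveries is exactly what users notice. The sketch below is only an illustration: the function names, the nearest-rank percentile method, and the choice of the 99th percentile are assumptions, not something this article prescribes.

```python
import math
from typing import Sequence

LIVE_BUDGET_MS = 100.0  # the "instantaneous" threshold quoted above


def percentile(samples_ms: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of latency samples given in milliseconds."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(samples_ms)
    # Nearest-rank method: the smallest value with at least pct% of samples at or below it.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def feels_live(
    samples_ms: Sequence[float],
    pct: float = 99.0,
    budget_ms: float = LIVE_BUDGET_MS,
) -> bool:
    """True if the chosen percentile of delivery latency fits within the budget."""
    return percentile(samples_ms, pct) <= budget_ms
```

With samples where nine deliveries land under 100ms and one takes 300ms, the median looks healthy while the 99th percentile check fails, which matches the experience of the one user who saw the score late.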

Maintaining data integrity

Even if you build a realtime update system that’s operationally reliable and feels “live,” the value of the data and the experience collapses without data integrity.

A realtime update that tells a stock broker about a deal that just closed is valuable. But if that update is followed by the same update, the whole experience is confusing. And if that update is followed by a different update, one that happened before the news about the deal, then the stock broker will be more confused than when they started.

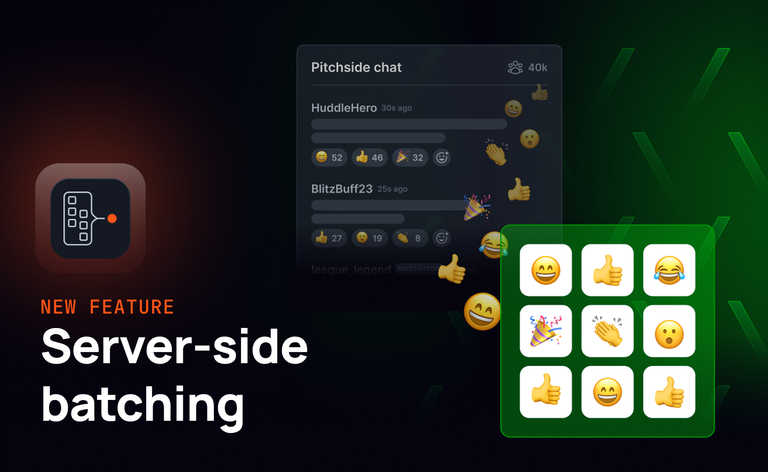

Realtime update systems need to deliver all messages, deliver those messages in the right order, and deliver each update only once. Anything else compromises the experience.
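A common way to approach ordering and deduplication on the receiving side is to attach a sequence number to every message. The sketch below assumes, hypothetically, that the publisher numbers the messages on a channel 1, 2, 3, and so on; the `OrderedReceiver` class is illustrative and not any particular product's API. It drops repeats and holds back out-of-order messages until the gap is filled.

```python
from typing import Dict, List


class OrderedReceiver:
    """Hypothetical client-side buffer: releases messages in order, exactly once.

    Assumes the publisher tags each message with a sequence number
    that starts at 1 and increases by 1 per message.
    """

    def __init__(self) -> None:
        self._next_seq = 1                  # next sequence number to release
        self._pending: Dict[int, str] = {}  # messages that arrived early

    def receive(self, seq: int, payload: str) -> List[str]:
        """Accept one message; return the payloads that are now safe to show."""
        if seq < self._next_seq or seq in self._pending:
            return []  # duplicate: already released or already buffered
        self._pending[seq] = payload
        ready: List[str] = []
        # Release the longest run of consecutive messages starting at _next_seq.
        while self._next_seq in self._pending:
            ready.append(self._pending.pop(self._next_seq))
            self._next_seq += 1
        return ready
```

This covers "in the right order" and "only once." Delivering all messages additionally requires a way to re-request a missing sequence number when a gap persists, which is left out of this sketch.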

The challenge, however, gets much harder the more users you serve and the more distributed they are. And when many of these users are on mobile devices – using sometimes unreliable mobile networks – this gets even harder.

Operating at scale

Scalability is clearly important, but if you only think of scalability as a linear problem, as serving 100 users in the early days and 10,000 users as your company grows, then you won’t be truly scalable.

True scalability includes the ability to scale with traffic spikes and user surges.

Sometimes surges will be predictable, such as a big upcoming football game. Often, though, fluctuations will be unpredictable, and in those situations the infrastructure powering realtime updates needs to react to ongoing demand and shift to handle growth in concurrent users.
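As a rough illustration of what reacting to demand can mean in practice, the sketch below recomputes how many server nodes are needed as the number of concurrent users changes, with spare headroom to absorb a spike. Everything here is a hypothetical assumption for the sake of the example: the `nodes_needed` function, the per-node capacity, and the 30% default headroom are not figures from this article.

```python
import math


def nodes_needed(
    concurrent_users: int,
    users_per_node: int,
    headroom: float = 0.3,
    min_nodes: int = 1,
) -> int:
    """Nodes required to serve the current load plus spare capacity.

    headroom is the fraction of extra capacity kept free for sudden spikes
    (0.3 means provisioning for 30% more users than are connected now).
    """
    if concurrent_users < 0 or users_per_node <= 0 or headroom < 0:
        raise ValueError("invalid capacity parameters")
    target = math.ceil(concurrent_users * (1 + headroom) / users_per_node)
    # Never scale below the configured floor, even when nobody is connected.
    return max(min_nodes, target)
```

An autoscaler would call something like this on a short interval with the live connection count, so that a surge during a big game raises the node count within moments instead of waiting for someone to notice.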

How to deliver reliable realtime updates at scale

Providing realtime updates is not for the faint of heart.

In many circumstances, services don’t need to be perfectly reliable or scalable. Twitter remained successful through the “fail whale” days, and if a 2FA email from a banking app takes a few minutes to hit the inbox, users might grumble - but they won’t switch services.

Realtime updates don’t have the same flexibility. For the use cases we’ve described here, much if not most of the value depends on the data being truly realtime and on users being able to reliably access it. Even small delays, short downtimes, and imperfect integrity compromise the user experience.

Building an in-house infrastructure for realtime updates is not impossible but it’s difficult and expensive, requiring a steep upfront investment, long-term maintenance, and careful consideration of how each component of the infrastructure affects another. Even experienced engineers can struggle in this environment because these already difficult challenges tend to cascade and compound.

In our State of Serverless Websocket Infrastructure report, for example, we found that it typically takes companies 10.2 person-months to build a realtime infrastructure project. Worse, however, is that this long timeline isn’t even predictable: 41% of respondents reported missing deadlines and 46% reported that costs ballooned enough during the work that the project’s success was at risk.

That’s why businesses including HubSpot, BlueJeans, and Tennis Australia rely on Ably. With Ably, companies can deliver realtime experiences without needing to build the infrastructure themselves and without needing to worry about all the edge cases we’ve learned from.

To learn more, read about how you can use Ably’s APIs and SDKs to broadcast realtime data with predictable low latency and guaranteed integrity.

This is the first in a series of four blog posts that look at what it takes to deliver realtime updates to end users. In coming posts, we'll take a closer look at why low latency, data integrity, and elasticity are so important when you're trying to deliver realtime updates to end users at scale.