AWS Lambda is a serverless compute service that allows developers to run their code without having to manage the underlying infrastructure. With Lambda, developers can upload their code and the service takes care of scaling, provisioning, and managing the servers required to run the code.

This means developers can focus on writing code rather than on infrastructure, which lets them build and deploy applications quickly and efficiently.

It also allows for more efficient use of resources: the service automatically scales up or down to meet demand, and because you pay only for the compute time your code actually uses, this can result in cost savings. Additionally, Lambda integrates with many other AWS services, giving developers a wide range of tools for building and deploying their applications.

In this blog post, we will dig deeper into Lambdas, why they’re useful, and what role they can play in creating more interactive, realtime experiences across the web.

Lambdas in realtime experiences

Almost every user-facing application these days is expected to feel responsive and update in realtime. A few examples:

- Chat applications, where users expect to see messages and reactions as they happen.

- Social media, where new posts and comments should load seamlessly.

- Games, where player positions and actions should be reflected as they happen.

- Collaborative spaces such as shared text documents, where other users’ changes should be visible instantly to avoid desynchronization.

Even experiences that have historically been viewed as fairly static, such as an online shop, are now expected to be more interactive, whether that means an accurate count of remaining stock, new deals, or up-to-date comments and reviews.

Lambdas are an incredibly powerful tool in all of these scenarios. In chat, for example, a message usually needs some manipulation before it is shared with other users: words may need to be filtered, images inserted, formatting applied, and so on. Rather than managing various backend servers and scaling them up and down yourself, you can set up a Lambda function that receives a message, applies these transformations, and returns the result.
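As a rough sketch of that transformation step, the core of such a Lambda function could be a chain of small, pure functions, each taking the message text and returning new text. The banned-word list and the whitespace rule below are hypothetical examples chosen for illustration:

```javascript
// Hypothetical banned-word list; entries are assumed to contain no regex metacharacters.
const BANNED_WORDS = ["potato", "cabbage"];

// Trim the message and collapse runs of whitespace into single spaces.
const normalizeWhitespace = (text) => text.trim().replace(/\s+/g, " ");

// Replace banned words (whole words, any letter case) with asterisks of equal length.
const BANNED_PATTERN = new RegExp(`\\b(?:${BANNED_WORDS.join("|")})\\b`, "gi");
const maskBannedWords = (text) =>
  text.replace(BANNED_PATTERN, (match) => "*".repeat(match.length));

// The transformations run in order, each receiving the previous one's output.
const TRANSFORMS = [normalizeWhitespace, maskBannedWords];

function transformMessage(text) {
  return TRANSFORMS.reduce((current, transform) => transform(current), text);
}

console.log(transformMessage("  I   don't like   Cabbage "));
// prints: I don't like *******
```

Because each step is a plain function, adding another transformation means appending one function to the array. Note that `\b` requires a word boundary, so ‘potatoes’ is left untouched, which may or may not be what you want.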

The issue is that the message doesn’t just need to go back to the client that sent it; it needs to be sent to all relevant clients. In a chat room, for example, everyone else in the room needs to receive it.

This is where we need some form of broker to handle connections, keep track of which clients should receive which messages, and so on. The broker can receive messages from clients, pass them to our Lambda function, and then publish the result to the relevant clients.

Additionally, to connect and communicate with end-users in realtime, we need a communication protocol that allows for low-latency, bidirectional messaging between devices and backend services.

WebSockets + Lambda: The perfect match for realtime experiences

WebSockets are ideal for this scenario. Not only is the protocol bidirectional, allowing both clients and servers to send messages whenever they’re ready, but it is also widely supported across modern browsers and backend stacks.

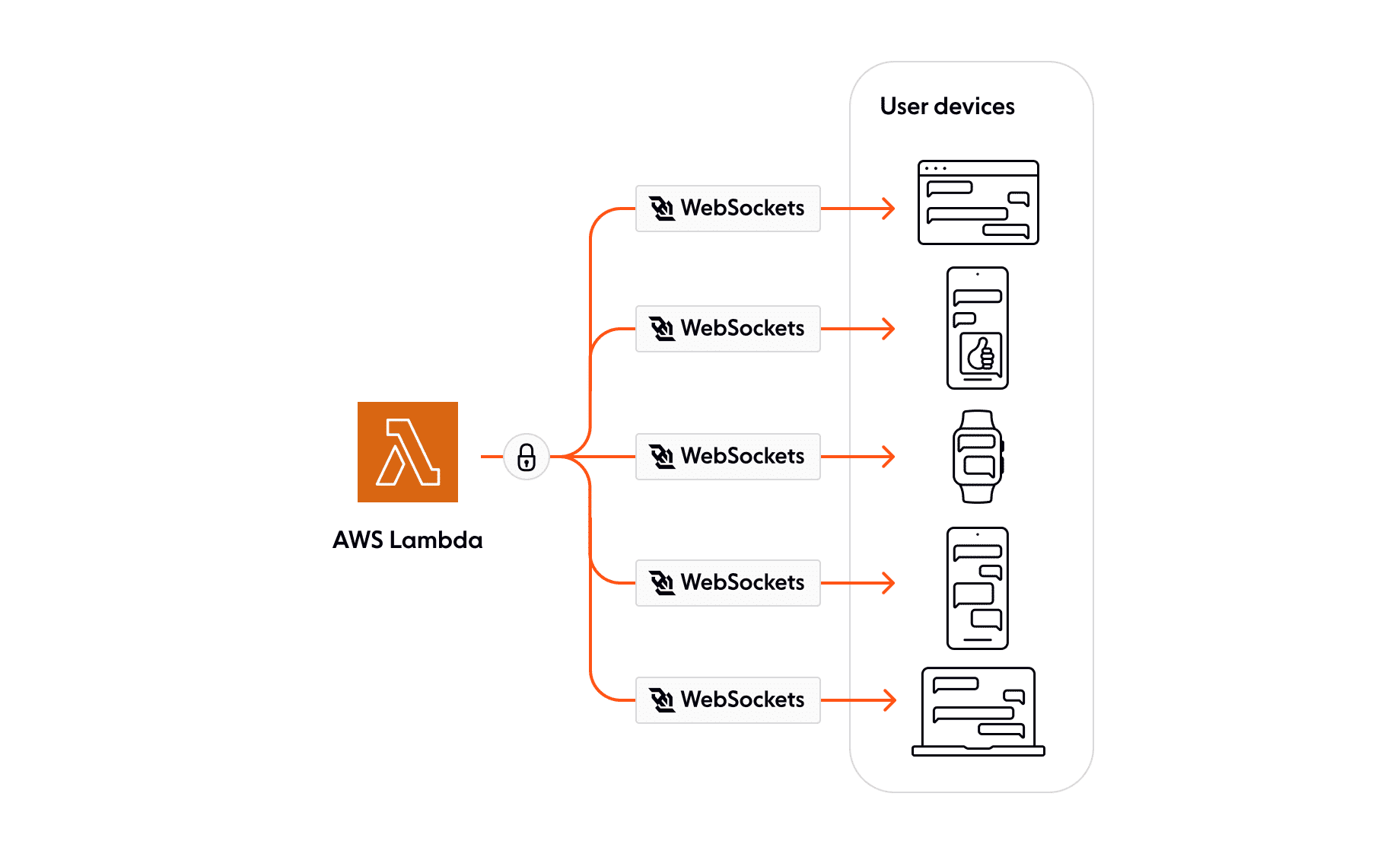

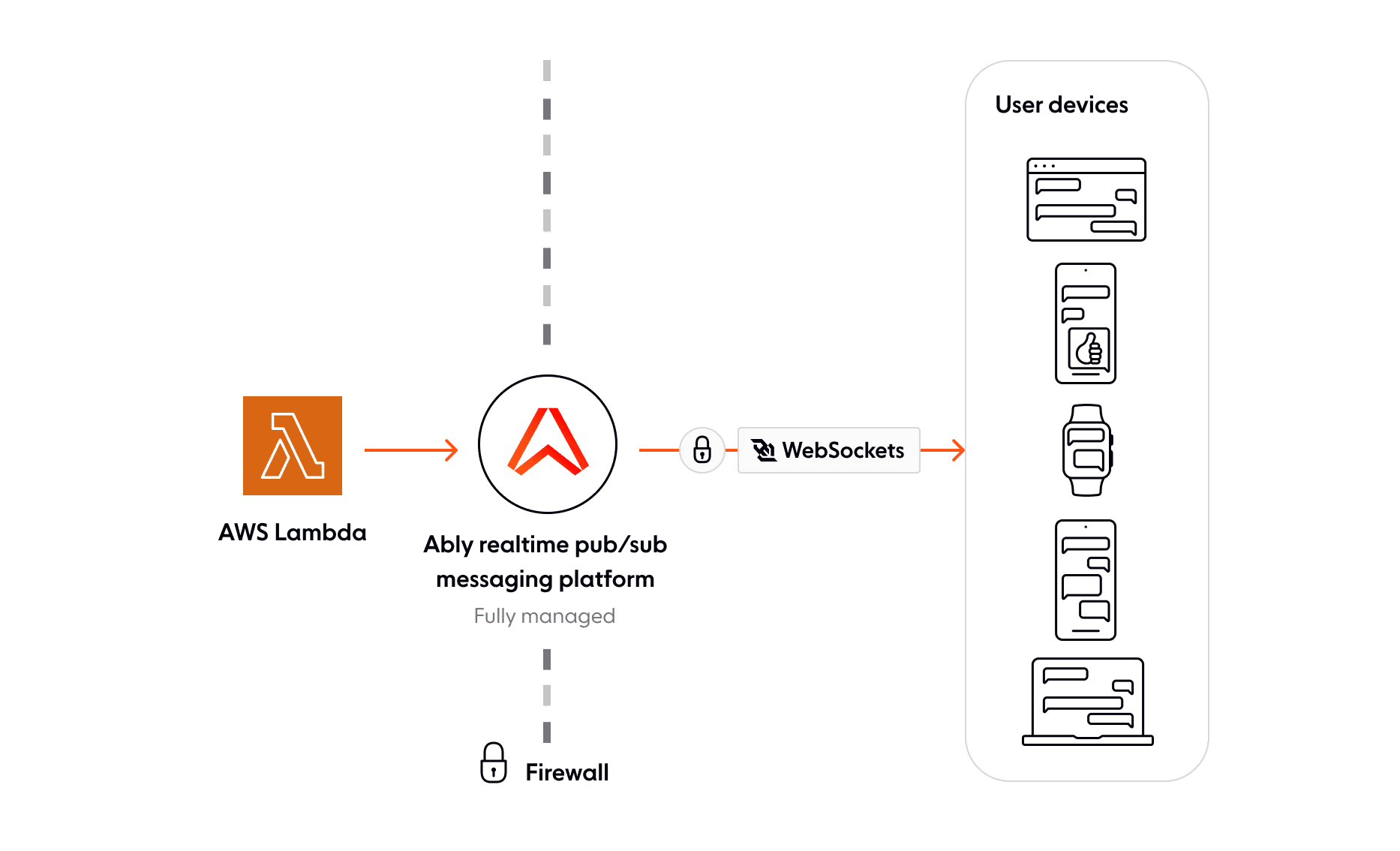

Because Lambda functions are short-lived and stateless, however, they can’t hold long-lived WebSocket connections to clients themselves. We need an intermediary service that handles the fan-out and fan-in of connections, much like a load balancer, and decouples those connections from the backend Lambda functions.

This sort of service, which distributes messages to many connections, typically uses a pattern known as the Publish/Subscribe (Pub/Sub) pattern. The pattern separates ‘publishers’ (clients sending messages) from ‘subscribers’ (clients that have indicated they wish to receive messages) by means of one or more broker servers. A publisher only needs to know where to send its message; the broker then delivers it to every client that has subscribed to receive it.
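To make the pattern concrete, here is a minimal in-memory sketch. The `Broker` class and its methods are hypothetical and only stand in for a hosted broker such as Ably; a real broker also manages network connections, delivery guarantees, and scale, none of which this sketch attempts:

```javascript
// Hypothetical in-memory broker illustrating Publish/Subscribe.
class Broker {
  constructor() {
    this.channels = new Map(); // channel name -> Set of subscriber callbacks
  }

  // Register interest in a channel. Returns a function that unsubscribes.
  subscribe(channel, callback) {
    if (!this.channels.has(channel)) this.channels.set(channel, new Set());
    this.channels.get(channel).add(callback);
    return () => this.channels.get(channel).delete(callback);
  }

  // Deliver a message to every current subscriber of the channel (fan-out).
  // Returns how many subscribers received it.
  publish(channel, message) {
    const subscribers = this.channels.get(channel) ?? new Set();
    for (const callback of subscribers) callback(message);
    return subscribers.size;
  }
}

const broker = new Broker();
const received = [];
broker.subscribe("chat:room-1", (msg) => received.push(`alice: ${msg}`));
const unsubscribeBob = broker.subscribe("chat:room-1", (msg) => received.push(`bob: ${msg}`));

broker.publish("chat:room-1", "hello"); // delivered to alice and bob
unsubscribeBob();
broker.publish("chat:room-1", "bye"); // delivered to alice only
console.log(received);
```

The publisher never references subscribers directly, so clients can join or leave without any change on the publishing side; that decoupling is what the pattern is about.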

With this pattern, our end-user devices stay connected to the Pub/Sub broker via WebSockets, ready to send and receive messages, and the broker can forward those messages to our Lambda function.

WebSockets + Lambda example: A chat application

As an example of why these tools together are so powerful, consider a chat application that has a room with 100,000 members in it, which is realistic for some of the larger communities on popular chat apps such as Discord. When a user publishes a message to the chat room, it must:

- Be checked for inappropriate content and have media inserted where appropriate

- Be persisted for future retrieval by users, in a database service such as Aurora

- Be distributed to the 100,000 members in the chat room

Each of these steps calls for quite different tools. Step 1 requires compute power to run the appropriate checks and changes.

Step 2 requires writing to a database. Lambda allows both this computation and the database access to scale on demand.

Step 3 requires a large amount of compute for bookkeeping: tracking who is currently ‘connected’ to the room and therefore needs updates, who has received which updates, and how to handle connection issues, all while ensuring that messages are neither duplicated nor, even worse, silently lost. Clients should also be able to resume a dropped connection and retrieve the messages they missed without going back to your database, which avoids unnecessary load.
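A back-of-the-envelope calculation shows why step 3 is so demanding. Assume, purely for illustration, that the room’s members publish a combined 5 messages per second, each of which must reach all 100,000 members:

$$
5\ \frac{\text{messages}}{\text{s}} \times 100{,}000\ \frac{\text{deliveries}}{\text{message}} = 500{,}000\ \frac{\text{deliveries}}{\text{s}}
$$

That is half a million deliveries every second, each of which has to be tracked and, if a connection drops, recovered.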

Step 3 is the domain of Pub/Sub brokers. By handling the distribution of data, a broker greatly simplifies the interactions with your own backend services. It provides the guarantees and functionality you’d eventually expect and need from communication with client devices, and it can remove much of the potential load by acting, in effect, as a cache for realtime data.
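To illustrate the resume-and-cache idea, here is a minimal, hypothetical sketch: each message on a channel gets an increasing serial number, the broker keeps a bounded history, and a reconnecting client asks for everything after the last serial it saw. The class and function names are assumptions for illustration only; Ably and other brokers implement this with their own protocols:

```javascript
// Hypothetical per-channel history with serial numbers, kept by the broker.
class ChannelHistory {
  constructor(limit = 100) {
    this.limit = limit; // how many recent messages to retain
    this.messages = []; // [{ serial, data }], oldest first
    this.nextSerial = 1;
  }

  // Store a message, evicting the oldest one if over the limit.
  append(data) {
    const entry = { serial: this.nextSerial++, data };
    this.messages.push(entry);
    if (this.messages.length > this.limit) this.messages.shift();
    return entry.serial;
  }

  // Everything after lastSeenSerial, or null if some of the missed messages
  // have already been evicted (the client must then do a full reload).
  since(lastSeenSerial) {
    const oldest = this.messages.length ? this.messages[0].serial : this.nextSerial;
    if (lastSeenSerial < oldest - 1) return null;
    return this.messages.filter((m) => m.serial > lastSeenSerial);
  }
}

// Client side: ignore serials already seen, so overlapping replays never duplicate.
function applyIncoming(state, entry) {
  if (entry.serial <= state.lastSeen) return false; // duplicate, skip it
  state.lastSeen = entry.serial;
  state.log.push(entry.data);
  return true;
}

const history = new ChannelHistory(3);
["a", "b", "c", "d"].forEach((m) => history.append(m)); // serial 1 ("a") is evicted

// A client that disconnected after serial 2 catches up from the broker's cache.
const client = { lastSeen: 2, log: ["a", "b"] };
for (const entry of history.since(client.lastSeen)) applyIncoming(client, entry);
console.log(client.log);
```

Only when a client has been away longer than the retained history covers does `since` return `null` and force a fall back to the database, which is how the broker keeps most of that load off your backend.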

Demo: Making a chat app with Lambdas and WebSockets

To demonstrate these patterns, we’ll create a basic chat application. An AWS Lambda function will be responsible for applying a filter to messages sent to it. Clients will communicate over WebSockets with a Pub/Sub broker, which will in turn pass their messages to the Lambda function. As our Pub/Sub broker we’ll use Ably, a realtime messaging broker built to handle massive scale, which will take care of the fan-in and fan-out of data over WebSockets to end-users.

Creating an AWS account

The first thing you’ll need is an AWS account in which to create our Lambda function.

If you don’t have an AWS account, create a free one here.

Lambda

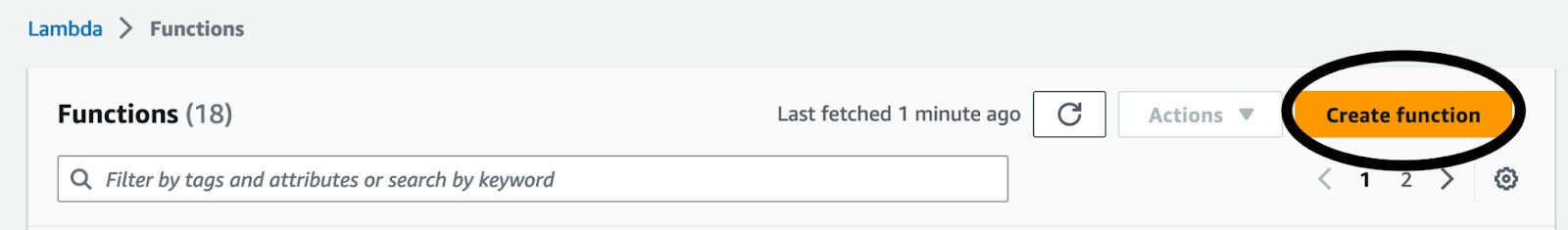

With your AWS account created, go to the Lambda functions page. Click the ‘Create function’ button.

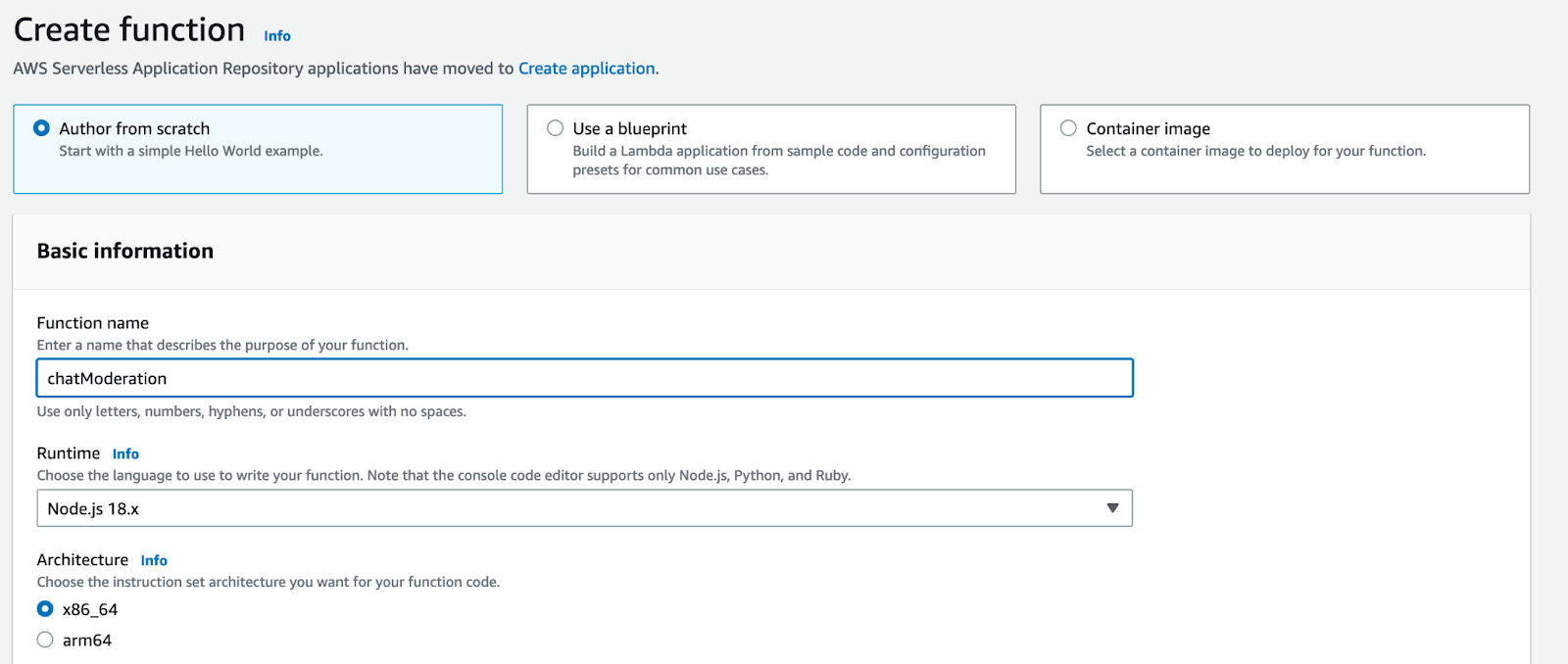

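On the creation page, make sure you have ‘Author from scratch’ selected, and call the function something like ‘chatModeration’. Keep a recent Node.js runtime selected: the code below uses ES module syntax (`import`/`export`), which matches the `index.mjs` file the console creates for recent Node.js runtimes. Once that’s done, select ‘Create function’ at the bottom of the page.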

You should be redirected to the overview page of your new function. In the code section on this page, put in the following code:

```javascript
// We'll need the Node.js HTTPS module to post the filtered message back to Ably
import https from "https";

// -----------------------------------------
const ablyApiKey = "INSERT_YOUR_ABLY_KEY_HERE";
// -----------------------------------------

// Words to star out; replaceAll() requires the "g" flag on a regex
const BANNED_WORDS = /(potato)|(cabbage)/g;

export const handler = async (event) => {
  // The Function URL delivers the Ably webhook payload as a JSON string in event.body
  const ablyMsg = JSON.parse(event.body);
  const data = ablyMsg.messages[0].data;

  // Replace each banned word with the same number of asterisks
  const filteredMsg = data.replaceAll(BANNED_WORDS, (match) => "*".repeat(match.length));

  // Publish the filtered message to the channel our clients subscribe to
  const channel = "chat:to-clients";
  const ablyStatus = await postMessage(channel, filteredMsg);

  // Return the filtered message to the caller, along with Ably's HTTP status
  return {
    statusCode: 200,
    body: JSON.stringify({ message: filteredMsg, ablyStatus })
  };
};

async function postMessage(channel, message) {
  // Prepare the data to post to Ably's REST API
  const data = JSON.stringify({ name: "message", data: message });

  const options = {
    host: "rest.ably.io",
    port: 443,
    path: `/channels/${encodeURIComponent(channel)}/messages`,
    method: "POST",
    headers: {
      Authorization: `Basic ${Buffer.from(ablyApiKey).toString("base64")}`,
      "Content-Type": "application/json",
      "Content-Length": Buffer.byteLength(data)
    }
  };

  return new Promise((resolve, reject) => {
    const req = https.request(options, (res) => {
      res.resume(); // discard the response body so the socket is released
      resolve(res.statusCode);
    });

    // Handle network errors
    req.on("error", (e) => reject(e));

    // Send the body and finish the request
    req.write(data);
    req.end();
  });
}
```

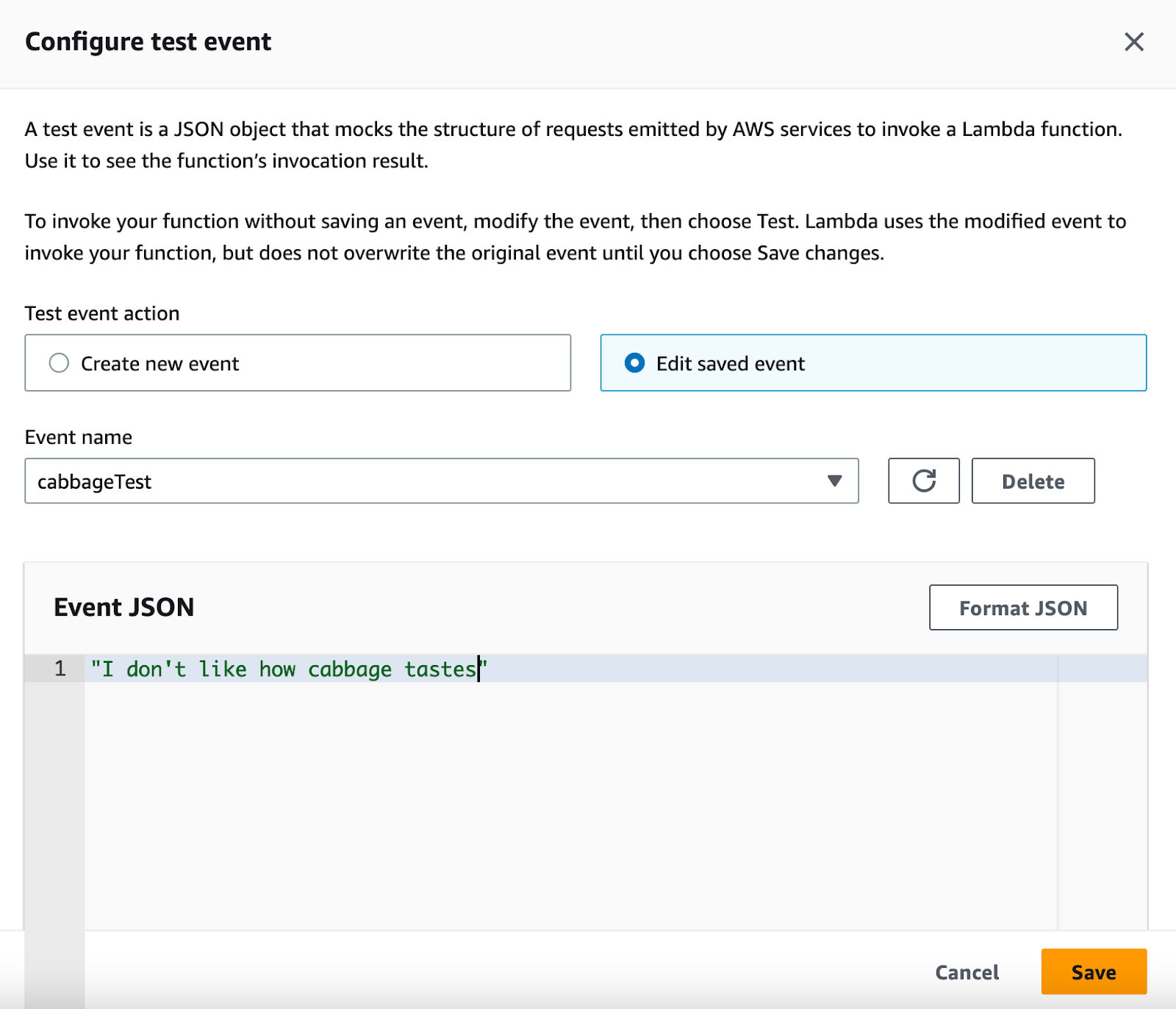

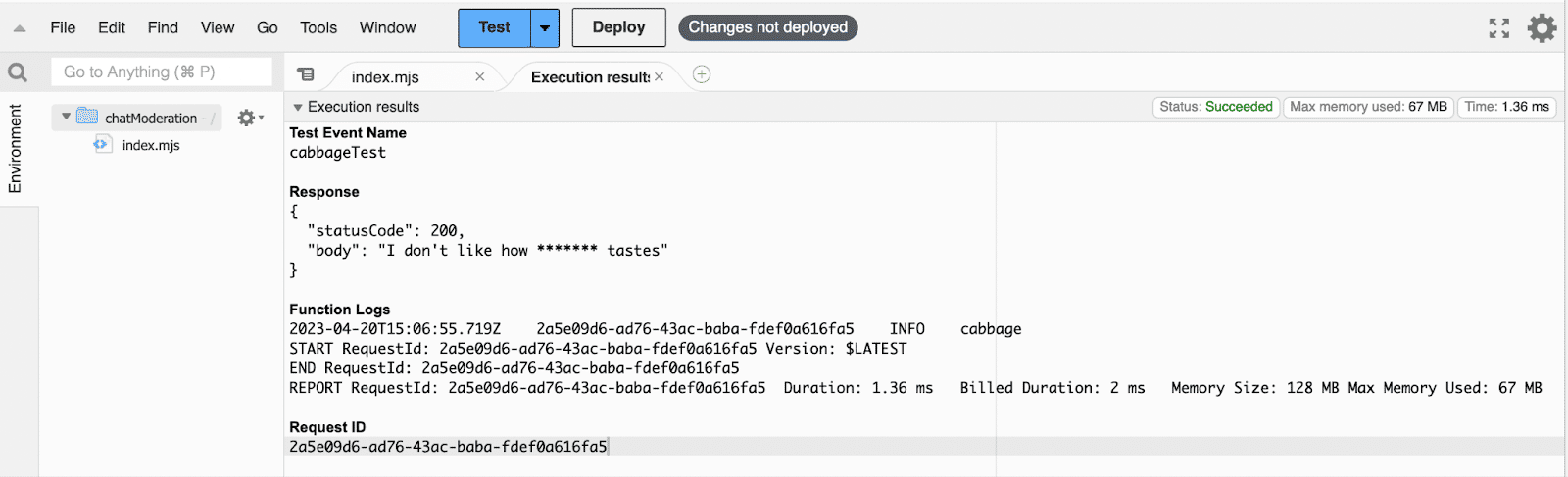

This code receives a message, applies our filter (checking for ‘potato’ and ‘cabbage’), and stars out any occurrences. It then publishes the filtered message to a different Ably channel, chat:to-clients, for our clients to receive, and returns the filtered message to the caller. Select ‘Deploy’ to make this code live, and then set up a test with the ‘Test’ button. Give the test event a name, such as cabbageTest. Because the handler reads an Ably message from `event.body`, the event JSON needs to mimic that shape, for example `{"body": "{\"messages\": [{\"data\": \"I don't like how cabbage tastes\"}]}"}`. Then select ‘Save’.

Now, click ‘Test’ again to run our test input. The response body should contain the message with ‘cabbage’ replaced by ‘*******’. Until you add your Ably API key (which we’ll get shortly), `ablyStatus` will report an error status, but the filtering itself still works.

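We also want to make an endpoint for Ably to communicate with. In the Configuration tab, select Function URL, then select Create function URL, and for this demo set the Auth type to None. This makes the URL callable by anyone who knows it, which is fine for a demo but not for production. Make a note of the generated URL.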

Setting up the Pub/Sub broker

With our Lambda function ready to receive messages, we now need to set up our Pub/Sub message broker. In this demo we’ll be using Ably to handle this for us. You’ll need to create a free account if you don’t have one already.

Once you have an account, you can go to your account dashboard, and create a new app. You can call it anything you like, preferably something easily identifiable such as ‘Lambda Chat app’.

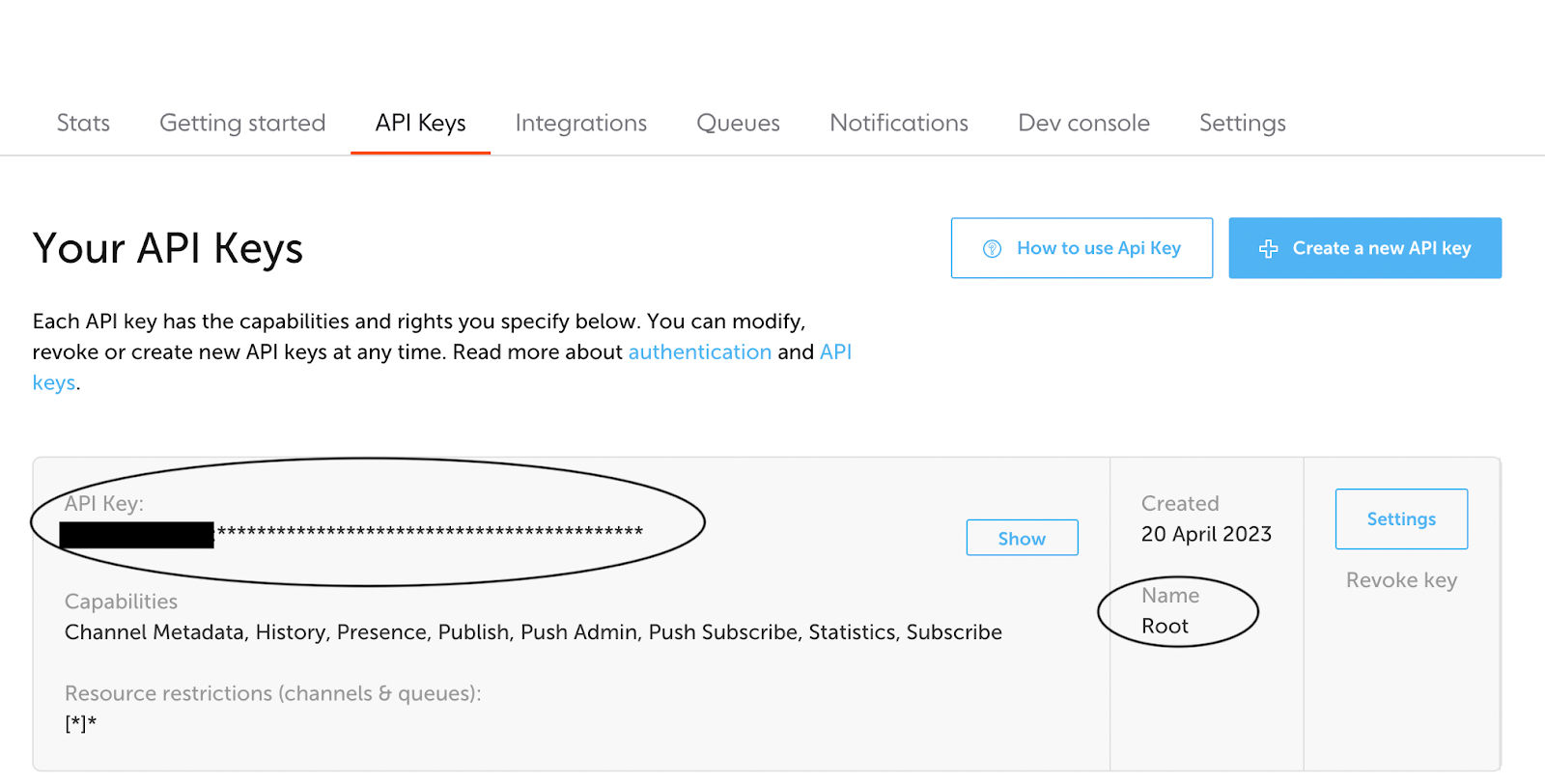

Once you have your new app, you should go to the API Keys tab of the app. API keys are used to authenticate with Ably and this specific app. Make a note of the ‘Root’ key there. We will use this from the Lambda function to reply with our filtered messages. We will also use it to generate Ably Tokens which will be used by our clients to authenticate with Ably.

We give users Ably Tokens rather than the raw API key so that it’s easier to control each user’s permissions and revoke their access. If we handed the API key to untrusted users, they would have every permission the key grants, and the only way to cut off a single user would be to revoke the key for everyone.

Insert this API key into your existing Lambda code as the value of `ablyApiKey`, and deploy the function again.

With the API key obtained, next go to the ‘Integrations’ tab. This is where we can configure endpoints, such as our Lambda function, that receive messages published to our Ably app.

On this page, select ‘New integration rule’, ‘Webhook’, then ‘Webhook’ again. On the setup page, paste in your Lambda’s Function URL, and set the Channel Filter to ‘chat:from-clients’.

After creating this Rule, any messages which go into the chat:from-clients channel will be sent on to our new Lambda.
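Ably describes the channel filter as a regular expression matched against channel names. Assuming it behaves like a JavaScript regular expression (an assumption made for this illustration), an unanchored pattern such as `chat:from-clients` also matches any longer channel name containing it, whereas `^chat:from-clients$` matches only the exact channel. The extra channel names below are hypothetical:

```javascript
// Compare an unanchored channel filter with an anchored one.
const unanchored = /chat:from-clients/;
const anchored = /^chat:from-clients$/;

// Hypothetical channel names that might exist in the same app.
const channels = [
  "chat:from-clients",
  "chat:from-clients-archive",
  "admin:chat:from-clients",
  "chat:to-clients",
];

for (const name of channels) {
  console.log(name, "unanchored:", unanchored.test(name), "anchored:", anchored.test(name));
}
```

If you only ever want this one channel forwarded to the Lambda function, the anchored form avoids surprises as the app grows.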

Creating the client-side chat app

Now we need a chat app for our users to use. We will run this locally to keep it simple. We’ll make it an Express server, with a single page for our users to chat on.

Create a new folder for this app locally, and run `npm init` inside it. Call it whatever you want, and set the entry point to ‘server.js’.

Next, run `npm install ably express dotenv` to install Ably, Express, and dotenv.

We’re using dotenv so that we can keep our API key in a .env file. Create a ‘.env’ file in the base of the directory, and add `ABLY_API_KEY=YOUR_ABLY_API_KEY`, replacing YOUR_ABLY_API_KEY with your Ably API key.

Create the server.js file at the base of your directory, and fill it with the following:

```javascript
const crypto = require("crypto");
const Ably = require("ably");
const express = require("express");
require("dotenv").config(); // load ABLY_API_KEY from .env into process.env

// Ably
const FROM_CLIENT_CHANNEL_NAME = "chat:from-clients";
const TO_CLIENT_CHANNEL_NAME = "chat:to-clients";
const API_KEY = process.env.ABLY_API_KEY;

// The server only signs token requests, so a REST client is enough
// (no persistent connection to Ably is needed)
const ably = new Ably.Rest({ key: API_KEY });

/* Express */
const app = express();
const port = 3000;

/* Server */
// Serve the chat page from the 'public' folder
app.use(express.static("public"));

// Issue token requests to clients sending a request to the /auth endpoint
app.get("/auth", async (req, res) => {
  const tokenParams = {
    // Clients may only publish to the inbound channel and subscribe to the outbound one
    capability: {
      [FROM_CLIENT_CHANNEL_NAME]: ["publish"],
      [TO_CLIENT_CHANNEL_NAME]: ["subscribe"]
    },
    // Give each client a random, unique ID
    clientId: `comp-${crypto.randomUUID()}`
  };

  console.log("Sending signed token request:", JSON.stringify(tokenParams));

  try {
    // Assumes ably-js v2, whose API is Promise-based (v1 used callbacks here)
    const tokenRequest = await ably.auth.createTokenRequest(tokenParams);
    res.json(tokenRequest);
  } catch (err) {
    res.status(500).send(`Error requesting token: ${JSON.stringify(err)}`);
  }
});

app.listen(port, () => {
  console.log(`Listening on port ${port}`);
});
```

Here, our Express app effectively exposes two things: the static content of a ‘public’ folder, and an /auth endpoint that generates signed Ably Token Requests, which our clients exchange for Ably Tokens. It’s important for clients to only ever use Tokens rather than an API key, so that they’re limited to specific channels and actions and their access can be revoked. Each client is also given a random unique ID to identify it.
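To make the capability idea concrete, here is a simplified, hypothetical checker for a capability object shaped like the one built in `/auth`, which maps channel names to permitted operations. It is not Ably’s implementation: Ably evaluates capabilities on its own servers and supports more, such as wildcards in channel names. This sketch handles only exact channel names and the `"*"` operation wildcard:

```javascript
// The same shape as the capability object issued by the /auth endpoint.
const capability = {
  "chat:from-clients": ["publish"],
  "chat:to-clients": ["subscribe"],
};

// Hypothetical check: is `operation` allowed on `channel`?
function isAllowed(capability, channel, operation) {
  // Only consider channels listed directly on the capability object.
  if (!Object.prototype.hasOwnProperty.call(capability, channel)) return false;
  const operations = capability[channel];
  return operations.includes(operation) || operations.includes("*");
}

console.log(isAllowed(capability, "chat:from-clients", "publish")); // true
console.log(isAllowed(capability, "chat:to-clients", "publish")); // false
console.log(isAllowed(capability, "chat:to-clients", "subscribe")); // true
```

With these capabilities a client can publish to `chat:from-clients` but not to `chat:to-clients`, so it cannot skip the moderation step: every message other users receive has been published by the Lambda function.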

We still need the main page that users chat on, so create a folder called ‘public’, and create a file index.html in it. Add the following to that file:

This makes use of an existing Ably Chat widget to keep the UI simple. The page subscribes to the chat:to-clients channel and publishes to chat:from-clients.

Running the server

Now that we’ve written our code, we can run the server:

```shell
npm start
```

With no `start` script in package.json, npm runs `node server.js`.

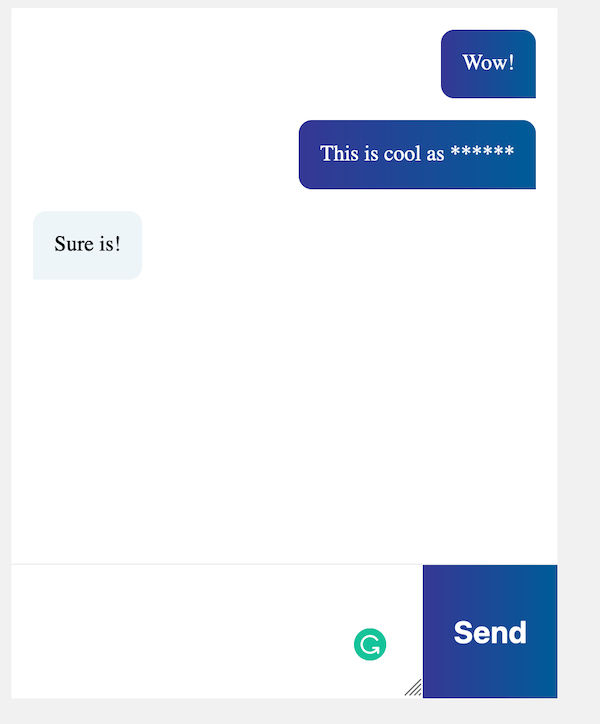

The server will be accessible on port 3000 (http://localhost:3000). If you open it in two browser windows, you should be able to chat between them, with any banned words starred out.

Conclusion

Lambdas give us a scalable backend for processing data, and WebSockets provide low-latency, bidirectional communication with clients. Combined with Ably to handle communication between devices and the backend, they form a scalable solution for problems that require realtime interaction.

In this case we’ve demonstrated the basics of a chat application, but there’s a lot more that can be done even just for chat. Typing indicators, multiple chat rooms, and media insertion are all features users expect from realtime chat applications, and they all build on the same model: data such as a typing event is communicated through a solution such as Ably, and backend Lambda functions apply whatever computation it needs.
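As one small illustration of the typing-indicator idea, a client would typically avoid publishing an event on every keystroke. The sketch below is hypothetical: `createTypingNotifier` is not an Ably API, and `publish` stands in for whatever actually sends the event, such as a channel publish. It throttles notifications to at most one per interval, with an injectable clock so the behavior can be checked without real timers:

```javascript
// Send at most one "typing" event per intervalMs, however fast the user types.
function createTypingNotifier(publish, intervalMs = 2000, now = Date.now) {
  let lastSent = -Infinity;
  return function onKeystroke() {
    const t = now();
    if (t - lastSent >= intervalMs) {
      lastSent = t;
      publish({ name: "typing" });
      return true; // event sent
    }
    return false; // suppressed: too soon after the previous event
  };
}

// Drive it with a fake clock instead of real time.
let fakeTime = 0;
const sent = [];
const onKeystroke = createTypingNotifier((event) => sent.push(event), 2000, () => fakeTime);

onKeystroke(); // t = 0 ms: sent
fakeTime = 500;
onKeystroke(); // t = 500 ms: suppressed
fakeTime = 2500;
onKeystroke(); // t = 2500 ms: sent again
console.log(sent.length); // 2
```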

You can find all the code used in this guide on GitHub.