This is one of a series of posts that explain Ably’s four pillars of dependability. The four pillars project at Ably is about making concrete, objectively verifiable statements about the technical characteristics of the service. We aim to ensure that our claims about service performance are expressed clearly in terms of explicit metrics, and we explain in technical terms how those performance levels are met.

In this post, we will look at scalability. This refers to the ability of a system to change in size or scale, usually to be able to handle workloads that vary in size. In particular, we’ll be looking at how Ably’s platform achieves scalability, and how, as a result, there’s no effective ceiling on the scale of applications that can be supported.

Why does scalability matter?

Obviously, a service must be able to handle the scale demands of any application using it; for example, if the application has to support a very large volume of users simultaneously, or a large volume of messages, or has significant scale on some other dimension. Even if the application developer doesn’t have those scale needs right now, they will usually want to develop the application to be capable of future scale without the need for redesign.

New applications that are successful in today’s massively-connected world see significant rates of adoption, and generate requirements for scale that would be impossible to keep up with if they were not implemented, from the outset, on scalable platforms. Even just a few years ago, there were only a handful of online applications and services that had millions of simultaneous users; but now, since nearly every aspect of commerce and entertainment has moved into an online and connected experience, mass scale is a basic expectation of every application. Virality means that applications can go from launch to millions of users in days, instead of years. Scalable infrastructure is an essential element of any application that aspires to be successful by contemporary standards.

However, scalable platforms aren’t only important to applications that have specific scale requirements; scalability is intrinsic to the design of modern cloud services. The promise of these “serverless” platforms is that a service provider takes care of managing backend infrastructure, so that product and engineering teams can focus on delivering their product and its specific business value instead of having to commit effort to infrastructure and operations.

At first sight, it would be easy to assume that serverless providers are simply doing the same job that in-house SRE teams would otherwise be doing - provisioning infrastructure, managing capacity and availability, monitoring, etc. - but the reality is that serverless infrastructure is completely different in nature from the in-house managed infrastructure that came before it.

The significance of the move to serverless is that it isn’t simply moving responsibility for management to a service provider - it’s about moving responsibility for managing scale to the platform itself. The technology takes over what the SRE team did before. Instead of having to project and plan for compute resources, the platform is designed so that planning isn’t needed; it is inherently scalable. It is elastic so that it scales automatically in response to changing load; the platform provisions the resources on demand, but then distributes the work across that changing infrastructure so that it works efficiently, and with fault tolerance. Individual engineering teams are absolved of the responsibility, not only for managing their infrastructure at scale, but for architecting a system capable of that scale.

This is why scalability matters - along with elasticity and fault tolerance, it is an essential part of modern cloud platform architectures, enabling the problem of managing scale to be moved from the application developer to the platform.

Vertical and horizontal scalability

There are broadly two strategies for achieving scalability:

Vertical scalability is an approach to scaling whereby larger problems are tackled by using larger components. Real-world examples of vertical scaling would be building larger aircraft to carry more passengers, or building apartment blocks with more stories to accommodate more apartments. In computing, vertical scaling typically involves deploying server instances with more CPU cores, or memory, or larger disks. Vertical scaling has served the industry very well - each time the processing power of a CPU doubles, everyone benefits from a vertical scaling effect, and the power of their application also doubles with no additional architectural effort. But it has some limitations, which horizontal scaling mitigates.

Horizontal scalability is the approach to scaling systems by having more components instead of larger ones, going beyond what’s possible through vertical scaling. Eventually you can’t keep making larger aircraft; you have to fly more of them. Cities grow sideways as well as upwards. Vertical scaling can only provide a certain level of scale, and it’s unable to be dynamic (or “elastic”). Horizontal scalability is therefore the only approach that supports the arbitrary and elastic scalability needed by platforms like Ably.

Challenges of horizontally scaling resources

Based on the definition of horizontal scalability above, it’s tempting to imagine that it can be achieved simply by having the ability to replicate some resource. You can transport an arbitrary number of passengers simply by having an unlimited number of aircraft, or a website can handle an arbitrary number of visitors simply by having an unlimited number of web servers.

Of course, it’s not enough just to have unlimited resources - you have to direct requests to those resources effectively. Passengers need to be assigned to flights so they aren’t all trying to board the same aircraft. A website needs a load balancer to evenly distribute requests to the available web servers. The resources will also have other resources as dependencies: the fleet of web servers will need coordinated energy and networking resources, and the aircraft will have to operate in a shared (and therefore managed) airspace.

However, even this is not the full story. The examples above still assume that the replicated resources can operate relatively independently, but in most cases this isn’t the reality. The consumers of a service don’t necessarily consume some independent slice of that service; they’re there to interact with many other consumers in some way. The resources serving each consumer therefore cannot be fully independent.

At Ably, a key example of this is when there are many subscribers to a single live event. There might be millions of subscribers, and therefore millions of connections. Provided that sufficient capacity exists, and that routing of connections maintains a balanced load across all instances, then the service can absorb an unlimited number of individual connections. But all of the subscribers are subscribed to the same channel, and messages on that channel need to be delivered to every connection. Now the replicated resources need to be interconnected in a way that can handle the delivery of individual messages to an arbitrary number of connections.

How does Ably achieve scalability?

In the context of Ably, we are concerned with a few dimensions of scale:

- Maximum number of channels: This metric is the number of channels that can be used simultaneously by a single application. The channel is Ably’s unit of message handling. Ably can scale horizontally on the number of channels, so there is no technical limit on the number of channels.

- Maximum number of connections: This metric is the number of connections that can exist simultaneously for a single application. It relates to connections that can communicate with one another through one or more shared channels. Ably can scale horizontally on the number of connections, so there is no technical limit on the number of connections.

- Maximum message throughput: This metric relates to the total volume of messages Ably can process for a single application at any given moment. This includes the aggregate rate of all inbound messages published into Ably, and the aggregate rate of outbound messages delivered to subscribers and other consumers via integrations. Aggregate message rate is also a dimension on which Ably can scale horizontally, so there is no technical limit on the aggregate rate for an application.

In the live event example, Ably scales horizontally on two of these key dimensions, and these each correspond to an independently scalable layer of the architecture:

- Connections: There can be an unlimited number of connected clients, which are handled by a horizontally-scalable layer of “frontend” processes. These processes handle REST requests as well as realtime (WebSocket and Comet) connections.

- Channels: Similarly, there can be an unlimited number of channels, and these are processed in a horizontally-scalable layer of “core” processes.

These each scale independently in each region according to demand. Ably is based on cloud infrastructure, which effectively provides unlimited on-demand compute capacity in each region.

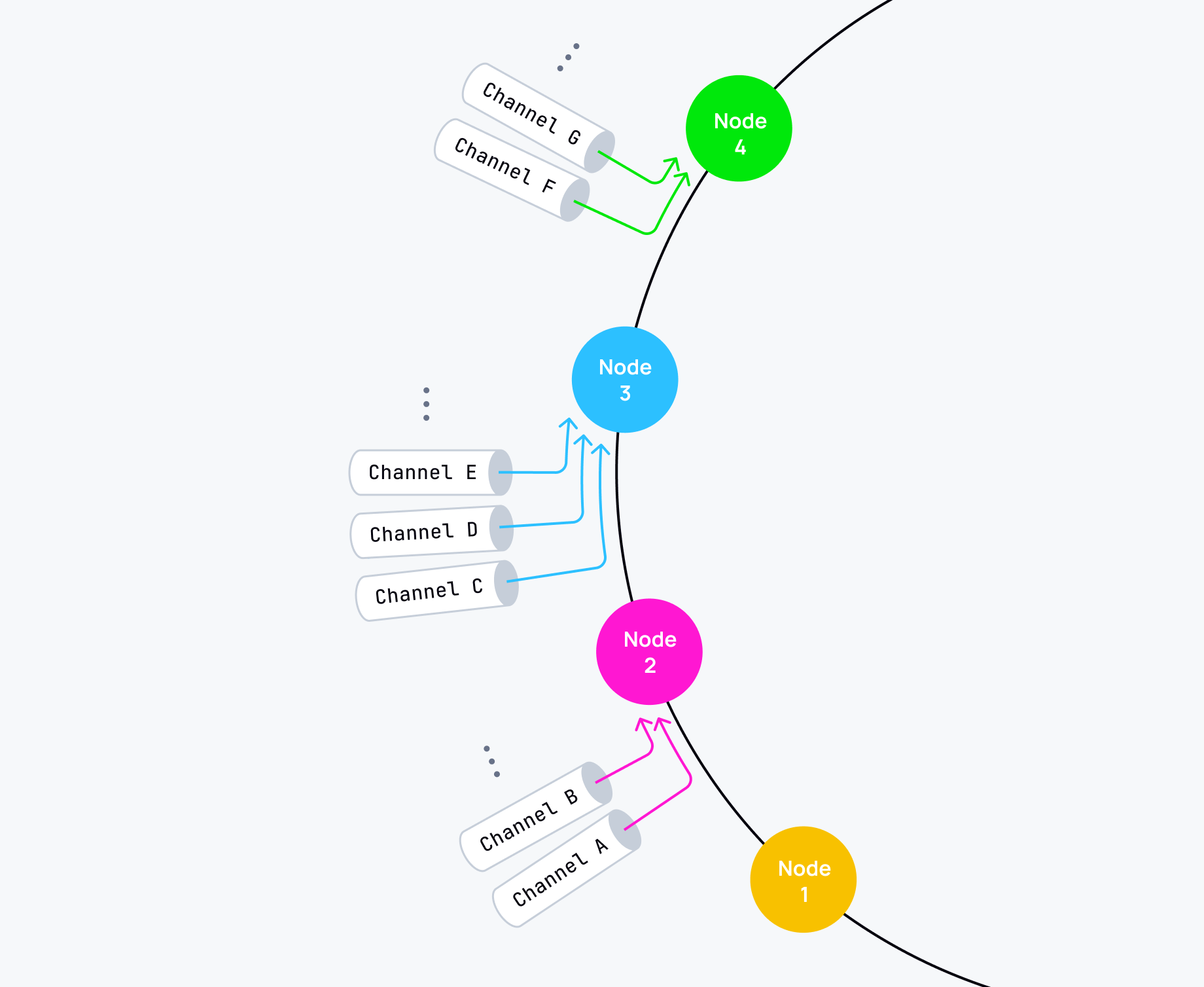

Scaling channels

Channels are the key building block of Ably's service. Internally, the channel is where Ably performs the core processing per message. Message persistence is organized on a per-channel basis, as is integration processing.

Ably achieves horizontal scalability in each of these dimensions by placing load on the cluster in a way that can take advantage of the available capacity. The challenge here isn’t so much distributing load among a static set of resources; because the resources are elastic, load must also be placed effectively as the available capacity changes.

Channel processing is stateful, and Ably uses consistent hashing to distribute work across the available core compute capacity. Each compute instance within the core layer has a set of pseudo-randomly generated hashes, and hashing determines the location of any given channel. As a cluster scales, channels relocate to maintain an even load distribution. Any number of channels can exist, as long as sufficient compute capacity is available. Whether there are many lightly-loaded channels or many heavily-loaded ones, scaling and placement strategies ensure capacity is added as required and load is effectively distributed.
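The placement scheme described above can be illustrated with a minimal consistent-hashing sketch. This is not Ably’s implementation: the `HashRing` class, the use of SHA-256, the instance names, and the number of ring points per instance are all assumptions chosen for illustration. In particular, the ring points here are derived deterministically from the instance name rather than generated pseudo-randomly.

```python
import bisect
import hashlib


def ring_hash(key: str) -> int:
    """Map a string to a 64-bit position on the ring (SHA-256 is an assumption)."""
    return int.from_bytes(hashlib.sha256(key.encode("utf-8")).digest()[:8], "big")


class HashRing:
    """Toy consistent-hash ring: every instance owns several points on the ring."""

    def __init__(self, points_per_instance: int = 64) -> None:
        self.points_per_instance = points_per_instance
        self._points = []   # sorted ring positions
        self._owner = {}    # ring position -> instance name

    def add_instance(self, name: str) -> None:
        # Hypothetical scheme: derive each point from the instance name and an index.
        for i in range(self.points_per_instance):
            point = ring_hash(f"{name}#{i}")
            self._owner[point] = name
            bisect.insort(self._points, point)

    def remove_instance(self, name: str) -> None:
        self._points = [p for p in self._points if self._owner[p] != name]
        self._owner = {p: o for p, o in self._owner.items() if o != name}

    def locate(self, channel: str) -> str:
        """Return the instance owning the first ring point at or after the channel's hash."""
        if not self._points:
            raise LookupError("no instances in the ring")
        idx = bisect.bisect_left(self._points, ring_hash(channel)) % len(self._points)
        return self._owner[self._points[idx]]


# Example usage with made-up instance and channel names.
ring = HashRing()
for name in ("core-a", "core-b", "core-c"):
    ring.add_instance(name)
location = ring.locate("live-event")
```

The useful property of this approach is that adding an instance relocates only the channels that now fall to the new instance; every other channel stays where it was, which keeps relocation proportional to the change in capacity.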

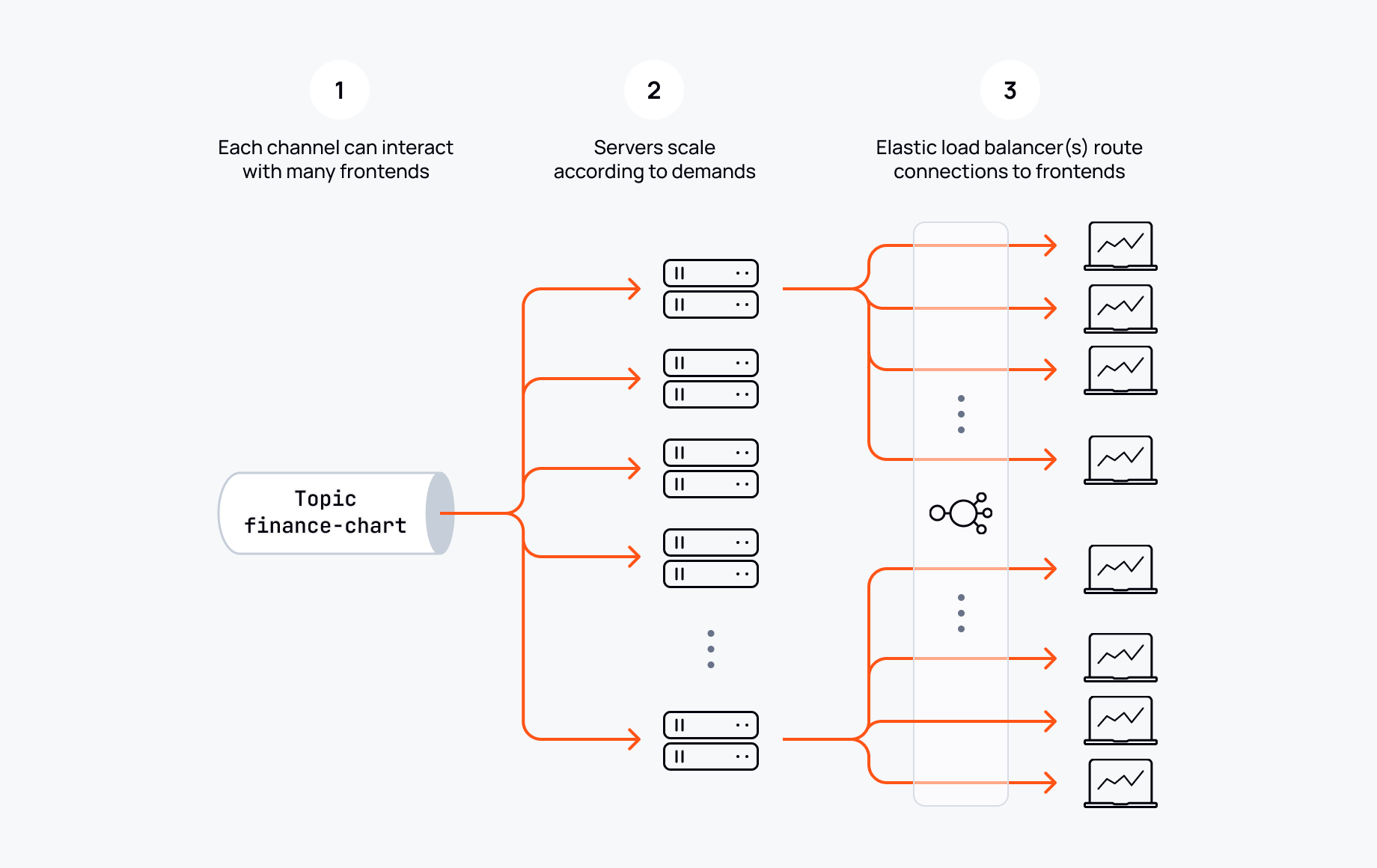

Scaling connections

In contrast to channel processing, connection processing is stateless; this means that connections can be freely routed to any frontend server without impacting functionality. In this case, Ably uses a load balancer to distribute work and decide where to terminate each connection. The load balancer uses a combination of factors to determine placement, combining simple random allocation with some prioritization based on instantaneous load factors. Ably also performs a low level of background shedding to force the relocation of connections to maintain balanced load. Provided that sufficient capacity exists, and that routing of connections maintains a reasonably balanced load across all instances, then the service can absorb an unlimited number of individual connections.
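One well-known way to combine random allocation with load-based prioritization is the “power of two choices” technique: sample a small number of candidates at random, then pick the least loaded of them. The sketch below uses that technique purely as an illustration; it is not a description of Ably’s load balancer, and the `pick_frontend` function, the frontend names, and the load values are all hypothetical.

```python
import random
from typing import Mapping


def pick_frontend(loads: Mapping[str, float], rng: random.Random, samples: int = 2) -> str:
    """Sample a few frontends at random, then choose the least loaded of the sample."""
    if not loads:
        raise ValueError("no frontends available")
    # Random allocation: choose a small random subset of candidates.
    candidates = rng.sample(sorted(loads), k=min(samples, len(loads)))
    # Load prioritization: prefer the candidate with the lowest current load.
    return min(candidates, key=lambda name: loads[name])


# Example usage with made-up load factors between 0 and 1.
current_loads = {"fe-1": 0.20, "fe-2": 0.50, "fe-hot": 0.95}
chosen = pick_frontend(current_loads, random.Random())
```

With two samples, the most heavily loaded frontend is never chosen while at least one other frontend exists, yet placement remains mostly random and needs no global coordination.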

The main challenge associated with handling many connections is accommodating high-scale fanout: the situation in which a very large number of connections are all attached to a common set of channels. When a message is published on a channel, it must be forwarded to an arbitrary number of client connections. Although core (channel) message processing occurs in a single place, messages need to be routed to the large set of frontend instances that are terminating the set of client connections. The need for fanout in this way is where the simplistic view of “horizontal scalability via replication” breaks down.

Ably addresses this by having separate core and frontend layers. A channel processing a message disseminates the message to all frontends that have connections subscribed to that channel, and each frontend then forwards the message to each of its subscribed connections. A channel will also disseminate processed messages to the corresponding channels in every other region in which the channel is active. Knowing which frontends, and which other regions, have an interest in a channel requires additional state to be maintained for that channel.
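The two tiers of fanout within a region can be sketched as follows. This is a simplified, in-memory model under stated assumptions: the `Channel` and `Frontend` classes and their methods are hypothetical names, delivery is recorded in a list rather than sent over a network, and cross-region dissemination is left out.

```python
from collections import defaultdict


class Frontend:
    """Second tier: forwards a channel message to each locally subscribed connection."""

    def __init__(self, name):
        self.name = name
        self.subscriptions = defaultdict(set)  # channel name -> connection ids
        self.delivered = []                    # (connection id, channel name, message)

    def deliver(self, channel_name, message):
        for connection_id in sorted(self.subscriptions.get(channel_name, ())):
            self.delivered.append((connection_id, channel_name, message))


class Channel:
    """First tier: sends each message once to every frontend with an interest."""

    def __init__(self, name):
        self.name = name
        self.interested = {}  # frontend name -> Frontend (the per-channel state)

    def attach(self, frontend, connection_id):
        frontend.subscriptions[self.name].add(connection_id)
        self.interested[frontend.name] = frontend

    def publish(self, message):
        for frontend in self.interested.values():
            frontend.deliver(self.name, message)
        return len(self.interested)  # number of frontend-level sends


# Example usage: three connections spread over two frontends.
fe1, fe2 = Frontend("fe-1"), Frontend("fe-2")
channel = Channel("live-event")
channel.attach(fe1, "conn-1")
channel.attach(fe1, "conn-2")
channel.attach(fe2, "conn-3")
frontend_sends = channel.publish("kick-off")
```

In this model the channel does work proportional to the number of interested frontends, not the number of connections; the per-connection work is spread across the frontend layer.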

Having two tiers of fanout within a region imposes a theoretical limit on the fanout achievable. However, the fanout achievable in practice is very high. Suppose, say, that a channel can fan out to 1,000 frontend instances, and that each of those can maintain 1 million connections; then the maximum possible fanout is to 1 billion connections. This far exceeds the number of connections that need to share a single channel in realistic use cases. Architectures that do not have tiered fanout in this way are unable to scale on this dimension.
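The arithmetic behind that figure is simply the product of the fanout at each tier. As a small worked example, using the illustrative numbers from the paragraph above (the `max_fanout` helper is a hypothetical name, and the figures are not measured limits):

```python
from math import prod


def max_fanout(*tier_fanouts: int) -> int:
    """Upper bound on deliveries per message: the product of the per-tier fanouts."""
    return prod(tier_fanouts)


frontends_per_channel = 1_000          # tier 1: channel -> frontends
connections_per_frontend = 1_000_000   # tier 2: frontend -> connections
limit = max_fanout(frontends_per_channel, connections_per_frontend)  # 1,000,000,000
```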

Summary

Scalability is the ability to change to accommodate varying workloads. It is an essential element of any system that is required to handle very large or unpredictable workloads. Horizontal scalability in particular, with automatic scaling, is the way that systems are built to handle unlimited workloads. This is also the key characteristic of serverless infrastructure, which enables developers to hand over not only the operations of server infrastructure, but also the design challenges of architecting for scale. Horizontally scalable platforms form the core of virtually all modern cloud services.

Ably has been designed around these principles, and they apply across multiple dimensions, enabling Ably to handle vast realtime messaging workloads. We continue to invest in advancing the capabilities of the Ably platform in line with the growing adoption of realtime features in online services and applications, and with their ever-increasing scale.

Feel free to get in touch if you want to discuss how Ably could address the demands of your workload.